Proving ROI with Data-Driven AI Agent Experiments

Published October 9th, 2025

What You’ll Learn in 5 Minutes (or Build in 30)

Key Findings from Our Experiments:

- Unexpected discovery: Free Mistral model is not only $0 but also significantly faster than Claude Haiku

- Cost paradox revealed: “Free” security agent increased total system costs by forcing downstream agents to compensate

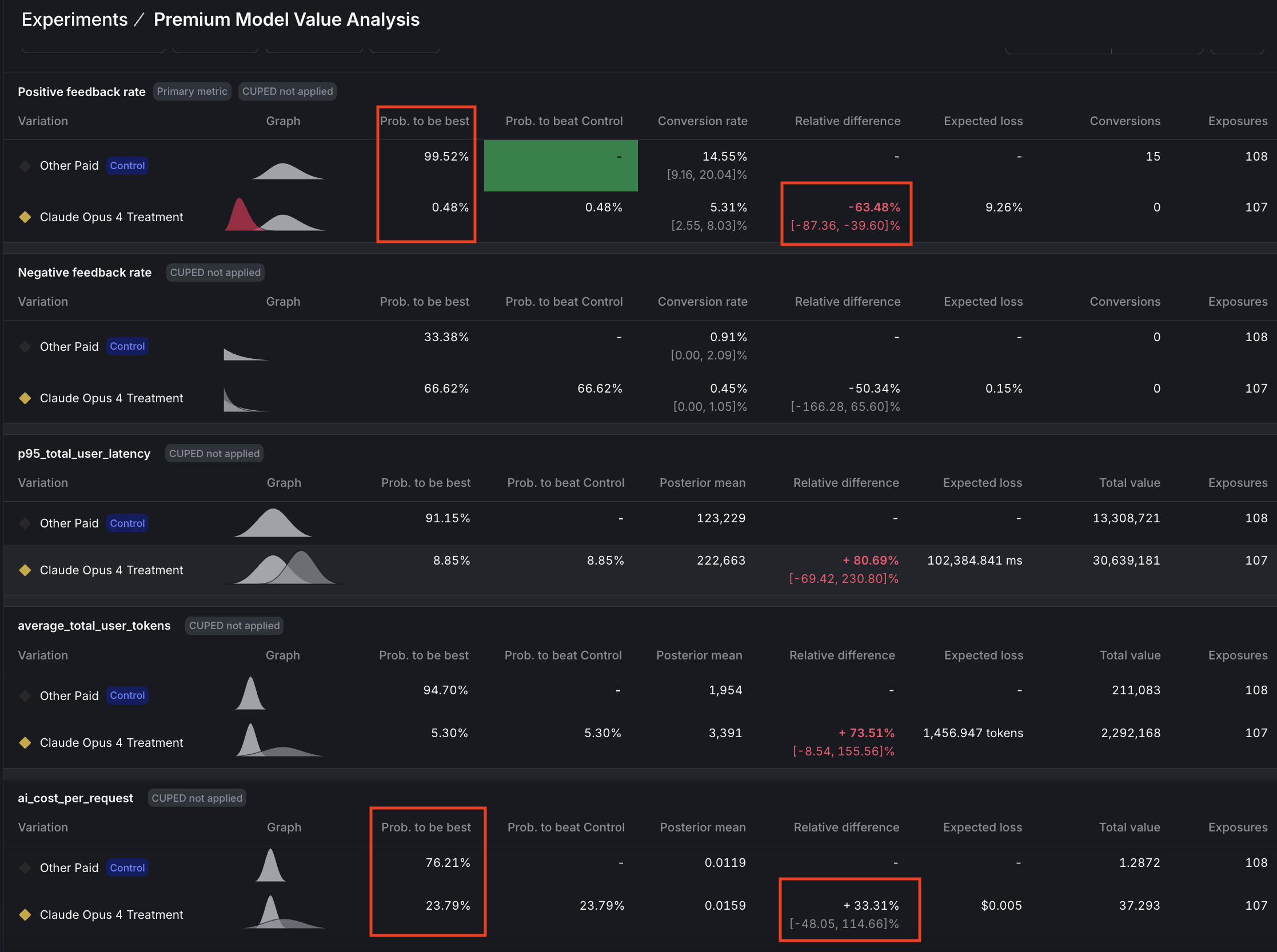

- Premium model failure: Claude Opus 4 performed 64% worse than GPT-4o despite costing 33% more

- Sample size reality: High-variance metrics (cost, feedback) require 5-10x more data than low-variance ones (latency)

The Problem

Your CEO asks: “Is the new expensive AI model worth it?”

Your finance team wonders: “Does the enhanced privacy justify the cost?”

Without experiments, you’re guessing. This tutorial shows you how to measure the truth and sometimes discover unanticipated gains.

The Solution: Real Experiments, Real Answers

In 30 minutes, you’ll run actual A/B tests that reveal:

Will aggressive PII redaction hurt user satisfaction?

Is Claude Opus 4 worth 33% more than GPT-4o?

Part 3 of 3 of the series: Chaos to Clarity: Defensible AI Systems That Deliver on Your Goals

Quick Start Options

Option 1: Just Want the Concepts? (5 min read)

Skip to Understanding the Experiments to learn the methodology without running code.

Option 2: Full Hands-On Tutorial (30 min)

Follow the complete guide to run your own experiments.

Prerequisites for Hands-On Tutorial

Required from Previous Parts:

- Active LaunchDarkly project completed from Part 1 & Part 2

- API keys: Anthropic, OpenAI, LaunchDarkly. Sign up for a free account here and then follow these instructions to get your API access token.

Investment:

- Time: ~30 minutes

- Cost: $25-35 default ($5-10 with

--queries 50)

Reduce Experiment Costs

The default walk-through uses Claude Opus 4 (premium model) for testing. To reduce costs while still learning the experimentation patterns, you can modify bootstrap/tutorial_3_experiment_variations.py in your cloned repository to test with the free Mistral model instead:

In the create_premium_model_variations function, change:

This reduces the experiment cost by about $20 (you’ll still have costs from the control group using GPT-4o and other agents in the system).

How the Experiments Work

The Setup: Your AI system will automatically test variations on simulated users, collecting real performance data that flows directly to LaunchDarkly for statistical analysis.

The Process:

- Traffic simulation generates queries from your actual knowledge base

- Each user gets randomly assigned to experiment variations

- AI responses are evaluated for quality and tracked for cost/speed

- LaunchDarkly calculates statistical significance automatically

Note: The two experiments can run independently. Each user can participate in both, but the results are analyzed separately.

Experiment Methodology: Our supervisor agent routes PII queries to the security agent (then to support), while clean queries go directly to support. LaunchDarkly tracks metrics at the user level across all agents, revealing system-wide effects.

Understanding Your Two Experiments

Experiment 1: Security Agent Analysis

Question: Does Strict Security (free Mistral model with aggressive PII redaction) improve performance without harming user experience or significantly increasing system costs?

Variations (50% each):

- Control: Basic Security (Claude Haiku, moderate PII redaction)

- Treatment: Strict Security (Mistral free, aggressive PII redaction)

Success Criteria:

- Positive feedback rate: stable or improving (not significantly worse)

- Cost increase: ≤15% with ≥75% confidence

- Latency increase: ≤3 seconds (don’t significantly slow down)

- Enhanced privacy protection delivered

Experiment 2: Premium Model Value Analysis

Question: Does Claude Opus 4 justify its premium cost vs GPT-4o?

Variations (50% each):

- Control: GPT-4o with full tools (current version)

- Treatment: Claude Opus 4 with identical tools

Success Criteria (must meet 90% threshold):

- ≥15% positive feedback rate improvement by Claude Opus 4

- Cost-value ratio ≥ 0.25 (positive feedback rate gain % ÷ cost increase %)

Setting Up Metrics and Experiments

Step 1: Configure Metrics (5 minutes)

Quick Metric Setup

Navigate to Metrics in LaunchDarkly and create three custom metrics:

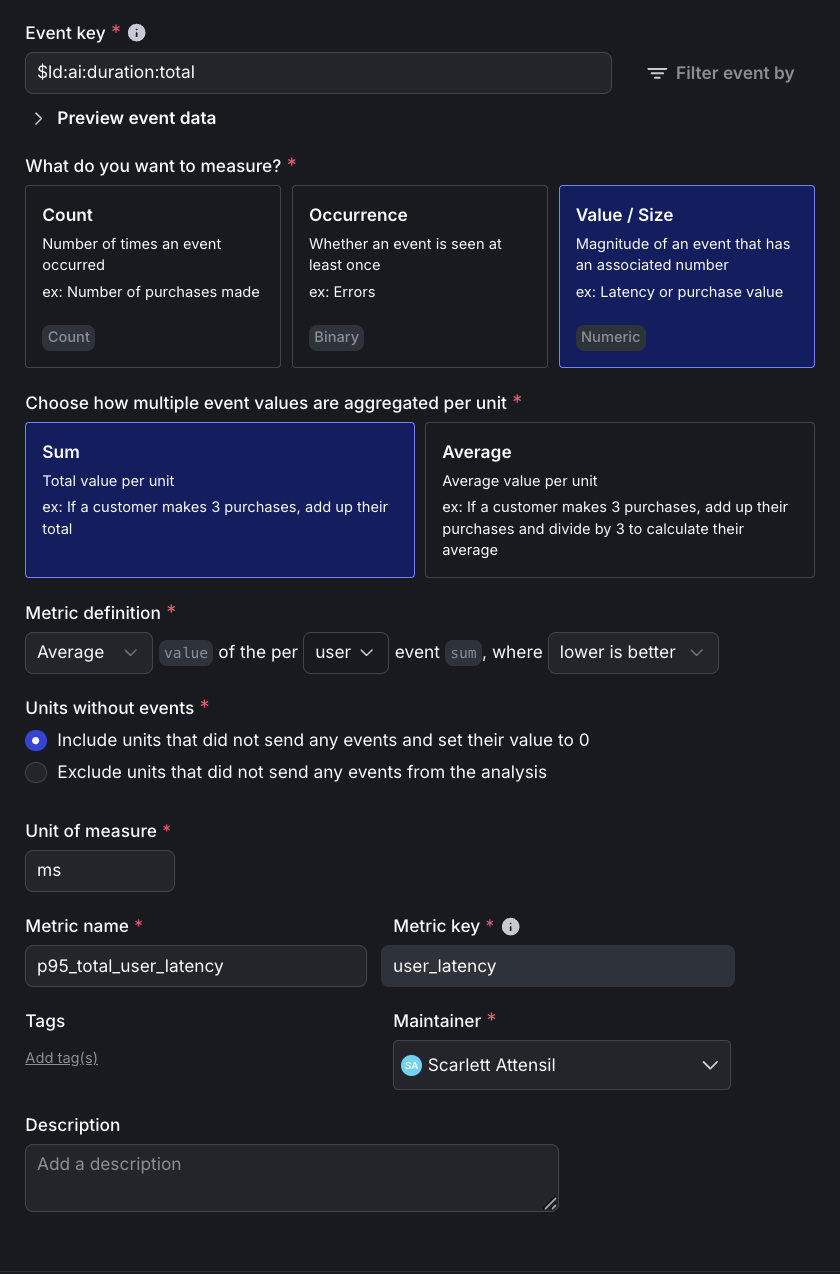

See detailed setup for P95 Latency

- Event key:

$ld:ai:duration:total - Type: Value/Size → Numeric, Aggregation: Sum

- Definition: P95, value, user, sum, “lower is better”

- Unit:

ms, Name:p95_total_user_latency

View other metric configurations

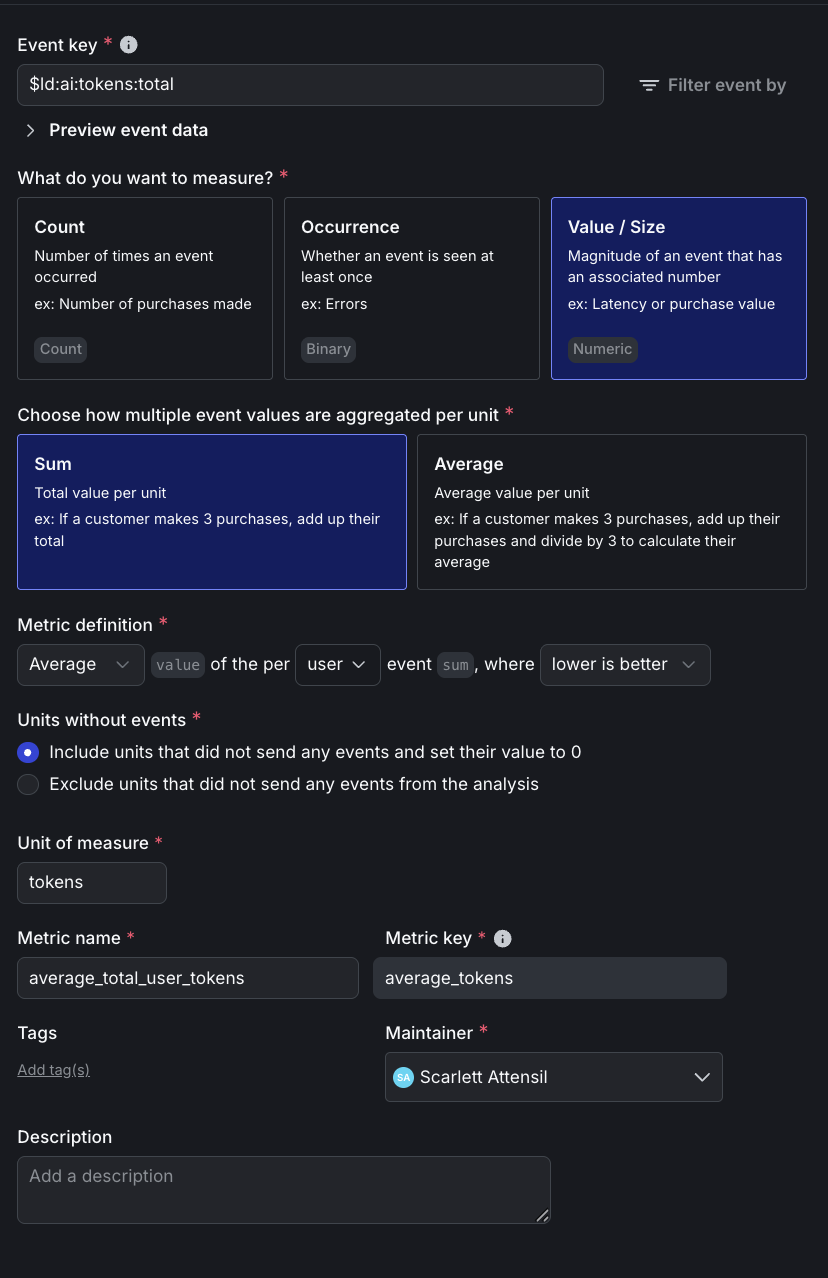

- Tokens: Event key

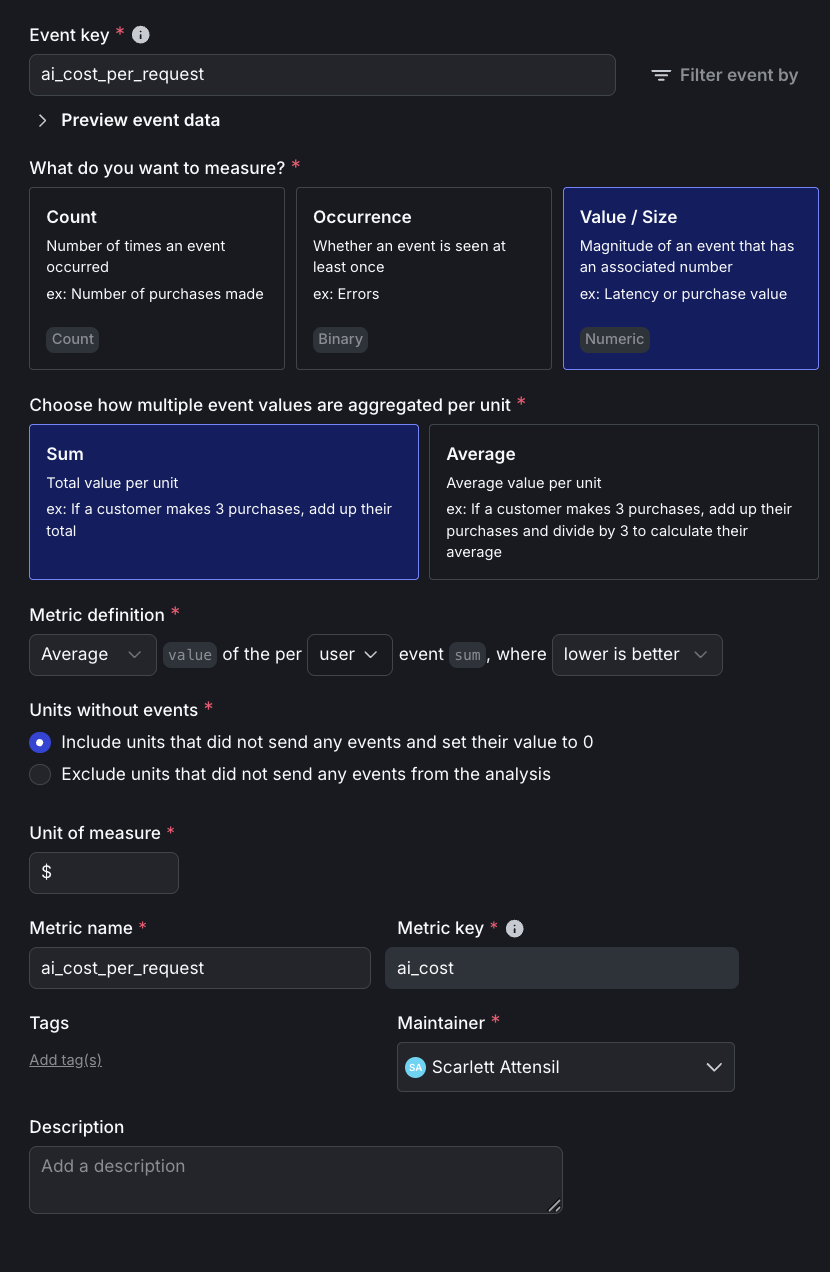

$ld:ai:tokens:total, Name:average_total_user_tokens, Average aggregation - Cost: Event key

ai_cost_per_request, Name:ai_cost_per_request, Average in dollars

The cost tracking is implemented in utils/cost_calculator.py, which calculates actual dollar costs using the formula (input_tokens × input_price + output_tokens × output_price) / 1M. The system has pre-configured pricing for each model (as of October 2025): GPT-4o at $2.50/$10 per million tokens, Claude Opus 4 at $15/$75, and Claude Sonnet at $3/$15. When a request completes, the cost is immediately calculated and sent to LaunchDarkly as a custom event, enabling direct cost-per-user analysis in your experiments.

Step 2: Create Experiment Variations

Create the experiment variations using the bootstrap script:

This creates the claude-opus-treatment variation for the Premium Model Value experiment. To verify the script worked correctly, navigate to your support-model-config feature flag in LaunchDarkly - you should now see the claude-opus-treatment variation alongside your existing variations. The Security Agent Analysis experiment will use your existing baseline and enhanced variations. Both experiments use the existing other-paid configuration as their control group.

Step 3: Configure Security Agent Experiment

Click for details

Navigate to AI Configs → security-agent. In the right navigation menu, click the plus (+) sign next to Experiments to create a new experiment

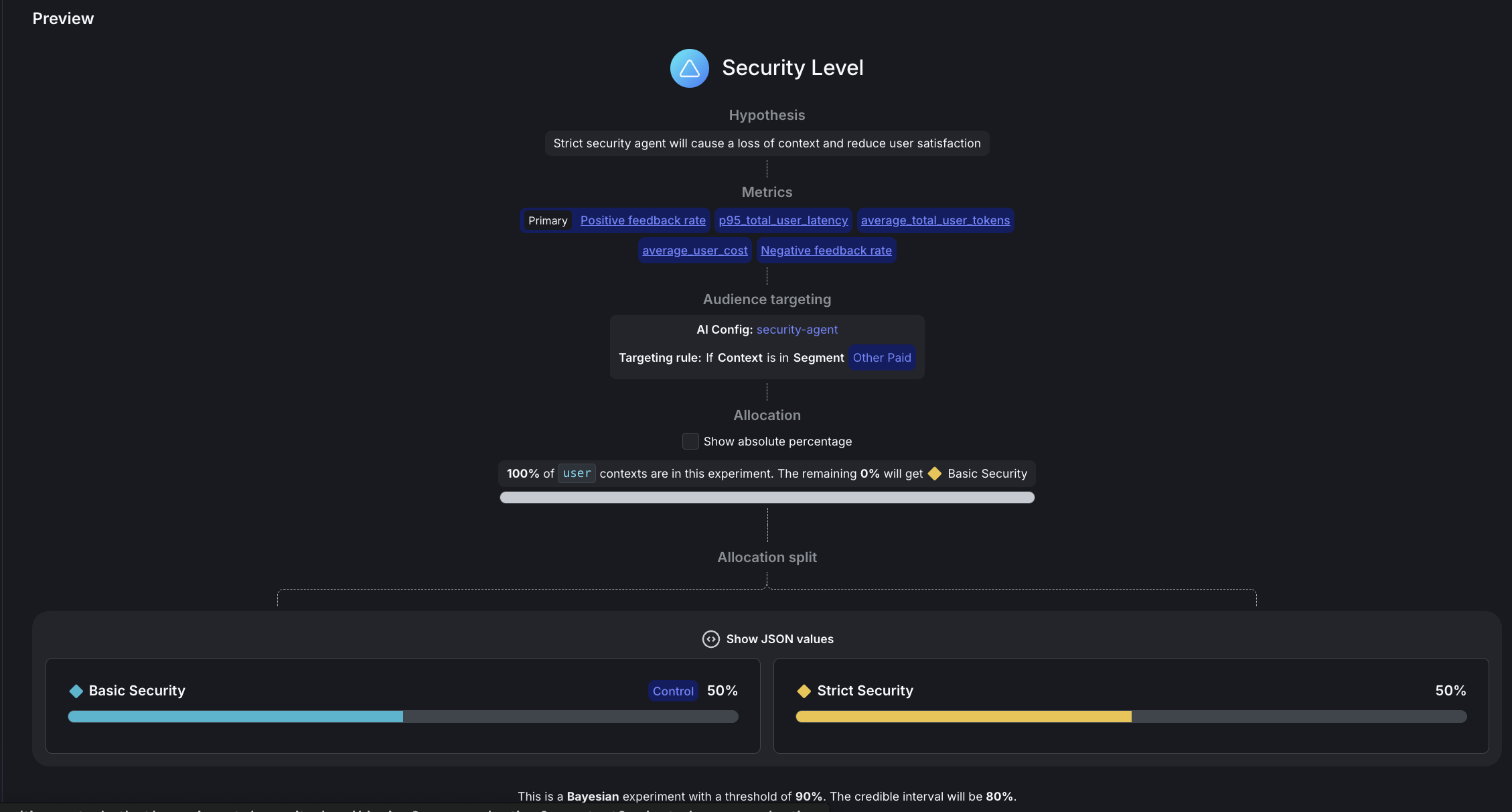

Experiment Design

Name: Security Level

Hypothesis and Metrics

Hypothesis: Enhanced security improves safety compliance without significantly harming positive feedback rates

Randomize by: user

Metrics: Click “Select metrics or metric groups” and add:

Positive feedback rate→ Select first to set as PrimaryNegative feedback ratep95_total_user_latencyai_cost_per_request

Audience Targeting

Flag or AI Config

- Click the dropdown and select security-agent

Targeting rule:

- Click the dropdown and select Rule 4

- This will configure:

If Context→is in Segment→Other Paid

Audience Allocation

Variations served outside of this experiment:

Basic Security

Sample size: Set to 100% of users in this experiment

Variations split: Click “Edit” and configure:

Note: Before setting these percentages, scroll down to the Control field below and set Basic Security as the control variation first, otherwise you won’t be able to allocate 50% traffic to it.

pii-detector:0%Basic Security:50%Strict Security:50%

Control:

Basic Security

Statistical Approach and Success Criteria

Statistical approach: Bayesian

Threshold: 90%

Click “Save” Click “Start experiment” to launch.

Note: You may see a “Health warning” indicator after starting the experiment. This is normal and expected when no variations have been exposed yet. The warning will clear once your experiment starts receiving traffic and data begins flowing.

Step 4: Configure Premium Model Experiment

Click for details

Navigate to AI Configs → support-agent. In the right navigation menu, click the plus (+) sign next to Experiments to create a new experiment

Experiment Design

Name: Premium Model Value Analysis

Hypothesis and Metrics

Hypothesis: Claude Opus 4 justifies premium cost with superior positive feedback rate

Randomize by: user

Metrics: Click “Select metrics or metric groups” and add:

Positive feedback rate→ Select first to set as PrimaryNegative feedback ratep95_total_user_latencyaverage_total_user_tokensai_cost_per_request

Audience Targeting

Flag or AI Config

- Click the dropdown and select support-agent

Targeting rule:

- Click the dropdown and select Rule 4

- This will configure:

If Context→is in Segment→Other Paid

Audience Allocation

Variations served outside of this experiment:

other-paid

Sample size: Set to 100% of users in this experiment

Variations split: Click “Edit” and configure:

Note: Before setting these percentages, scroll down to the Control field below and set other-paid as the control variation first, otherwise you won’t be able to allocate 50% traffic to it.

rag-search-enhanced:0%eu-free:0%eu-paid:0%other-free:0%other-paid:50%international-standard:0%claude-opus-treatment:50%

Control:

other-paid

Statistical Approach and Success Criteria

Statistical approach: Bayesian

Threshold: 90%

Click “Save” Click “Start experiment” to launch.

Note: You may see a “Health warning” indicator after starting the experiment. This is normal and expected when no variations have been exposed yet. The warning will clear once your experiment starts receiving traffic and data begins flowing.

Understanding Your Experimental Design

If Two Independent Experiments Running Concurrently:

Since these are the same users, each user experiences:

- One security variation (

Basic SecurityorStrict Security) - One model variation (

Claude Opus 4 TreatmentOROther Paid (GPT-4o))

Random assignment ensures balance: ~50 users get each combination naturally.

Generating Experiment Data

Step 5: Run Traffic Generator

Start your backend and generate realistic experiment data. Choose between sequential or concurrent traffic generation:

Concurrent Traffic Generator (Recommended for large datasets)

For faster experiment data generation with parallel requests:

Configuration:

- 200 queries by default (edit script to adjust)

- 10 concurrent requests running in parallel

- 2000-second timeout (33 minutes) per request to handle MCP tool rate limits

Note: runtime depends largely on if you retain MCP tool enablement as these take much longer to complete.

For smaller test runs or debugging

Sequential Traffic Generator (Simple, one-at-a-time)

What Happens During Simulation:

-

Knowledge extraction Claude analyzes your docs and identifies 20+ realistic topics

-

Query generation Each test randomly selects from these topics for diversity

-

AI-powered evaluation Claude judges responses as thumbs_up/thumbs_down/neutral

-

Automatic tracking All metrics flow to LaunchDarkly in real-time

Generation Output:

Performance Notes:

- Most queries complete in 10-60 seconds

- Queries using

semantic_scholarMCP tool may take 5-20 minutes due to API rate limits - Concurrent execution handles slow requests gracefully by continuing with others

- Failed/timeout requests (less than 5% typically) don’t affect experiment validity

Monitor Results: Refresh your LaunchDarkly experiment “Results” tabs to see data flowing in. Cost metrics appear as custom events alongside feedback and token metrics.

Interpreting Your Results (After Data Collection)

Once your experiments have collected data from ~100 users per variation, you’ll see results in the LaunchDarkly UI. Here’s how to interpret them:

1. Security Agent Analysis: Does enhanced security improve safety without significantly impacting positive feedback rates?

Reality Check: Not all metrics reach significance at the same rate. In our security experiment we ran over 2,000 more users than the model experiment, turning off the MCP tools and using --pii-percentage 100 to maximize pii detection:

- Latency: 87% confidence (nearly significant, clear 36% improvement)

- Cost: 21% confidence (high variance, needs 5-10x more data)

- Feedback: 58% confidence (sparse signal, needs 5-10x more data)

This is normal! Low-variance metrics (latency, tokens) prove out quickly. High-variance metrics (cost, feedback) need massive samples. You may not be able to wait for every metric to hit 90%. Use strong signals on some metrics plus directional insights on others.

✅ VERDICT: Deploy Strict Security: Enhanced Privacy is Worth the Modest Cost

The results tell a compelling story: Latency (p95) is approaching significance with 87% confidence that Strict Security is faster, a win we didn’t anticipate. Cost per request shows 79% confidence that Basic Security costs less (or conversely, 21% confidence that Strict costs more), also approaching significance. Meanwhile, positive feedback rate remains inconclusive with only 58% confidence that Strict Security performs better, indicating we need more data to draw conclusions about user satisfaction.

The Hidden Cost Paradox:

Strict Security uses FREE Mistral for PII detection, yet increases total system cost by 14.6%.

Why does the support agent cost more? More aggressive PII redaction removes context, forcing the support agent to generate longer, more detailed responses to compensate for the missing information. This demonstrates why system-level experiments matter. Optimizing one agent can inadvertently increase costs downstream.

Decision Logic:

Bottom line: Deploy Strict Security. We expected latency to stay within 3 seconds of baseline, but discovered a 36% improvement instead (87% confidence). Mistral is significantly faster than Claude Haiku. Combined with enhanced privacy protection, this more than justifies the modest 14.5% cost increase (79% confidence).

Read across: At scale, paying ~$0.004 more per request for significantly better privacy compliance and faster responses is a clear win for EU users and privacy-conscious segments.

The Data That Proves It:

2. Premium Model Value Analysis: Does Claude Opus 4 justify its premium cost with superior positive feedback rates?

🔴 VERDICT: Reject Claude Opus 4

The experiment delivered a decisive verdict: Positive feedback rate showed a significant failure with 99.5% confidence that GPT-4o is superior. Cost per request is approaching significance with 76% confidence that Claude Opus is 33% more expensive, while latency (p95) reached significance with 91% confidence that Claude Opus is 81% slower. The cost-to-value ratio tells the whole story: -1.9x, meaning we’re paying 33% more for 64% worse performance: a clear case of premium pricing without premium results.

Decision Logic:

Bottom line: Premium price delivered worse results on every metric. Experiment was stopped when positive feedback rate reached significance.

Read across: GPT-4o dominates on performance and speed and most likely also on cost.

The Numbers Don’t Lie:

Key Insights from Real Experiment Data

-

Low-variance metrics (latency, tokens) reach significance quickly (~1,000 samples). High-variance metrics (cost, feedback) may need 5,000-10,000+ samples. This isn’t a flaw in your experiment but the reality of statistical power. Don’t chase 90% confidence on every metric; focus on directional insights for high-variance metrics and statistical proof for low-variance ones.

-

Using a free Mistral model for security reduced that agent’s cost to $0, yet increased total system cost by 14.5% because downstream agents had to work harder with reduced context. However, the experiment also revealed an unexpected 36% latency improvement. Mistral is not just free but significantly faster. LaunchDarkly’s user-level tracking captured both effects, enabling an informed decision: enhanced privacy + faster responses for ~$0.004 more per request is a worthwhile tradeoff.

-

At 87% confidence for latency (vs 90% target), the 36% improvement is clear enough for decision-making. Perfect statistical significance is ideal, but 85-89% confidence combined with other positive signals (stable feedback, acceptable cost) can justify deployment when the effect size is large.

Experimental Limitations & Mitigations

Model-as-Judge Evaluation

We use Claude to evaluate response quality rather than real users, which represents a limitation of this experimental setup. However, research shows that model-as-judge approaches correlate well with human preferences, as documented in Anthropic’s Constitutional AI paper.

Independent Experiments

While random assignment naturally balances security versions across model versions, preventing systematic bias, you cannot analyze interaction effects between security and model choices. If interaction effects are important to your use case, consider running a proper factorial experiment design.

Statistical Confidence LaunchDarkly uses Bayesian statistics to calculate confidence, where 90% confidence means there’s a 90% probability the true effect is positive. This is NOT the same as p-value < 0.10 from frequentist tests. We set the threshold at 90% (rather than 95%) to balance false positives versus false negatives, though for mission-critical features you should consider raising the confidence threshold to 95%.

Common Mistakes You Just Avoided

❌ “Let’s run the experiment for a week and see”

✅ We defined success criteria upfront (≥15% improvement threshold)

❌ “We need 90% confidence on every metric to ship”

✅ We used 87% confidence + directional signals (36% latency win was decision-worthy)

❌ “Let’s run experiments until all metrics reach significance”

✅ We understood variance (cost/feedback need 5-10x more data than latency)

❌ “Agent-level metrics show the full picture”

✅ We tracked user-level workflows (revealed downstream cost increases)

What You’ve Accomplished

You’ve built a data-driven optimization engine with statistical rigor through falsifiable hypotheses and clear success criteria. Your predefined success criteria ensure clear decisions and prevent post-hoc rationalization. Every feature investment now has quantified business impact for ROI justification, and you have a framework for continuous optimization through ongoing measurable experimentation.

Troubleshooting

Long Response Times (>20 minutes)

If you see requests taking exceptionally long, the root cause is likely the semantic_scholar MCP tool hitting API rate limits, which causes 30-second retry delays. Queries using this tool may take 5-20 minutes to complete. The 2000-second timeout handles this gracefully, but if you need faster responses (60-120 seconds typical), consider removing semantic_scholar from tool configurations. You can verify this issue by checking logs for HTTP/1.1 429 errors indicating rate limiting.

Cost Metrics Not Appearing

If ai_cost_per_request events aren’t showing in LaunchDarkly, first verify that utils/cost_calculator.py has pricing configured for your models. Cost is only tracked when requests complete successfully (not on timeout or error). The system flushes cost events to LaunchDarkly immediately after each request completion. To debug, look for COST CALCULATED: and COST TRACKING (async): messages in your API logs.

Beyond This Tutorial: Advanced AI Experimentation Patterns

Other AI experimentation types you can run in LaunchDarkly

Context from earlier: you ran two experiments:

- Security‑agent test: a bundle change (both prompt/instructions and model changed).

- Premium‑model test: a model‑only change.

AI Configs come in two modes: prompt‑based (single‑step completions) and agent‑based (multi‑step workflows with tools). Below are additional patterns to explore.

Experiments you can run entirely in AI Configs (no app redeploy)

-

Prompt & template experiments (prompt‑based or agent instructions) Duplicate a variation and iterate on system/assistant messages or agent instructions to measure adherence to schema, tone, or qualitative satisfaction. Use LaunchDarkly Experimentation to tie those variations to product metrics.

-

Model‑parameter experiments In a single model, vary parameters like

temperatureormax_tokens, and (optionally) add custom parameters you define (for example, an internalmax_tool_callsor decoding setting) directly on the variation. -

Tool‑bundle experiments (agent mode or tool‑enabled completions) Attach/detach reusable tools from the Tools Library to compare stacks (e.g.,

search_v2, a reranker, or MCP‑exposed research tools) across segments. Keep one variable at a time when possible -

Cost/latency trade‑offs Compare “slim” vs “premium” stacks by segment. Track tokens, time‑to‑first‑token, duration, and satisfaction to decide where higher spend is warranted.

Practical notes

- Use Experimentation for behavior impact (clicks, task success); use the Monitoring tab for LLM‑level metrics (tokens, latency, errors, satisfaction).

- You can’t run a guarded rollout and an experiment on the same flag at the same time. Pick one: guarded rollout for risk‑managed releases, experiment for causal measurement.

Patterns that usually need feature flags and/or custom instrumentation

-

Fine‑grained RAG tuning k‑values, similarity thresholds, chunking, reranker swaps, and cache policy are typically coded inside your retrieval layer. Expose these as flags or AI Config custom parameters if you want to A/B them.

-

Tool‑routing guardrails Fallback flows (e.g., retry with a different tool/model on error), escalation rules, or heuristics need logic in your agent/orchestrator. Gate those behaviors behind flags and measure with custom metrics.

-

Safety guardrail calibration Moderation thresholds, red‑team prompts, and PII sensitivity levers belong in a dedicated safety service or the agent wrapper. Wire them to flags so you can raise/lower sensitivity by segment (e.g., enterprise vs free).

-

Session budget enforcement Monitoring will show token costs and usage, but enforcing per‑session or per‑org budgets (denylist, degrade model, or stop‑tooling) requires application logic. Wrap policies in flags before you experiment.

Targeting & segmentation ideas (works across all the above)

- Route by plan/tier, geo, device, or org using AI Config targeting rules and percentage rollouts.

- Keep variations narrow (one change per experiment) to avoid confounding; reserve “bundle” tests for tool‑stack bake‑offs.

Advanced Practices: Require statistical evidence before shipping configuration changes. Pair each variation with clear success metrics, then A/B test prompt or tool adjustments and use confidence intervals to confirm improvements. When you introduce the new code paths above, protect them behind feature flags so you can run sequential tests, multi-armed bandits for faster convergence, or change your experiment design to understand how prompts, tools, and safety levers interact.

From Chaos to Clarity

Across this three-part series, you’ve transformed from hardcoded AI configurations to a scientifically rigorous, data-driven optimization engine. Part 1 established your foundation with a dynamic multi-agent LangGraph system controlled by LaunchDarkly AI Configs, eliminating the need for code deployments when adjusting AI behavior. Part 2 added sophisticated targeting with geographic privacy rules, user segmentation by plan tiers, and MCP (Model Context Protocol) tool integration for real academic research capabilities. This tutorial, Part 3 completed your journey with statistical experimentation that proves ROI and guides optimization decisions with mathematical confidence rather than intuition.

You now possess a defensible AI system that adapts to changing requirements, scales across user segments, and continuously improves through measured experimentation. Your stakeholders receive concrete evidence for AI investments, your engineering team deploys features with statistical backing, and your users benefit from optimized experiences driven by real data rather than assumptions. The chaos of ad-hoc AI development has given way to clarity through systematic, scientific product development.

Resources

- LaunchDarkly Experimentation Docs - Deep dive into statistical methods

Remember: Every AI decision backed by data is a risk avoided and a lesson learned. Start small, measure everything, ship with confidence.