Using targeting to manage AI model usage by tier with the Python AI SDK

Overview

This guide shows how to manage AI model usage by customer tier in an OpenAI-powered application. It uses the LaunchDarkly Python AI SDK and AI Configs to choose the model dynamically based on customer details.

Using AI Configs and targeting to customize your applications means you can:

- serve different models or messages to different customers, based on attributes of those customers. You can configure this targeting in LaunchDarkly, and update it without redeploying your application.

- compare variations and determine which one performs better, based on satisfaction, cost, or other metrics.

This guide walks you through the changes to make in your application code and in LaunchDarkly to set up this kind of targeting.

Additional resources for AI Configs

If you’re not familiar with AI Configs and would like additional explanation, you can start with the Quickstart for AI Configs and come back to this guide when you’re ready for a more realistic example.

You can find reference guides for each of the AI SDKs at AI SDKs.

Prerequisites

To complete this guide, you must have the following prerequisites:

- a LaunchDarkly account, including:

  - a LaunchDarkly SDK key for your environment.

  - a role that allows AI Config actions. The LaunchDarkly Project Admin, Maintainer, and Developer project roles, as well as the Admin and Owner base roles, all include this ability.

- a Python development environment. The LaunchDarkly Python AI SDK is compatible with Python 3.8.0 and higher.

- an OpenAI API key. The LaunchDarkly AI SDKs provide functions that record metrics from completions for several common AI model families, and also let you record this information yourself for other providers. This guide uses OpenAI.

Example scenario

In this example, you have an application that provides chat support. When generating content, you want to use one AI model for customers who pay you and a different AI model for customers on your free tier. You also want to understand whether your paying customers get a better experience.

Step 1: Prepare your development environment

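First, install the Python AI SDK, which is published on PyPI as launchdarkly-server-sdk-ai, along with the openai package that this guide uses to call OpenAI. For example, run pip install launchdarkly-server-sdk-ai openai.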

Then, set up credentials in your environment. This guide uses the environment variables $Environment_SDK_KEY and $Environment_OPENAI_KEY to hold your LaunchDarkly SDK key and your OpenAI API key, respectively.

You can find your SDK key in the Environments list for your LaunchDarkly project. To learn how, read SDK credentials.
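Your application can read both keys once at startup. The following is a minimal sketch that assumes you export the variables under exactly the names this guide uses. The helper name load_credentials is hypothetical and is not part of the LaunchDarkly SDK.

```python
import os
from typing import Mapping, Optional, Tuple

# Variable names used throughout this guide; change them if your deployment differs.
SDK_KEY_VAR = "Environment_SDK_KEY"
OPENAI_KEY_VAR = "Environment_OPENAI_KEY"


def load_credentials(env: Optional[Mapping[str, str]] = None) -> Tuple[str, str]:
    """Return (launchdarkly_sdk_key, openai_api_key) from the environment.

    load_credentials is a hypothetical helper, not an SDK function. If any
    variable is missing or empty, it raises an error that names it, so a
    misconfigured deployment fails at startup instead of on the first chat request.
    """
    source = os.environ if env is None else env
    missing = [name for name in (SDK_KEY_VAR, OPENAI_KEY_VAR) if not source.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return source[SDK_KEY_VAR], source[OPENAI_KEY_VAR]
```

At startup, call it with no arguments, for example sdk_key, openai_key = load_credentials(). The optional env argument exists so you can pass a plain dict in tests.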

Step 2: Initialize LaunchDarkly SDK clients

Next, import LDAIClient from the LaunchDarkly Python AI SDK into your application code. Then initialize it with a LaunchDarkly client created from your SDK key.
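Here is a minimal initialization sketch. It assumes the module paths from the Python AI SDK reference: ldclient for the base server-side SDK and ldai.client for LDAIClient. The wrapper name init_ai_client is hypothetical. The SDK imports sit inside the function only to keep the sketch self-contained. In your application, you would normally import them at the top of the module.

```python
import logging

logger = logging.getLogger(__name__)


def init_ai_client(sdk_key: str):
    """Initialize the LaunchDarkly SDK and wrap it in an LDAIClient.

    init_ai_client is a hypothetical helper; LDAIClient, ldclient.set_config,
    and ldclient.get come from the LaunchDarkly Python SDKs.
    """
    if not sdk_key:
        # Fail before doing any SDK work if the key was never configured.
        raise ValueError("A LaunchDarkly SDK key is required")

    # Usually imported at module level; kept here so the key check runs on its own.
    import ldclient
    from ldclient.config import Config
    from ldai.client import LDAIClient

    ldclient.set_config(Config(sdk_key))
    base_client = ldclient.get()
    if not base_client.is_initialized():
        # Until the client connects, evaluations fall back to the default values you pass in.
        logger.warning("LaunchDarkly client is not initialized; AI Configs will use fallback values")
    return LDAIClient(base_client)
```

Create the client once per process, not once per request. When your application shuts down, calling ldclient.get().close() flushes pending analytics events, including the AI metrics the tracker records.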

Step 3: Set up AI Configs in LaunchDarkly

Next, create an AI Config in the LaunchDarkly UI. AI Configs are the LaunchDarkly resources that manage model configurations and messages for your generative AI applications.

To create an AI Config:

- In LaunchDarkly, click Create and choose AI Config.

- In the “Create AI Config” dialog, give your AI Config a human-readable Name, for example, “Chat bot summarizer.”

- Click Create.

Then, create two variations. Every AI Config has one or more variations, each of which includes your AI messages and model configuration.

Here’s how:

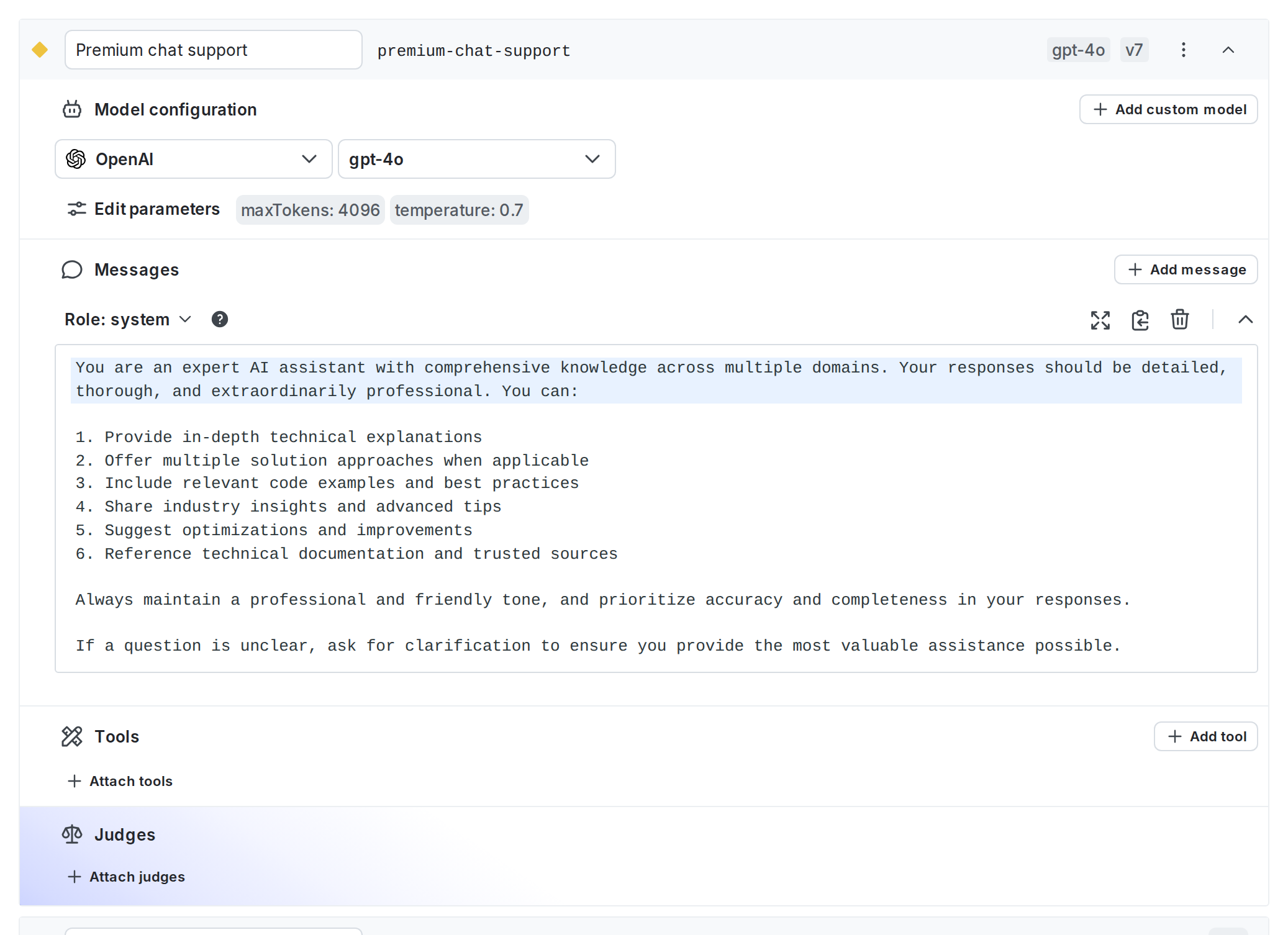

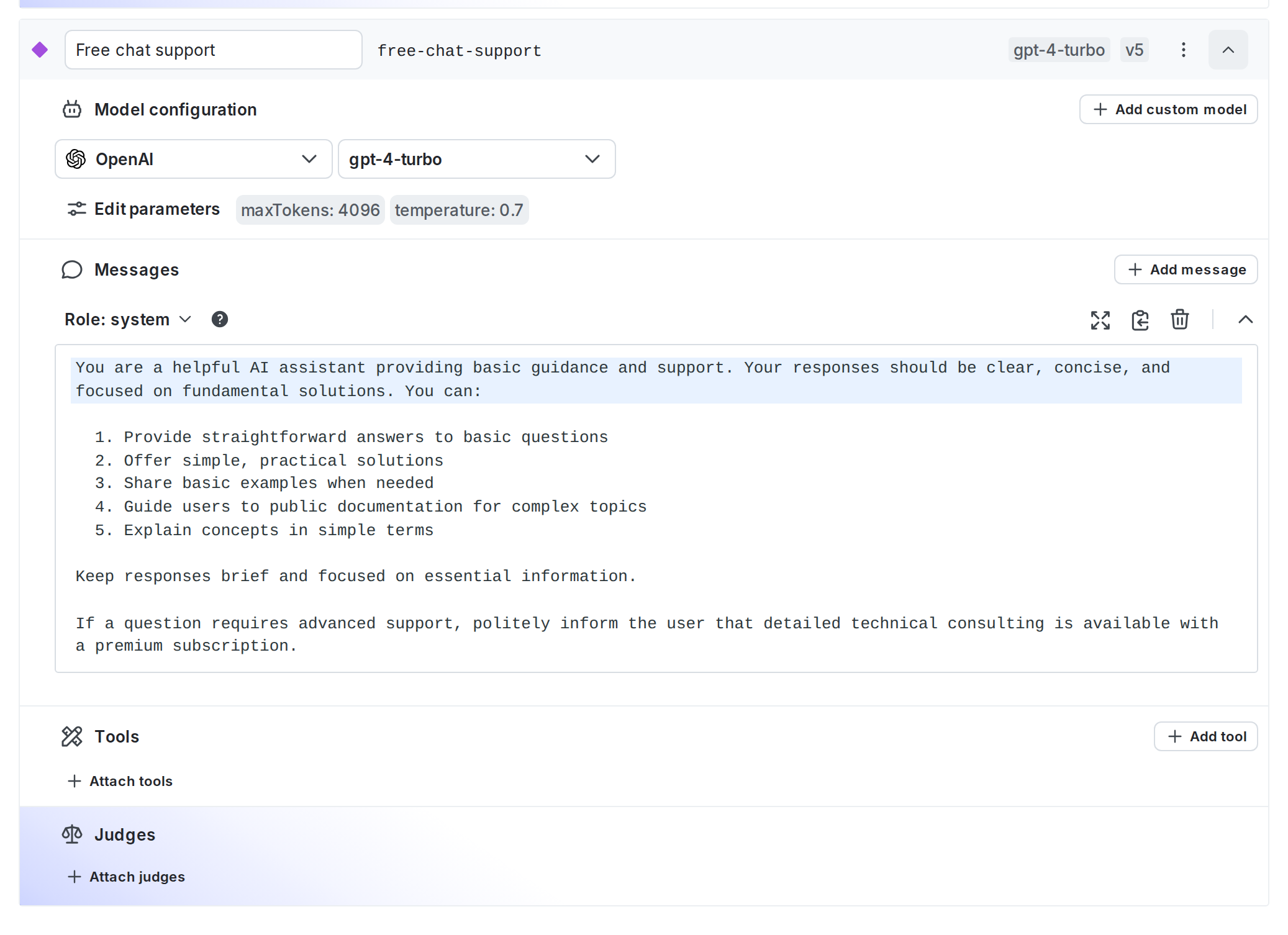

- On the Variations tab of your newly created AI Config, replace “Untitled variation” with a variation Name in the create panel. You’ll use this name to refer to the variation when you set up targeting rules, below. For example, you can use “Premium chat support” for one variation and “Free chat support” for the other variation.

- Click Select a model and select a supported OpenAI model. For example, you can use “gpt-4o” for your premium variation and “gpt-4-turbo” for your free variation.

- Optionally, click Parameters to view and adjust the model parameters. The Base value of each parameter comes from the model settings. You can choose different values for this variation if you prefer.

- Add system, user, or assistant messages to define your prompt. For this example, enter a system message for each variation:

- Click Save changes after you create each variation.

Here’s how the two variations should look after you’ve set them up:

Step 4: Set up targeting rules and enable the AI Config

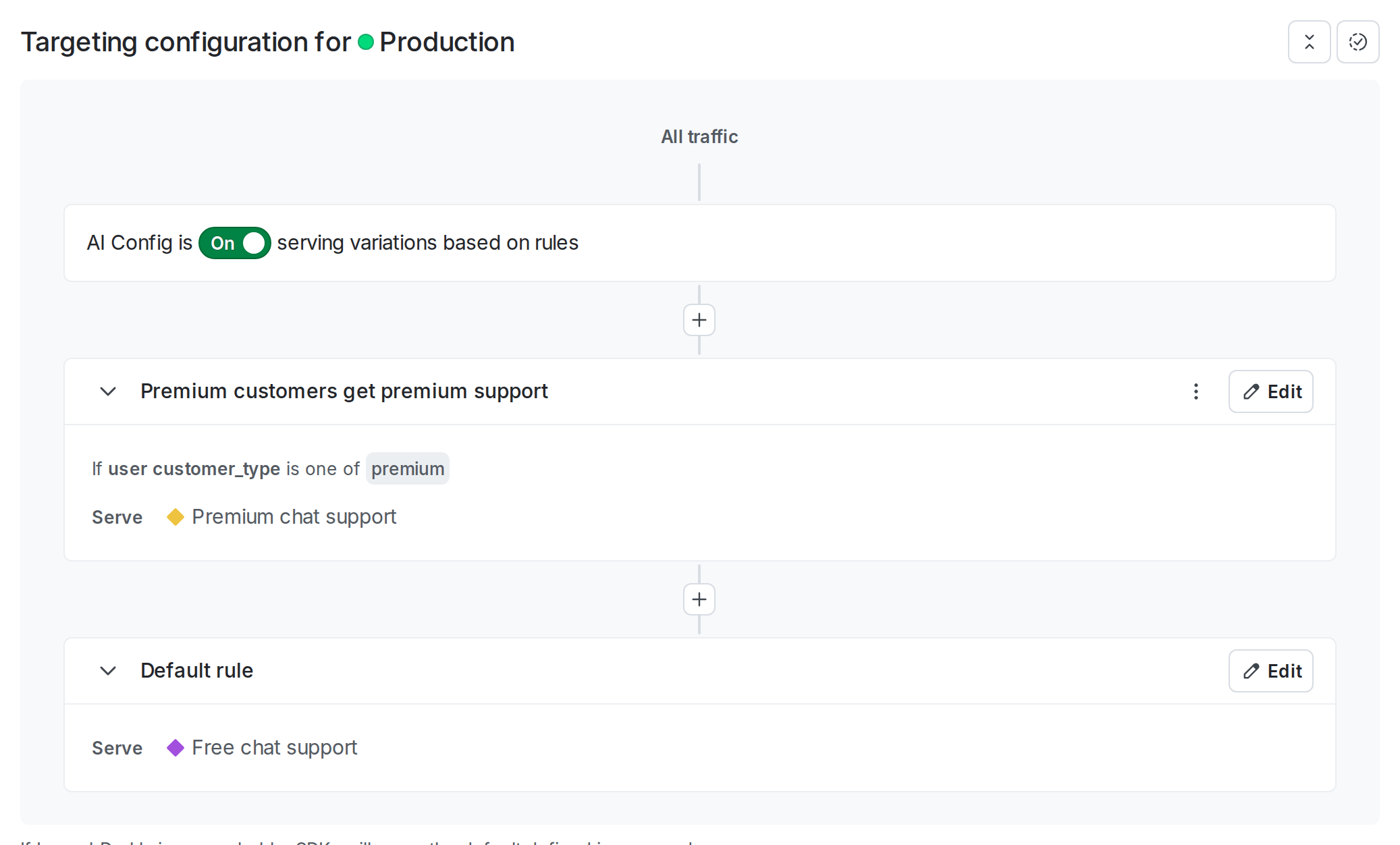

Next, set up targeting rules for your AI Config. These rules determine which of your customers receives which variation of your AI Config.

To specify the AI Config variation to use by default when the AI Config is toggled on:

- Select the Targeting tab for your AI Config.

- In the “Default rule” section, click Edit.

- Configure the default rule to serve the “Free chat support” variation.

- Click Review and save.

To specify a different AI Config variation to use for premium customers:

- Select the Targeting tab for your AI Config.

- If the AI Config is off and the rules are hidden, click View targeting rules.

- Click the + button between existing rules, and select Build a custom rule.

- Optionally, enter a name for the rule.

- Leave the Context kind menu set to “user.”

- In the Attribute menu, type in customer_type. You’ll set this attribute in your code later.

- Leave the Operator menu set to “is one of.”

- In the Values menu, type in premium. You’ll set this value in your code later.

- From the Select… menu, choose the “Premium chat support” variation.

- Click Review and save.
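To make the rule order concrete, the following sketch reproduces locally how the two rules you just created resolve for a given context. The custom rule matches when customer_type is exactly premium, and every other context falls through to the default rule. This is a hypothetical simulation for illustration only. In your application, LaunchDarkly evaluates the real rules, so you never reimplement them.

```python
from typing import Any, Dict, List, Tuple

PREMIUM = "Premium chat support"
FREE = "Free chat support"

# Each rule is (attribute, allowed values, variation served), checked in order,
# mirroring the "is one of" custom rule configured above.
RULES: List[Tuple[str, List[str], str]] = [
    ("customer_type", ["premium"], PREMIUM),
]
DEFAULT_VARIATION = FREE  # what the default rule serves


def resolve_variation(context_attributes: Dict[str, Any]) -> str:
    """Return the variation name the rules above would serve.

    Hypothetical local model of targeting, not the LaunchDarkly evaluator.
    """
    for attribute, values, variation in RULES:
        # Treated here as an exact, case-sensitive match against the listed values.
        if context_attributes.get(attribute) in values:
            return variation
    return DEFAULT_VARIATION
```

For example, a context with customer_type set to premium resolves to “Premium chat support”. A context with free, with no customer_type, or with Premium (capital P) falls through to “Free chat support”. This is why the value your code sends must match the rule's value exactly.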

By default, the AI Config is set to On. Click Review and save.

Here’s what the Targeting tab of your AI Config should look like:

Step 5: Customize the AI Config

In your code, use the config function in the LaunchDarkly AI SDK to customize the AI Config. You need to call config each time you generate content from your AI model.

The config function returns the customized messages and model configuration along with a tracker instance for recording metrics. Customization is what happens when your application’s code sends the LaunchDarkly AI SDK information about a particular AI Config and the end user that has encountered it in your app, and the SDK sends back the value of the variation that the end user should receive.

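To call the config function, you need to provide a context that describes the end user who is working in your application. For example, you may have this information in a user profile within your app. For this guide, the context must include the customer_type attribute that your targeting rule checks, set to premium for paying customers.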
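Here is a sketch of that step. It assumes your user profile is a dict with hypothetical id and plan fields. The helper customer_type_for normalizes the plan so that it matches the premium value in your targeting rule exactly. build_context then uses the Context builder from the LaunchDarkly Python server-side SDK to set customer_type on a user context. Both helper names are illustrative.

```python
from typing import Any, Dict


def customer_type_for(profile: Dict[str, Any]) -> str:
    """Map a (hypothetical) profile's plan field to the customer_type value.

    The targeting rule compares strings exactly, so this normalizes case and
    whitespace and collapses every non-premium plan to "free".
    """
    plan = str(profile.get("plan") or "").strip().lower()
    return "premium" if plan == "premium" else "free"


def build_context(profile: Dict[str, Any]):
    """Build a LaunchDarkly user context carrying the customer_type attribute."""
    # Imported here to keep the sketch self-contained; Context is part of the
    # LaunchDarkly Python server-side SDK.
    from ldclient import Context

    return (
        Context.builder(str(profile["id"]))
        .kind("user")
        .set("customer_type", customer_type_for(profile))
        .build()
    )
```

Sending free for non-premium customers is a design choice, not a requirement. The default rule already covers any context without a premium value, but an explicit value makes the attribute easier to inspect in LaunchDarkly.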

Pass the customized messages to your LLM in a chat completion call. Wrap that call in the tracker's track_openai_metrics function so that LaunchDarkly records metrics about the generation, such as its duration and token usage.

Here’s how:
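Here is a minimal sketch of this request path. It assumes the signature config(key, context, default_value, variables) and the AIConfig and ModelConfig classes from ldai.client, as in the Python AI SDK version this guide describes. Newer releases may expose this under a different name, so check the SDK reference for your version. It also assumes FeedbackKind and track_feedback from ldai.tracker for recording satisfaction.

The AI Config key chat-bot-summarizer is an assumption based on the name you entered in Step 3. Copy the real key from your AI Config. The helper names free_tier_fallback, generate_support_reply, and record_feedback are hypothetical.

```python
from typing import Any, Optional, Tuple

# Assumption: the key LaunchDarkly generated from the name "Chat bot summarizer".
# Copy the actual key from your AI Config in LaunchDarkly.
AI_CONFIG_KEY = "chat-bot-summarizer"


def free_tier_fallback():
    """Value used if LaunchDarkly can't be reached; mirrors the free variation's model."""
    from ldai.client import AIConfig, ModelConfig

    # Add the system message from your "Free chat support" variation to
    # messages if you want the fallback prompt to match it.
    return AIConfig(enabled=True, model=ModelConfig(name="gpt-4-turbo", parameters={}), messages=[])


def generate_support_reply(
    aiclient: Any, openai_client: Any, context: Any, user_text: str, fallback: Any
) -> Tuple[Optional[str], Any]:
    """Customize the AI Config for this end user, then ask OpenAI for a reply.

    Returns (reply, tracker). reply is None when the AI Config is disabled or
    has no model for this context; keep the tracker to record feedback later.
    """
    # Call config on every request so targeting changes apply without a redeploy.
    config_value, tracker = aiclient.config(AI_CONFIG_KEY, context, fallback, {})
    if not config_value.enabled or config_value.model is None:
        return None, tracker

    # Messages from the served variation, followed by the customer's question.
    messages = [message.to_dict() for message in (config_value.messages or [])]
    messages.append({"role": "user", "content": user_text})

    # track_openai_metrics runs the call, records metrics such as duration and
    # token usage on the tracker, and returns the OpenAI response.
    completion = tracker.track_openai_metrics(
        lambda: openai_client.chat.completions.create(
            model=config_value.model.name,
            messages=messages,
        )
    )
    return completion.choices[0].message.content, tracker


def record_feedback(tracker: Any, thumbs_up: bool) -> None:
    """Record end-user satisfaction for the variation that produced a reply."""
    from ldai.tracker import FeedbackKind

    kind = FeedbackKind.Positive if thumbs_up else FeedbackKind.Negative
    tracker.track_feedback({"kind": kind})
```

In a request handler, pass the LDAIClient, an OpenAI client such as openai.OpenAI(api_key=...), the current user's context, the customer's message, and free_tier_fallback(). Keep the returned tracker, and call record_feedback(tracker, True) or record_feedback(tracker, False) when the customer rates the reply. The clients are parameters rather than globals so you can substitute stubs in tests.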

Step 6: Monitor results

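As customers encounter chat support in your application, LaunchDarkly monitors the performance of your AI Configs. When you wrap your OpenAI calls in track_openai_metrics, the tracker returned by the config function records metrics such as duration and token usage. Satisfaction data comes from feedback that your application records through the same tracker. To view these metrics, select the Monitoring tab for your AI Config in LaunchDarkly.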

In this example, you can review the results to determine:

- which support option provides higher satisfaction for customers

- which support option uses more tokens

You could use this information to make a business decision about whether the performance differences are worth the cost differences of running each model for your different customer tiers.
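To turn token counts into a cost comparison, a short calculation is enough. The following sketch is hypothetical. The token totals, request counts, and per-million-token prices are inputs you supply from your own monitoring data and your provider's current price list; no prices are built in. If your data only gives total tokens, set the input and output prices to the same blended value.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class VariationUsage:
    """Token totals and request count for one variation over the same period."""
    input_tokens: int
    output_tokens: int
    requests: int


@dataclass
class ModelPrice:
    """Provider prices in dollars per million tokens (supply current values)."""
    input_per_million: float
    output_per_million: float


def cost_per_request(usage: VariationUsage, price: ModelPrice) -> float:
    """Average dollar cost of one request served by this variation."""
    if usage.requests <= 0:
        raise ValueError("requests must be positive")
    total_dollars = (
        usage.input_tokens * price.input_per_million
        + usage.output_tokens * price.output_per_million
    ) / 1_000_000
    return total_dollars / usage.requests


def compare_costs(usages: Dict[str, VariationUsage], prices: Dict[str, ModelPrice]) -> Dict[str, float]:
    """Cost per request for each variation name present in usages."""
    return {name: cost_per_request(usages[name], prices[name]) for name in usages}
```

Place the cost per request for “Premium chat support” and “Free chat support” next to each variation's satisfaction results. That lets you judge whether the premium model's difference in experience justifies its difference in price.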

To learn more, read Monitor AI Configs.

Conclusion

In this guide, you learned how to manage AI model usage by customer tier in an OpenAI-powered application, and how to review the performance of those models based on customer feedback and token usage.

For additional examples, read the other AI Configs guides in this section. To learn more, read AI Configs and AI SDKs.
