Creating mutually exclusive experiments

Overview

This guide provides an example of how to create mutually exclusive experiments using experiment layers. Mutually exclusive experiments are two or more experiments configured to prevent contexts from being included in more than one experiment at a time.

Generally, it is not a problem to include a context in multiple experiments. Our Experimentation offering minimizes interaction effects using audience allocation, and you can be confident in the winning variation even when running multiple concurrent experiments with overlapping audiences.

However, there are some cases in which you may not want to include each context in more than one experiment at a time. For example, you may be concerned about collisions between experiments that are testing similar parts of your app, like two different changes to the same section of your app’s user interface (UI), or experiments running on both the back end and front end of the same functionality. In these cases, you can create mutually exclusive experiments using experiment layers.

Prerequisites

To complete this tutorial, you need the following:

- An active LaunchDarkly account with Experimentation enabled, and with permissions to create flags, edit experiments, and edit layers

- Familiarity with LaunchDarkly’s Experimentation feature

- A basic understanding of your business’s needs or key performance indicators (KPIs)

Concepts

Before you begin, you should understand the following concepts:

Layers

LaunchDarkly Experimentation achieves mutually exclusive experiments using “layers.” You can add two or more experiments to a layer to ensure that those experiments never share the same traffic.

For example, you can create a “Checkout” layer and add all of your checkout-related experiments to it. Then, even if you run multiple checkout experiments at once, customers are never exposed to more than one of those experiments at a time.

To learn more, read Experiment layers.

Reservation amounts

Before you create an experiment, you should decide how much of the layer’s traffic you want to include in the experiment. This is called the “reservation amount.” The more experiments you expect to include in the layer, the lower each reservation amount should be.

For example:

- If you plan to have only two experiments in the layer, you might want to reserve 50% of traffic for each.

- If you plan to have five experiments in the layer, you might want to reserve 20% for each.

As new contexts encounter experiments within the layer, LaunchDarkly assigns them to experiments according to each experiment’s reservation amount.

In this guide, each experiment has a reservation amount of 25%. Although only three experiments are planned for this layer, leaving the remaining 25% unreserved means you could add a fourth experiment later.
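The reservation arithmetic above is simple enough to sketch. The following Python snippet is an illustration, not part of any LaunchDarkly API: it splits a layer evenly across a planned number of experiments and reports how much of the layer is still unreserved. The `equal_reservation` and `remaining` helpers are hypothetical names.

```python
# Hypothetical helpers for planning reservation amounts in one layer.
# Amounts are whole percentages of the layer's traffic.
LAYER_CAPACITY = 100


def equal_reservation(planned_experiments: int) -> float:
    """Split the whole layer evenly across the experiments you plan to run."""
    return LAYER_CAPACITY / planned_experiments


def remaining(reservations: dict) -> int:
    """Return the unreserved percentage; raise if the layer is over-allocated."""
    used = sum(reservations.values())
    if used > LAYER_CAPACITY:
        raise ValueError(f"reservations total {used}%, which exceeds 100%")
    return LAYER_CAPACITY - used


print(equal_reservation(2))  # 50.0
print(equal_reservation(5))  # 20.0

promotions = {"Promo modal": 25, "Promo banner": 25, "Promo at cart": 25}
print(remaining(promotions))  # 25, room for a fourth experiment at 25%
```

The over-allocation check only illustrates why reservations in one layer have to fit within 100% of its traffic; it is not a description of how LaunchDarkly validates layers.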

Get started

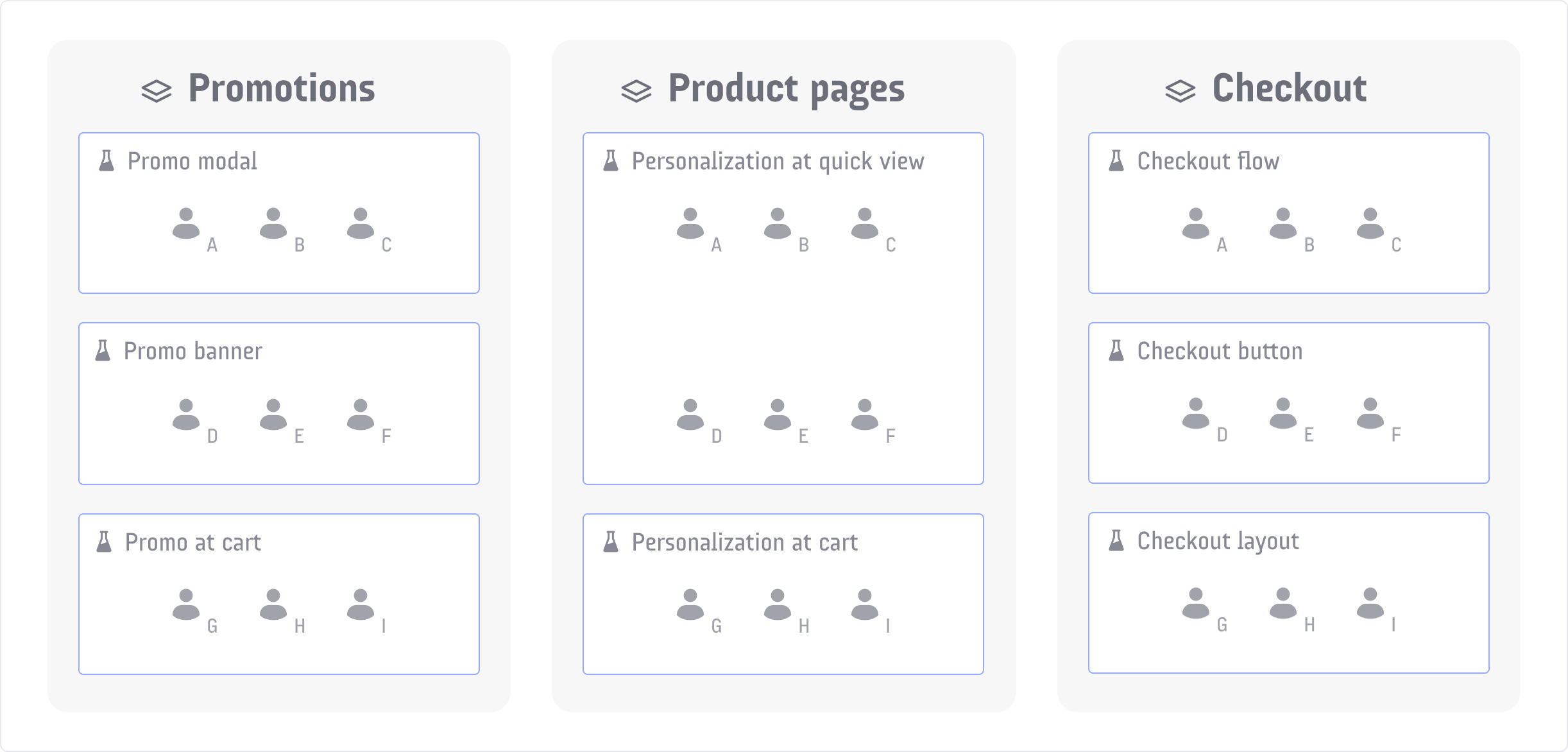

Here is an example of multiple experiments split between three different layers. The “Promotions” layer includes three experiments, the “Product pages” layer includes two experiments, and the “Checkout” layer includes three experiments:

In this example, customer A can’t be included in more than one experiment in the “Promotions” layer, in more than one experiment in the “Product pages” layer, or in more than one experiment in the “Checkout” layer.

Customer A can simultaneously be included in the “Promo modal,” “Personalization at quick view,” and “Checkout flow” experiments, because those experiments are all in different layers.
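The following Python sketch models the behavior described above. It is a hypothetical illustration, not LaunchDarkly's actual bucketing algorithm. Each context key is hashed into a bucket from 0 to 99, salted with the layer name, and each experiment owns a non-overlapping slice of buckets equal to its reservation amount. The `bucket` and `assign` functions, the SHA-256 hash, the experiments labeled "Hypothetical", and every reservation amount other than the 25% used in this guide are assumptions.

```python
import hashlib

# Hypothetical layer setup modeled on the example above. Experiments labeled
# "Hypothetical" and the non-25% reservation amounts are placeholders.
LAYERS = {
    "Promotions": [("Promo modal", 25), ("Promo banner", 25), ("Promo at cart", 25)],
    "Product pages": [("Personalization at quick view", 50),
                      ("Hypothetical product experiment", 50)],
    "Checkout": [("Checkout flow", 33),
                 ("Hypothetical checkout experiment 2", 33),
                 ("Hypothetical checkout experiment 3", 34)],
}


def bucket(layer: str, context_key: str) -> int:
    """Map a context to a stable bucket in [0, 100), salted by the layer name."""
    digest = hashlib.sha256(f"{layer}:{context_key}".encode()).hexdigest()
    return int(digest[:8], 16) % 100


def assign(context_key: str) -> dict:
    """Return each layer's experiment for this context (None if unreserved).

    Within a layer the reservation slices never overlap, so a context lands in
    at most one experiment per layer. Each layer uses its own salt, so the same
    context can still be in one experiment in every layer at once.
    """
    assignments = {}
    for layer, reservations in LAYERS.items():
        b = bucket(layer, context_key)
        start, chosen = 0, None
        for name, percent in reservations:
            if start <= b < start + percent:
                chosen = name
                break
            start += percent
        assignments[layer] = chosen
    return assignments


print(assign("customer-a"))
```

Because 25% of the “Promotions” layer is unreserved in this setup, some contexts receive `None` for that layer and are not included in any of its experiments.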

This guide will walk you through creating a “Promotions” layer and the three mutually exclusive experiments within it.

Create experiment flags

To begin, create the flags you will use in your experiments within the layer.

In this example, you will create three boolean experiment flags, one for each experiment in the layer:

- the “Promo modal” flag,

- the “Promo banner” flag, and

- the “Promo at cart” flag.

To learn how, read Experiment flags.

Create metrics

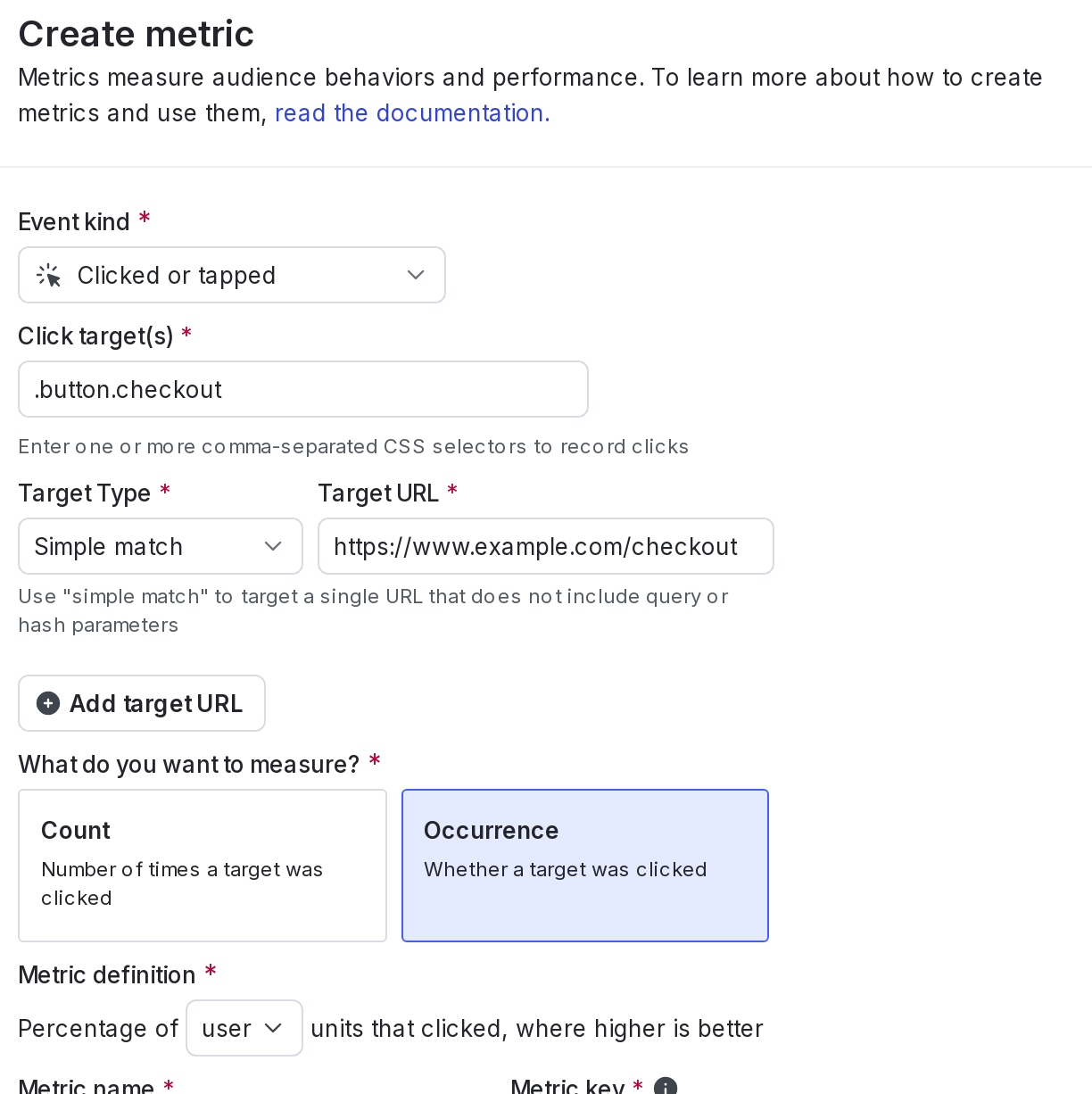

Next, create or select existing metrics to use in your experiments. In this example, you will be using a clicked or tapped conversion metric to measure completed checkouts.

To create the new metric:

- Navigate to the Metrics list.

- Click Create metric. The “Create metric” dialog appears.

- Leave the “Data warehouse” selection on LaunchDarkly hosted.

- Select a metric kind of Clicked or tapped.

- Enter one or more CSS selectors in the Click targets field. In this example, you are using

.button.checkout. - Specify the Target type you want to track behavior on. In this example, you are using “Simple match.”

- Enter the Target URL. In this example, you are using

https://www.example.com/checkout. - Select Occurrence.

- In the “Metric definition” section, select percentage of User units

- Enter “Completed checkouts” as the Name for the metric.

- Enter “Customers who complete a checkout by clicking the ‘purchase’ button” for the Description.

- Click Create metric.

Here is what the metric looks like:

To learn more, read Clicked or tapped conversion metrics.
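To make the metric definition concrete, here is a minimal Python sketch of how a “percentage of User units” conversion rate could be computed for each variation. It assumes that, with Occurrence selected, a user counts as converted if they clicked the checkout button at least once. The event records are made up, and the `conversion_rates` function is hypothetical; LaunchDarkly performs this calculation on its own data, so the code only illustrates the idea.

```python
from collections import defaultdict

# Made-up event records: (context_key, variation, converted).
# "converted" is True when the user clicked ".button.checkout" on the
# checkout page during that visit.
events = [
    ("user-1", "true", True),
    ("user-1", "true", True),   # repeat clicks from one user count once
    ("user-2", "true", False),
    ("user-3", "false", False),
    ("user-4", "false", True),
    ("user-5", "false", False),
]


def conversion_rates(records):
    """Percentage of exposed users in each variation who converted at least once."""
    exposed = defaultdict(set)
    converted = defaultdict(set)
    for context_key, variation, did_convert in records:
        exposed[variation].add(context_key)
        if did_convert:
            converted[variation].add(context_key)
    return {v: 100 * len(converted[v]) / len(exposed[v]) for v in exposed}


print(conversion_rates(events))  # 'true': 50.0, 'false': roughly 33.3
```

Counting distinct users rather than raw clicks keeps one enthusiastic clicker from inflating a variation's rate.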

Create the first experiment and layer

Now, you will create the first experiment and the layer together.

Here’s how:

- Click Create and choose Experiment. The “Create experiment” dialog appears.

- Enter “Promo modal” for the Name.

- Enter a Hypothesis.

- Click Create experiment. The experiment Design tab appears.

- Leave user as the context kind to Randomize by.

- Select “LaunchDarkly” as the Metric source.

- Add the “Completed checkouts” Metric you created in the Create metrics step.

- Choose the “Promo modal” Flag you created in the Create experiment flags step.

- Leave the “Default rule” as the Targeting rule.

- In the “Audience allocation” section, click Add experiment to mutual exclusion layer.

- Click the Create layer tab.

- Add “Promotions” as the layer Name.

- Click Create layer.

- Enter a Reservation amount of 25%.

- Click Save layer.

- Choose “True” for the Variation served to users outside this experiment.

- Select the Sample size for the experiment. In this example, you will include 5% of your audience.

- Select a Statistical approach of Bayesian or frequentist.

- If you selected a statistical approach of Bayesian, select a preset or Custom success threshold.

- If you selected a statistical approach of frequentist, select:

  - a Significance level.

  - a one-sided or two-sided Direction of hypothesis test.

Statistical approach options

You can select a statistical approach of Bayesian or frequentist. Each approach includes one or more analysis options.

The Bayesian options include:

- Threshold:

  - 90% probability to beat control is the standard success threshold, but you can raise the threshold to 95% or 99% if you want to be more confident in your experiment results.

  - You can lower the threshold to less than 90% using the Custom option. We recommend a lower threshold only when you are experimenting on non-critical parts of your app and are less concerned with determining a clear winning variation.

The frequentist options include:

- Enable sequential testing: Sequential testing lets you act on the results of your experiment at any time, instead of waiting for a specific sample size. To learn more, read Fixed-horizon versus sequential.

- Significance level:

  - A significance level of 0.05 is the standard, but you can lower the level to 0.01 or raise it to 0.10, depending on whether you need to be more or less confident in your results. A lower significance level means that you can be more confident in your winning variation.

  - You can raise the significance level to more than 0.10 using the Custom option. We recommend a higher significance level only when you are experimenting on non-critical parts of your app and are less concerned with determining a clear winning variation.

- Direction of hypothesis test:

  - Two-sided: We recommend two-sided when you’re unsure about whether the difference between the control and the treatment variations will be negative or positive, and want to look for indications of statistical significance in both directions.

  - One-sided: We recommend one-sided when you feel confident that the difference between the control and treatment variations will be either negative or positive, and want to look for indications of statistical significance only in one direction.

- (Optional) Select a Multiple comparisons correction option:

  - Select Apply across treatments to correct for additional comparisons from multiple treatments.

  - Select Apply across metrics to correct for additional comparisons across multiple metrics.

  - Select Apply across both metrics and treatments to correct for additional comparisons from multiple metrics and multiple treatments.

To learn more, read Bayesian versus frequentist statistics.

- Scroll to the top of the page and click Save.
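If you chose the Bayesian approach, the success threshold is compared against each treatment's probability to beat control. The following Python sketch estimates that probability for a conversion metric. It assumes uniform Beta(1, 1) priors and Monte Carlo sampling, which is a common textbook approach; LaunchDarkly's own statistical model may differ, and the function name and counts are hypothetical.

```python
import random


def prob_to_beat_control(control_conv, control_n, treat_conv, treat_n,
                         draws=20_000, seed=0):
    """Estimate P(treatment rate > control rate) under Beta(1, 1) priors."""
    rng = random.Random(seed)  # seeded so the estimate is reproducible
    wins = 0
    for _ in range(draws):
        # Sample each variation's conversion rate from its Beta posterior.
        p_control = rng.betavariate(1 + control_conv, 1 + control_n - control_conv)
        p_treat = rng.betavariate(1 + treat_conv, 1 + treat_n - treat_conv)
        wins += p_treat > p_control
    return wins / draws


THRESHOLD = 0.90  # the standard success threshold; raise to 0.95 or 0.99 if needed

prob = prob_to_beat_control(control_conv=120, control_n=1000,
                            treat_conv=160, treat_n=1000)
print(f"P(treatment beats control) = {prob:.3f}")
print("Meets threshold" if prob >= THRESHOLD else "Not yet conclusive")
```

Choosing a stricter preset only changes `THRESHOLD`; the posterior calculation itself stays the same.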

Create the second and third experiments

To create the second and third experiments, repeat the process above, except that each experiment uses a different flag and is added to the existing “Promotions” layer instead of a new one.

To create the second experiment:

- Click Create and choose Experiment.

- Enter “Promo banner” for the Name.

- Enter a Hypothesis.

- Click Create experiment. The experiment Design tab appears.

- Leave user as the context kind to Randomize by.

- Select “LaunchDarkly” as the Metric source.

- Add the “Completed checkouts” Metric.

- Choose the “Promo banner” Flag.

- Leave the “Default rule” as the Targeting rule.

- In the “Audience allocation” section, click Add experiment to mutual exclusion layer.

- Select the “Promotions” layer from the menu.

- Enter a Reservation amount of 25%.

- Click Save layer.

- Select a Sample size of 5%.

- Choose “False” for the Variation served to users outside this experiment.

- Select Split equally for the variations split.

- Select the same Statistical approach you chose for the first experiment.

- Scroll to the top of the page and click Save.

To create the third experiment:

- Click Create and choose Experiment.

- Enter “Promo at cart” for the Name.

- Enter a Hypothesis.

- Click Create experiment. The experiment Design tab appears.

- Leave user as the context kind to Randomize by.

- Select “LaunchDarkly” as the Metric source.

- Add the “Completed checkouts” Metric.

- Choose the “Promo at cart” Flag.

- Leave the “Default rule” as the Targeting rule.

- In the “Audience allocation” section, click Add experiment to mutual exclusion layer.

- Select the “Promotions” layer from the menu.

- Enter a Reservation amount of 25%.

- Click Save layer.

- Select a Sample size of 5%.

- Choose “False” for the Variation served to users outside this experiment.

- Select Split equally for the variations split.

- Select the same Statistical approach you chose for the first and second experiments.

- Scroll to the top of the page and click Save.

Toggle on the flags

When you are ready to run your experiments, toggle On the related flags.

Start experiment iterations

The last step is to start an iteration for each of your mutually exclusive experiments.

To start your experiment iterations:

- Navigate to the Experiments list.

- Click “Promo modal.”

- Click Start.

- Repeat these steps for the remaining two experiments.

You are now running three mutually exclusive experiments, with no overlap between the contexts included in each.

Read results

When you are finished running your experiments, you can look at which variation has the highest probability to be best (Bayesian) or at the highlighted p-value (frequentist) to decide which features to ship to your customers. To learn more, read Analyzing experiments.
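For the frequentist approach, the following Python sketch shows how the significance level, the direction of the hypothesis test, and a multiple comparisons correction fit together for a conversion metric. It uses a pooled two-proportion z-test and a Bonferroni correction, both standard textbook techniques chosen for illustration. This guide does not say which test or correction LaunchDarkly applies, and the function names and counts are hypothetical.

```python
from statistics import NormalDist


def two_proportion_p_values(control_conv, control_n, treat_conv, treat_n):
    """Return (one-sided, two-sided) p-values from a pooled two-proportion z-test.

    The one-sided test uses the alternative hypothesis "treatment beats control".
    """
    p_control = control_conv / control_n
    p_treat = treat_conv / treat_n
    pooled = (control_conv + treat_conv) / (control_n + treat_n)
    se = (pooled * (1 - pooled) * (1 / control_n + 1 / treat_n)) ** 0.5
    z = (p_treat - p_control) / se
    normal = NormalDist()  # standard normal distribution (mean 0, sigma 1)
    one_sided = 1 - normal.cdf(z)
    two_sided = 2 * (1 - normal.cdf(abs(z)))
    return one_sided, two_sided


def bonferroni_alpha(alpha, treatments=1, metrics=1):
    """Bonferroni correction: divide alpha across every treatment-metric pair."""
    return alpha / (treatments * metrics)


one_sided, two_sided = two_proportion_p_values(120, 1000, 160, 1000)
alpha = bonferroni_alpha(0.05)  # one treatment, one metric: stays 0.05
print(f"one-sided p = {one_sided:.4f}, two-sided p = {two_sided:.4f}")
print("Significant" if two_sided < alpha else "Not significant")
```

When the observed difference points in the hypothesized direction, the one-sided p-value is half the two-sided one. That extra sensitivity is why a one-sided test is only appropriate when you are confident about the direction in advance.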

Conclusion

In this guide, you learned how to create layers to prevent contexts from being included in multiple concurrent experiments.

Mutually exclusive experiments are not limited to two or three at a time. When it’s time to create your own, you can create as many mutually exclusive experiments as you need to prevent collisions between experiments running on similar parts of your app or infrastructure.

To get started building your own experiment, follow our Quickstart for Experimentation.

You can also send data to and run experiments with Snowflake

Snowflake users who want to explore this data more in-depth can send all of the experiment data to a Snowflake database using the Snowflake Data Export integration. By exporting your LaunchDarkly experiment data to the same Snowflake warehouse as your other data, you can build custom reports in Snowflake to answer product behavior questions. To learn more, read Snowflake Data Export.

You can also run experiments using warehouse native metrics. To learn more, read Snowflake native Experimentation.
