Software is never developed in isolation. In addition to all the teammates working together to ship a great product—developers, designers, support, and so forth—the most important collaborator is the one who isn't even in your organization chart: the end-user.

We build things that we hope will make our users' lives a little bit easier, but most of the time we can only make educated guesses about what they truly need. That's why user feedback is a critical part of developing software that's functional and useful: it lets you iterate on new features and updates so that they actually serve and please your users.

To gather that feedback, many organizations run some form of User Acceptance Testing (UAT). UAT is the final stage of testing, usually just before (or sometimes just after) release, in which your users validate that the product works and meets their requirements. Unlike other types of testing (functional, integration, and so on), where developers or testers execute the tests, UAT is performed by the users themselves.

UAT can take on many forms, such as:

- A new platform released as a public beta

- A new feature released to only a subset of users

- A round of testing in a production mirror environment

- A user experience researcher brought in to observe how new changes affect the overall product

In all cases, the aim of UAT is to catch bugs, errors, and confusing elements of your software before it's released as a working product. In other words, UAT helps you release a polished, working product that doesn't need constant revamping.

UAT is critical to the successful release of an application or a feature. In one survey, 88% of respondents said UAT was key to achieving quality objectives, yet fewer than half of those respondents had any tools to implement it.

In this post, we'll take a close look at the what, when, and who of UAT, and then how to execute it successfully in the real world.

The What, When, and Who of UAT

What Are the Benefits of UAT?

The use cases for and benefits of UAT are broad. The process can be considered a more reliable form of black-box testing (that is, testing an app's functionality without knowing anything about its internals), because it puts the app in front of the people who will actually use it.

- UAT validates the decisions made by your engineering and design teams.

While software testing can ensure that a feature is functional, it doesn't necessarily ensure that the feature satisfies a user's needs. Test cases can show how code integrates with the rest of an existing system, but UAT draws on feedback from real-world scenarios, helping teams verify whether their changes are actually helping customers.

- UAT collects information from outside your organization to understand what works and what doesn't.

It's not uncommon for organizations to have a somewhat subjective view of the tools they develop. Developers suffer from the "curse of knowledge": once you know something, you can't un-know it. Because they built the app and are accustomed to its features, developers have far more experience and knowledge than a general user seeing it for the first time. UAT helps you find anything your team missed or overlooked.

- UAT can also guide you toward solving issues of scale and scope.

If you discover through UAT that your feature is wildly popular, you might need to allocate more resources, like servers or disk space, to ensure that it operates properly when released to a wider audience.

Ultimately, businesses that don't engage in UAT take on enormous operational risk. Running a UAT session takes about 10% of the overall project timeline, but by identifying and correcting software errors ahead of a release, it can save about 30% more time in the future.

When Do I Use UAT?

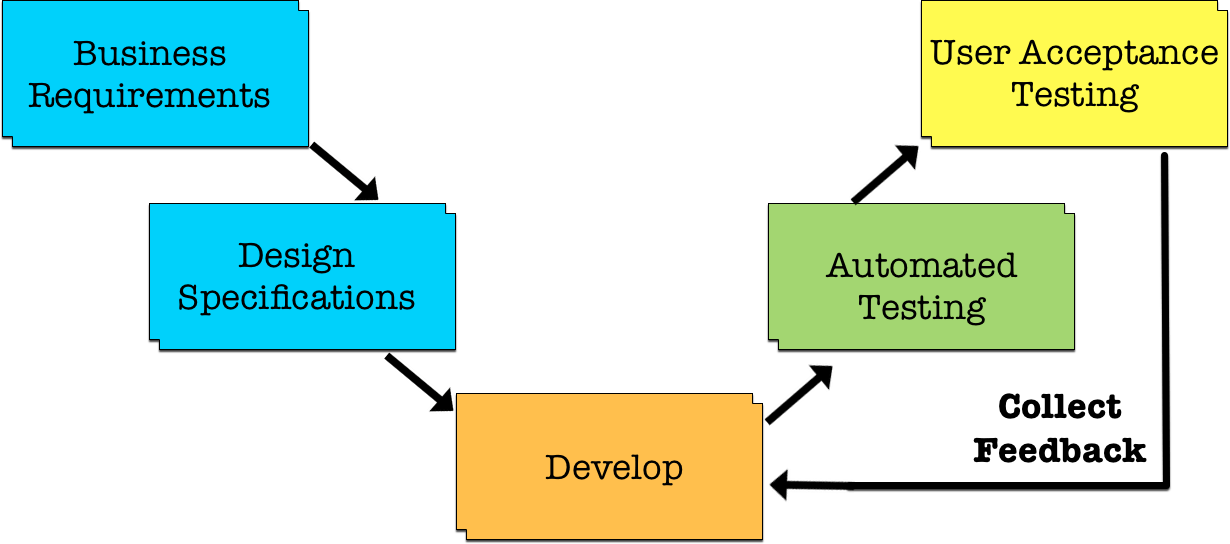

It's likely that you already have some stable software development lifecycle (SDLC) processes in place that include testing. UAT usually occurs as one of the last stages in your SDLC, just before you're ready for a full deployment to production, or while you are testing a portion of your release in production using feature management/feature flags.

After you've established business requirements, designed the interface, written the code, and implemented application testing, you typically start UAT on a subset of your users. While functional testing identifies whether your code works, and integration testing determines how a feature works alongside the rest of your app, UAT engages with end-users to validate your work. This should be the last phase of the software testing process before a team can sign off on a component of work.

In the classic waterfall development model, different stages of work are expected to occur sequentially. In this scenario, it’s easy to see where UAT fits—after your automated testing, but just before your full release.

But software development has largely moved away from this older practice. UAT also fits in well in a modern agile context. Teams can release changes quickly, run UAT scenarios, and iterate on their code with new information about users' experiences. Even though the users participating in UAT might not directly talk to engineers, the habits and behaviors they exhibit are data points that teams can collect and address.

Who Implements UAT?

Deciding who in your organization is responsible for managing UAT programs depends largely on how you will run those programs. Although the notion of defining test scenarios is entwined with UAT, neither your QA teams nor other testing teams should run a UAT session. While UAT is technically a type of testing, it involves much more nuance in identifying how users think and behave.

Instead, business analysts or user experience researchers are typically in charge of collecting and assessing feedback. This might be in the form of in-person interviews, but it might also involve creating dashboards to show information like the time spent on a page or which elements are being interacted with.
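As a rough illustration of the dashboard side, the sketch below aggregates raw UAT events into two of the numbers mentioned above: average time spent on each page and which elements users interacted with. The event shape `(user_id, page, seconds_on_page, element_clicked)` and the sample data are hypothetical; a real setup would pull these from whatever analytics pipeline you already use.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical UAT events: (user_id, page, seconds_on_page, element_clicked or None)
events = [
    ("u1", "checkout", 42.0, "pay_button"),
    ("u2", "checkout", 95.5, None),
    ("u1", "settings", 12.0, "save"),
    ("u3", "checkout", 30.5, "pay_button"),
]

def summarize(events):
    """Return {page: (average seconds on page, {element: click count})}."""
    times = defaultdict(list)
    clicks = defaultdict(lambda: defaultdict(int))
    for _user, page, seconds, element in events:
        times[page].append(seconds)
        if element is not None:
            clicks[page][element] += 1
    return {page: (mean(t), dict(clicks[page])) for page, t in times.items()}

for page, (avg_seconds, element_clicks) in summarize(events).items():
    print(f"{page}: avg {avg_seconds:.1f}s, clicks {element_clicks}")
```

A long average time paired with few clicks on a page (like `checkout` for user `u2` here) is the kind of signal an analyst would follow up on in an interview.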

While establishing a permanent UAT team would be ideal, smaller organizations seldom have the resources to do so. If you're just starting to explore UAT test cases, you can select a subset of colleagues who are accustomed to receiving and interpreting user feedback before creating any permanent roles. According to a recent Forrester survey, nearly two-thirds of the companies surveyed include UAT as a component of their agile workflow.

Ultimately, someone like a product manager needs to turn user feedback into actionable items for engineers. If users find a feature too confusing, it might require a visual redesign or some updated copy from the documentation team. If new features don't actually solve users' problems, the product manager should bring those concerns to the engineers and work with them to create solutions.

Putting UAT into Real-World Practice

Because the UAT process is somewhat subjective, it can be difficult to gauge whether you're running it correctly. For example, if you gather information from only one or two users, or only from the evangelists and superfans of your app, you may miss broader perspectives. Let's look next at some best practices for running a successful real-world UAT.

The UAT Test Plan

Before engaging in any UAT process, first define and communicate to your team exactly what you hope to accomplish. Make sure your expected results are quantifiable. Do you want to reduce the number of clicks a user needs to perform an action, or make users self-sufficient in completing a task without looking up documentation? Because you can't directly measure whether a change makes a process "easier" or "harder" than before, you'll need to state your goal in clear, direct language that can be assessed when UAT is complete.

Just as a functional test plan states how you expect your code to behave in a variety of scenarios, you should define what you want to get out of UAT using a UAT test plan.

Your plan will vary but might start with broad categories, such as:

- Identifying the needs of business users and which users to target

- Identifying where critical communication updates will be made (JIRA, project management software, a final email, and so on)

- Identifying how quickly a team should respond to issues

These acceptance criteria are no different from any other guidelines development teams establish for determining the quality of their code. They're not unlike user stories; the difference is that UAT phrases its expectations as absolutes ("A user must be able to...") rather than hypotheticals ("As a user, I would like to...").
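To make "quantifiable" concrete, here is a minimal sketch that encodes one absolute criterion, "A user must be able to complete the task in at most five clicks without opening the documentation," and scores recorded sessions against it. The `Session` fields, the `MAX_CLICKS` threshold, and the sample sessions are all hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass

# Hypothetical record of one UAT session for a single task.
@dataclass
class Session:
    user_id: str
    completed_task: bool
    clicks: int
    opened_docs: bool

# Assumed threshold, chosen for illustration only.
MAX_CLICKS = 5

def meets_criteria(session: Session) -> bool:
    """'A user must be able to complete the task in at most MAX_CLICKS clicks
    without opening the documentation.'"""
    return (
        session.completed_task
        and session.clicks <= MAX_CLICKS
        and not session.opened_docs
    )

def pass_rate(sessions: list[Session]) -> float:
    """Fraction of sessions that satisfied the acceptance criterion."""
    if not sessions:
        return 0.0
    return sum(meets_criteria(s) for s in sessions) / len(sessions)

sessions = [
    Session("u1", True, 4, False),  # passes
    Session("u2", True, 7, False),  # too many clicks
    Session("u3", True, 3, True),   # needed the docs
    Session("u4", True, 5, False),  # passes (exactly at the limit)
]
print(f"pass rate: {pass_rate(sessions):.0%}")  # pass rate: 50%
```

Because the criterion is a yes/no check per session, the team can agree up front on what pass rate counts as "done" and read the result off directly when UAT ends.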

Location

Where should you perform your UAT? Like any testing, UAT can be performed in-person or remotely, either synchronously or asynchronously. You'll need to determine whether you want to engage in conversation with the user when they get stuck, or whether you would prefer to observe how a user resolves any situation in which they might get stuck.

Collecting User Feedback

Regardless of location, too much involvement from your teams may inadvertently influence how an end-user reacts to your app or feature. One measure of a successful UAT process is your capacity to listen to a user's concerns and needs, not educate or inform them on how you think they should be using the app. While bugs are inevitable, the UAT process shouldn't be focused on finding defects. Rather, it should be about validating user experience.

A few ways to help this process include:

- If an error occurs, make a note of it, determine its severity in impacting a user's ability to continue working with the app, but continue to test.

- If you're working directly with the user, ask them what they thought should have happened.

- Keep the scope of your testing session well-bounded, to focus on validating a particular user experience scenario while minimizing the surface for encountering bugs.

- After you've gathered the feedback, work with your teams to anticipate user expectations in future sessions, so that users don't end up in the same error state again.

- Bring any surfaced defects to your product team as well as your engineering team to determine whether the bug points to a flawed model of how the user experience ought to work.
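The note-it-and-keep-going practice above can be supported with something as simple as a structured session log. This is a hypothetical sketch: the three `Severity` levels and the `Observation` fields (including `user_expectation`, for what the user thought should have happened) are assumptions, not a standard format.

```python
from dataclasses import dataclass, field
from enum import IntEnum

class Severity(IntEnum):
    COSMETIC = 1  # user noticed the problem but was not slowed down
    DEGRADED = 2  # user found a workaround and continued
    BLOCKING = 3  # user could not continue the scenario

@dataclass
class Observation:
    scenario: str
    description: str
    severity: Severity
    user_expectation: str = ""  # what the user thought should have happened

@dataclass
class SessionLog:
    observations: list[Observation] = field(default_factory=list)

    def record(self, obs: Observation) -> None:
        # Note the issue and move on; the session itself keeps going.
        self.observations.append(obs)

    def for_triage(self) -> list[Observation]:
        """Most severe issues first, for the product and engineering review."""
        return sorted(self.observations, key=lambda o: o.severity, reverse=True)

log = SessionLog()
log.record(Observation("checkout", "Typo on confirm button", Severity.COSMETIC))
log.record(Observation("checkout", "Pay button did nothing", Severity.BLOCKING,
                       user_expectation="A confirmation page"))
for obs in log.for_triage():
    print(obs.severity.name, "-", obs.description)
```

Keeping the user's stated expectation next to each issue gives the product team the context it needs to tell a plain bug apart from a flawed model of the experience.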

Validating Ideas in Production

Here at LaunchDarkly, we understand the value and significance of in-person UAT. However, there comes a time when setting up interviews to observe user behavior in real-time doesn't scale. Consider the following scenarios:

- You need to experiment with whether a feature design should go one way or another. Ideally, you would test both user experience options with two large subsets from your current user base.

- You need to increase your efficiency in getting features out to your users so that they can test them. Your users are happy to be the guinea pigs and provide you with valuable feedback, so you just want a way to do this quickly and at scale.

- The UAT session for your application just doesn't fit the "one-on-one interview" model. For example, what if you're testing features to be used by a teacher in front of 25 kindergartners?

- The feature you want to test is related to mitigating load issues, such that testing the feature one user at a time doesn't yield useful feedback. A proper UAT approach means letting hundreds or thousands of simultaneous real-time users determine the usefulness of your features.

In these kinds of situations, the ideal solution involves asynchronous UAT methodologies, administered and controlled by feature flags.

Feature flags are software toggles that control the behavior of your application in a production environment. Imagine a database table that has two columns: a user's ID, and a boolean value that determines the behavior of your app. That "behavior" could be a new UI layout, access to some new API, or an entirely new feature. As a user interacts with your app, your internal business logic determines whether or not the user has access to these new changes. Feature flags are commonly used for A/B testing, where one group of users gets access to the new features and the other group doesn't. A well-defined UAT process would identify which of those two groups comes closer to accomplishing your initial design goals.
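The two-column table described above can be sketched literally with Python's built-in `sqlite3` module. This is a toy illustration of the concept, not how LaunchDarkly or any flag service stores flags; the table name `new_checkout_flag` and the users are hypothetical.

```python
import sqlite3

# In-memory stand-in for the two-column flag table: user ID plus a boolean.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE new_checkout_flag (user_id TEXT PRIMARY KEY, enabled INTEGER NOT NULL)"
)
conn.executemany(
    "INSERT INTO new_checkout_flag VALUES (?, ?)",
    [("alice", 1), ("bob", 0)],
)

def flag_enabled(conn: sqlite3.Connection, user_id: str) -> bool:
    row = conn.execute(
        "SELECT enabled FROM new_checkout_flag WHERE user_id = ?", (user_id,)
    ).fetchone()
    # Users missing from the table fall back to the stable behavior.
    return bool(row[0]) if row else False

def render_checkout(conn: sqlite3.Connection, user_id: str) -> str:
    # Business logic branches on the flag at request time.
    return "new checkout layout" if flag_enabled(conn, user_id) else "current checkout layout"

for user in ("alice", "bob", "carol"):
    print(user, "->", render_checkout(conn, user))
```

Defaulting unknown users to the current layout is a deliberate choice: if the flag data is missing or incomplete, users see the stable experience rather than an untested one.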

A broad set of your actual software users should either be granted access to a new feature or given notification that a beta feature is available. This sets up a user's expectation and might make them even more willing to provide feedback. Knowing that a feature is still undergoing beta testing signals that it's open to change in the future.

Although we're accustomed to separate test environments, and testing in production might seem scary, feature flags make it much easier to control than it sounds. The idea isn't simply to grant half your user base access to new features. Instead, feature flags enable two different strategies for releasing new updates: canary tests and beta tests.

Canary testing

Canary testing is the process of introducing new features to a small, controlled subset of your user base, for example, a dozen of your most active users. These are the users who will use the new feature, give you feedback, and possibly be more forgiving if something goes wrong. The idea is to make calculated changes for just a portion of your users. If the software fails, you can disable the feature flag to revert those users to the stable release of your software. Generally, the primary thing you're trying to determine with canary testing is whether the new feature breaks production.
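A canary flag boils down to an explicit allowlist plus a kill switch. The sketch below shows that shape; the `CanaryFlag` class, the user IDs, and the page names are hypothetical, and a real flag service would persist the kill switch centrally so every server sees the change at once.

```python
# Hypothetical hand-picked canary group (e.g., a dozen of your most active users).
CANARY_USERS = {"user-17", "user-42", "user-88"}

class CanaryFlag:
    """Minimal on/off flag targeted at an explicit allowlist of users."""

    def __init__(self, users):
        self.users = set(users)
        self.killed = False  # kill switch: when True, everyone gets the stable release

    def is_on(self, user_id: str) -> bool:
        return not self.killed and user_id in self.users

    def kill(self) -> None:
        """Disable the flag for everyone, reverting canary users to the stable release."""
        self.killed = True

def checkout_page(flag: CanaryFlag, user_id: str) -> str:
    return "new checkout" if flag.is_on(user_id) else "stable checkout"

flag = CanaryFlag(CANARY_USERS)
print(checkout_page(flag, "user-17"))  # new checkout
print(checkout_page(flag, "user-99"))  # stable checkout
flag.kill()  # something broke in production
print(checkout_page(flag, "user-17"))  # stable checkout
```

Note that rolling back is a data change (flipping `killed`), not a redeploy, which is what makes canary releases quick to reverse.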

Beta testing

You can also run beta tests with feature flags. This can be done by hand-selecting users, by allowing users to enroll themselves in the beta, or through a percentage rollout. For example, you might make a feature available to a random 5% of your user base, observe their interactions, and then increase availability to 10%, 20%, 35%, and so on. This allows you to make any necessary adjustments, such as fixing bugs or making tweaks based on real user responses, without limiting testers to power users or disrupting everyone's workflow. During a beta test, you're gathering user feedback in addition to detecting bugs.
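One common way to implement a percentage rollout is to hash each user into a stable bucket, so the same user always gets the same answer and raising the percentage only adds users, never removes them. The sketch below uses SHA-256 from Python's `hashlib`; it illustrates the general technique and is not necessarily how LaunchDarkly computes its rollouts. The flag key `new-search` and the user IDs are hypothetical.

```python
import hashlib

def bucket(flag_key: str, user_id: str) -> float:
    """Map a (flag, user) pair to a stable number in [0, 1)."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).hexdigest()
    # First 32 bits of the hash, scaled into [0, 1).
    return int(digest[:8], 16) / 2**32

def in_rollout(flag_key: str, user_id: str, percent: float) -> bool:
    """True if this user falls inside the first `percent` percent of buckets."""
    return bucket(flag_key, user_id) < percent / 100

users = [f"user-{i}" for i in range(10_000)]
for percent in (5, 10, 20, 35):
    enrolled = sum(in_rollout("new-search", u, percent) for u in users)
    print(f"{percent}% rollout -> {enrolled} users")
```

Including the flag key in the hash means each feature gets an independent random sample, so the same 5% of users aren't the guinea pigs for every beta.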

UAT with feature flags

UAT is an important part of the software development process, and it can take many different forms. A well-executed UAT plan does much more than just give your development teams confidence that they're headed in the right direction. It also delights your users, as they finally get to use an app that does exactly what they need it to do.

If you'd like to learn more about LaunchDarkly's philosophy on "testing in production," check out our article on the advantages of feature flagging. Many software companies, such as GitHub, Atlassian, and Microsoft, have also written about their positive experiences with feature flags.
