Product Updates

[ What's launched at LaunchDarkly ]

October 30, 2025

AI Engineering

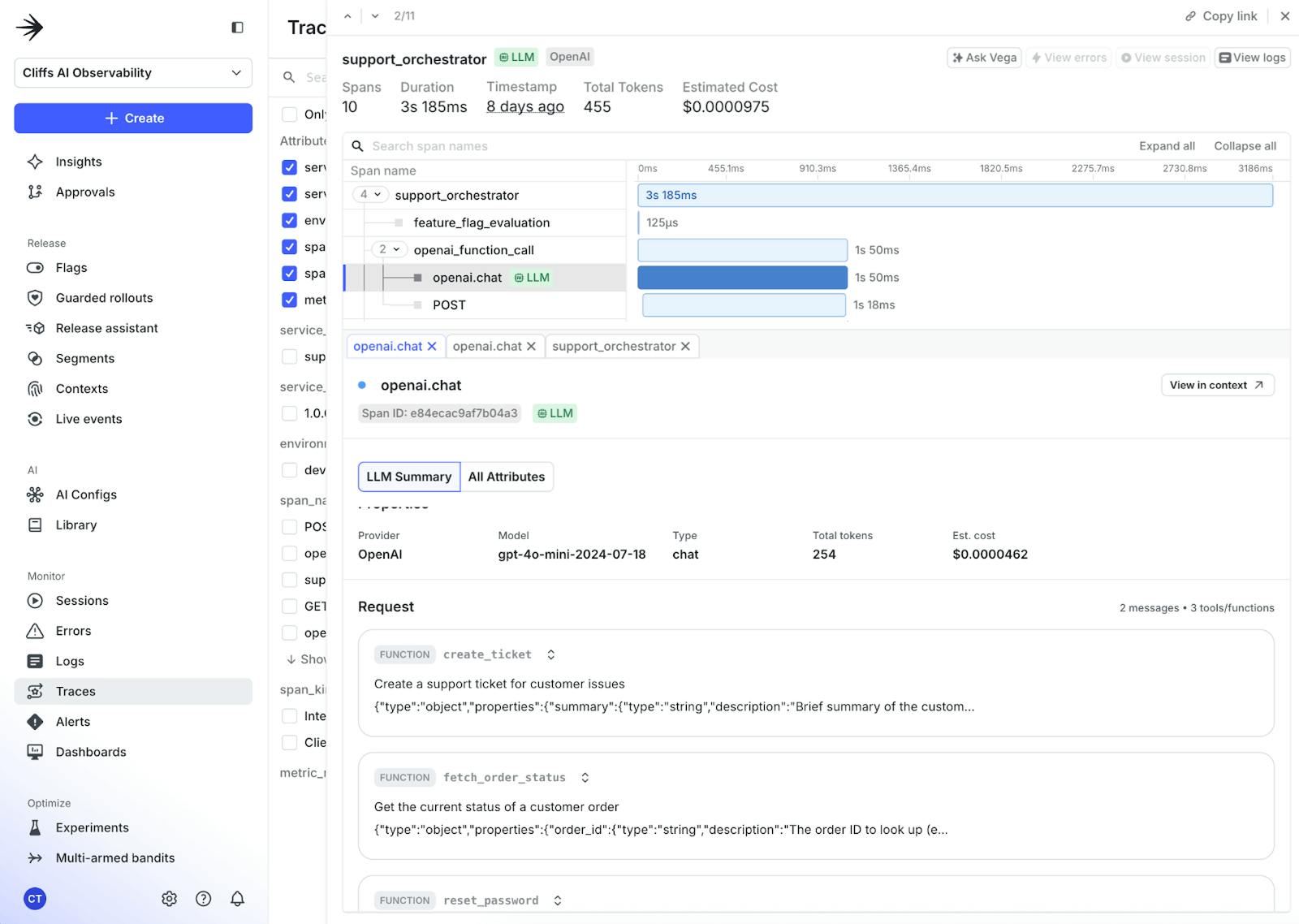

LLM Observability

LLM Observability gives teams visibility into how GenAI applications behave in production. It tracks not only performance metrics such as latency and error rates, but also the content of each interaction, including prompts and responses, along with token usage. With LaunchDarkly’s LLM Observability, you can debug, monitor, and improve both the performance and quality of your AI-driven features.