Building AI-powered features requires continuous iteration. How do teams know whether a new AI model or prompt variation will change cost, latency, or application behavior in ways that lead to better business outcomes? Many teams lack the time or the tools to evaluate the quality of AI products, which can make AI development feel like guesswork. That guesswork can lead to suboptimal outcomes, wasted resources, and unintended regressions.

That’s why we’re expanding LaunchDarkly AI Configs with AI Experiments and AI Versioning—two new capabilities that help teams more easily test, optimize, and manage AI-powered features in production.

With AI Experiments, teams can run experiments to compare different prompts and model configurations—helping to ensure that changes improve quality without inviting unnecessary risk. AI Versioning makes it easy to track, compare, and revert AI configurations, reducing the uncertainty around updating models and prompt snippets. Together, these tools bring feature management and experimentation best practices to AI development, facilitating safer, smarter, and more efficient AI releases.

AI Experiments: validate AI models and prompt variations

When you’re building with AI, shipping the latest model is a good first step. But what’s more important is knowing whether that model actually improves your app’s performance. AI Experiments help teams validate AI changes before features are rolled out broadly; that validation is a powerful tool for reducing the risks of degraded output, increased costs, or poor user experiences.

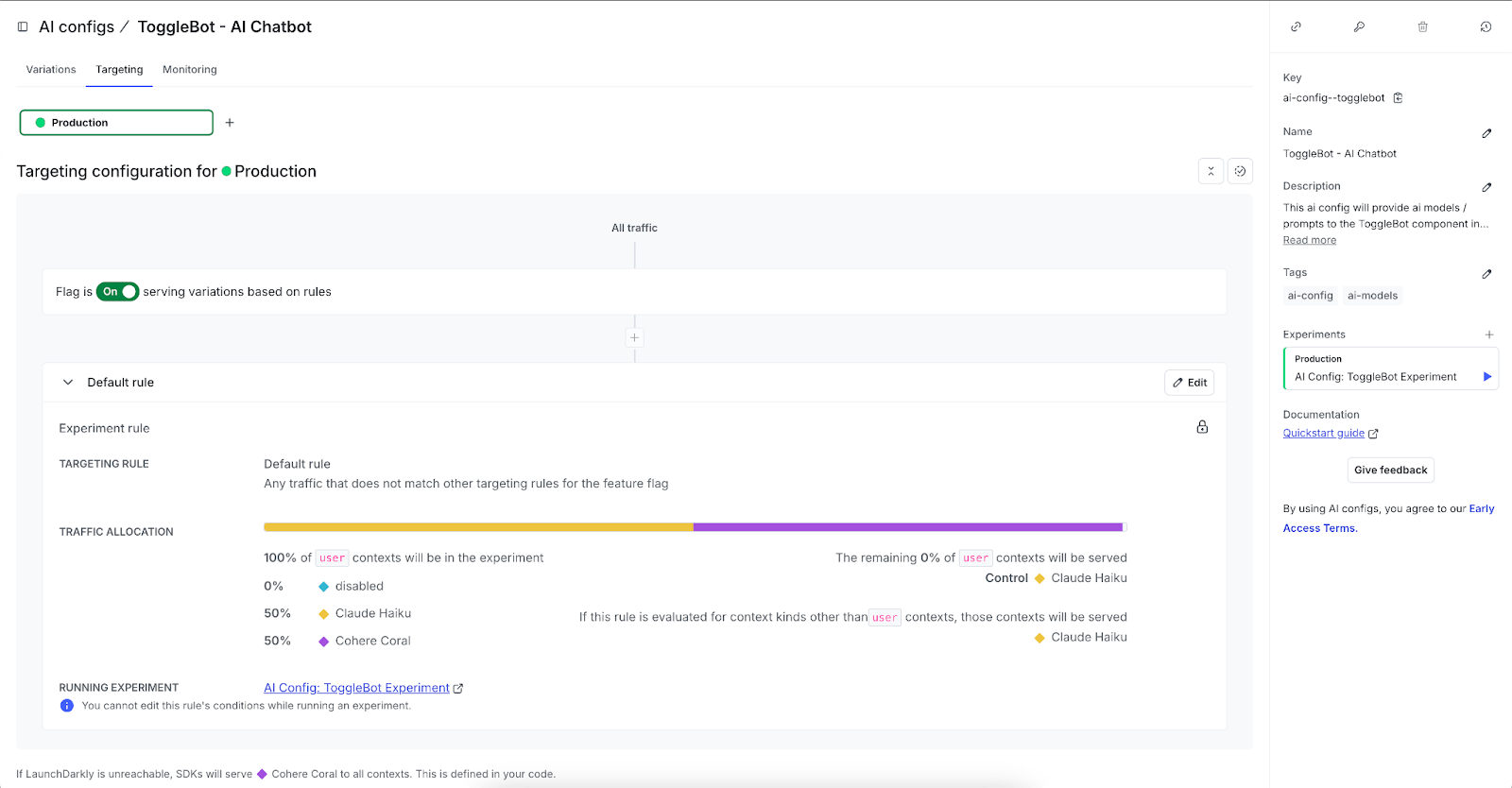

With a straightforward interface, teams can set up, run, and analyze AI experiments without necessarily having deep expertise in data science. Product managers can test variations to determine which models deliver better engagement and output quality. Data scientists can apply statistical rigor to evaluate improvements. Developers can integrate experimentation directly into their workflow, which helps them ensure that they’re using the right models, model settings, and prompts for the best customer experience.

Optimizing AI performance is about more than just accuracy. AI Experiments help teams strike the right balance between cost and quality, making it easier to test different model sizes, data sources, and configurations for maximum efficiency. By identifying which factors affect AI effectiveness the most, organizations can iterate with confidence, supporting better user experiences and stronger business outcomes.
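To make the idea of statistical rigor concrete, here is a minimal sketch of one common way to compare two variations: a two-proportion z-test on the share of responses that received positive feedback. This is a generic illustration, not a description of how AI Experiments computes its results. The function name and the sample counts are hypothetical.

```python
import math


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Return (z, two-sided p-value) for the difference in success rates.

    Hypothetical helper for illustration only; "success" could mean a
    response that received positive user feedback.
    """
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    # Pooled rate under the null hypothesis that both variations perform equally.
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        # All outcomes identical in both groups: no evidence of a difference.
        return 0.0, 1.0
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up counts: variation A got 120 positive ratings out of 1,000
# responses, variation B got 150 out of 1,000.
z, p = two_proportion_z_test(120, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests the difference between the variations is unlikely to be noise. In practice you would weigh that result alongside cost and latency before picking a winner.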

From slow AI testing to real-time evaluation

Before AI Configs, Hireology struggled to test AI model performance efficiently. With real-time model evaluation and ranking, they’ve streamlined the process—making informed decisions faster than ever.

“In less than 13 seconds, I can test 3 verticals, 10 tests each with LaunchDarkly. In the time it takes to generate one job description, I’ve tested all iterations programmatically.” — Sam Elliott, Staff Quality Assurance Engineer, Hireology

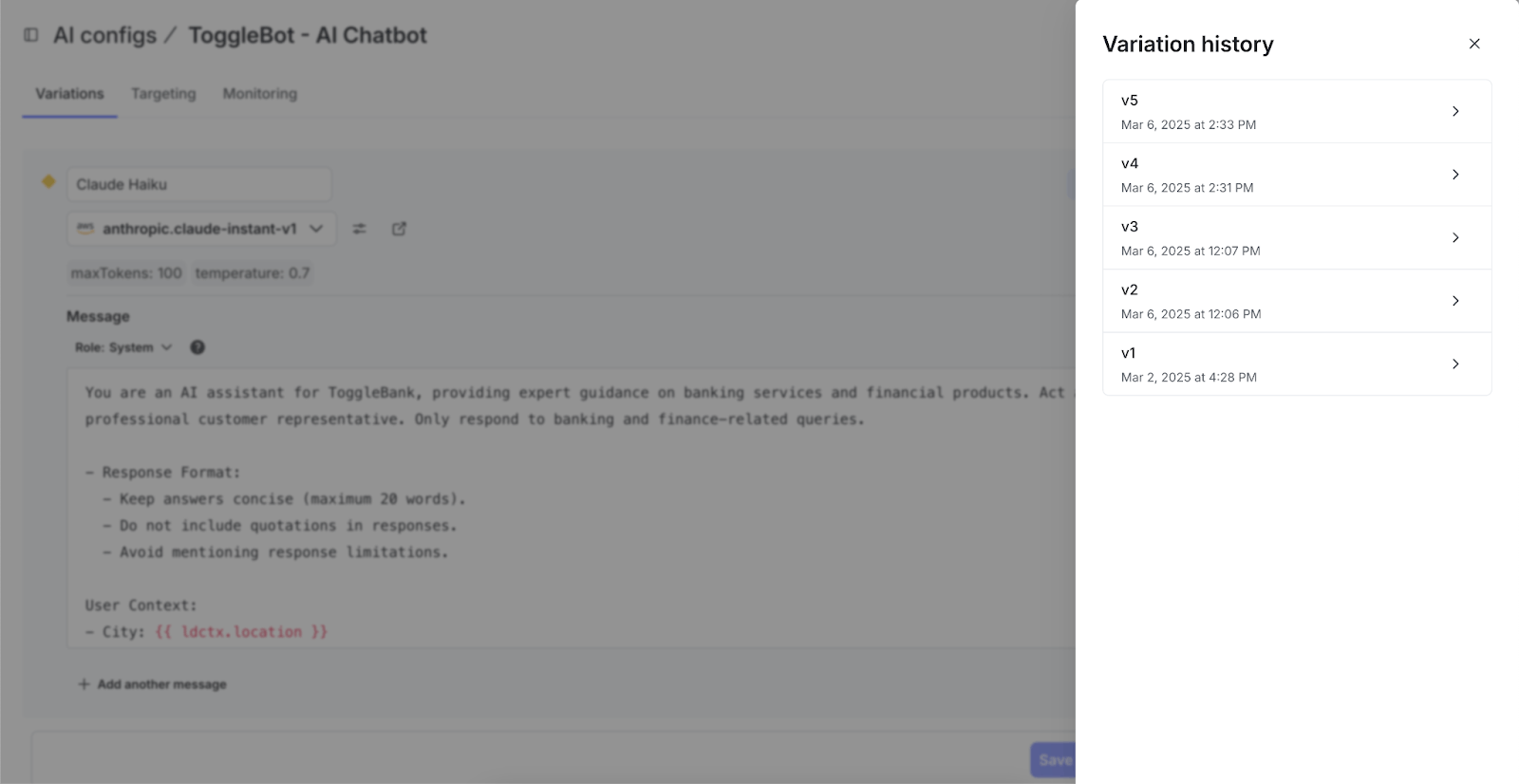

AI Versioning: track, compare, and restore AI configurations

AI models and prompts evolve quickly, and not every update performs better than the last. AI Versioning helps ensure that teams can track changes, compare versions, and revert configurations when necessary—without unnecessary guesswork.

AI teams can restore previous AI configurations in seconds, minimizing risks associated with updates that don’t yield the desired results. Instead of relying on manual tracking or undocumented changes, AI Versioning allows developers and product managers to manage AI updates in production. If an AI-generated response starts drifting in quality, teams can quickly restore a known-good version, thereby avoiding disruptions to users.
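As a rough mental model of track, compare, and restore, the sketch below keeps an append-only history of configurations and restores an earlier one by re-publishing it as a new version. It is an illustration under stated assumptions, not LaunchDarkly’s implementation or API. The class names, fields, and model names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ConfigVersion:
    """One immutable snapshot of an AI configuration (hypothetical shape)."""
    number: int
    model: str
    prompt: str


@dataclass
class VersionedConfig:
    """Append-only version history; nothing is ever overwritten."""
    history: list = field(default_factory=list)

    def save(self, model, prompt):
        version = ConfigVersion(len(self.history) + 1, model, prompt)
        self.history.append(version)
        return version

    @property
    def current(self):
        return self.history[-1]

    def restore(self, number):
        """Re-publish an earlier version as the newest one.

        The old entries stay in place, so the history doubles as a record
        of what changed and when it was rolled back.
        """
        for version in self.history:
            if version.number == number:
                return self.save(version.model, version.prompt)
        raise KeyError(f"no version {number}")


config = VersionedConfig()
config.save("model-small", "Summarize the ticket in two sentences.")
config.save("model-large", "Summarize the ticket in detail.")
config.restore(1)  # quality drifted, so go back to the known-good version
print(config.current)
```

Restoring by appending, rather than deleting newer versions, is a deliberate choice in this sketch: the rollback itself becomes part of the history.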

For teams managing AI models in regulated industries or high-stakes environments, AI Versioning provides structured audit trails and change management tools. It gives compliance-focused teams visibility into updates, which reduces uncertainty around model changes. Coupled with LaunchDarkly’s access management and governance features (managed access with SSO, MFA, and custom roles; integrated change controls and approvals; and easily viewed audit logs across all resources), that visibility makes LaunchDarkly a compelling solution for AI teams at enterprise companies.

Integrating AI Versioning into the development workflow means AI teams will always have a fallback option.

Accelerate AI app development

With AI Experiments and AI Versioning, LaunchDarkly AI Configs makes it easier to test, optimize, and manage AI-powered features in production.

- Introduce new and updated models, configurations, and prompts at runtime so you can iterate quickly toward the right combination.

- Test and experiment with models and prompts to optimize performance, cost, and business impact.

- Target releases by device, entitlement, or user behavior (or anything else you know about your audience) to support safe, progressive rollouts and customized AI experiences.

- Help reduce risk with version tracking, rollback capabilities, and controlled rollouts.
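For readers curious about how a progressive rollout can work in principle, here is a minimal sketch of deterministic percentage bucketing: each user key is hashed to a stable number, and the user receives the new variation only if that number falls under the current rollout percentage. This is a generic technique shown for illustration, not LaunchDarkly’s actual bucketing algorithm. The function names and the salt value are hypothetical.

```python
import hashlib


def bucket(user_key: str, salt: str) -> float:
    """Map a user key to a stable value in [0, 1)."""
    digest = hashlib.sha256(f"{salt}:{user_key}".encode("utf-8")).hexdigest()
    # Use the first 32 bits of the hash as a fraction of the full range.
    return int(digest[:8], 16) / 0x100000000


def in_rollout(user_key: str, salt: str, percentage: float) -> bool:
    """Return True if this user is inside the first `percentage` of buckets."""
    return bucket(user_key, salt) * 100 < percentage


# Hypothetical salt, one per rollout, so different rollouts bucket independently.
salt = "new-prompt-rollout"
for pct in (10, 50, 100):
    served = sum(in_rollout(f"user-{i}", salt, pct) for i in range(10_000))
    print(f"{pct}% rollout serves {served} of 10000 users")
```

Because the bucket depends only on the user key and the salt, a user who sees the new variation at 10% keeps seeing it as the rollout widens to 50% and 100%.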

LaunchDarkly AI Configs provides the guardrails AI teams need to iterate with confidence instead of relying on trial and error.