What is release testing?

Release testing refers to coding practices and test strategies that give teams confidence that a software release candidate is ready for users. Its aim is to find and eliminate errors and bugs in a release before that release reaches users. Let’s dive in and explore several methods used to perform release testing.

Release testing starts with having a software release candidate. This represents a snapshot of the code baseline containing all new software features and bug fixes selected for this release. This software candidate has been packaged and labeled as “production-ready.” It’s this software release candidate that we want to test to ensure that it’s ready for our users.

Release testing comprises all the development and testing activities that ensure a software release candidate is ready for users.

In other words, the objective of release testing is to build confidence in a release candidate. Release testing is a testing approach or strategy rather than a single, all-encompassing testing method.

Like any other testing approach, release testing aims to break the system running the software release candidate, but in a controlled environment. Development teams focus their quality assurance efforts on this one release candidate, trying to break it so that problems can be fixed before they reach actual users.

Release testing environment

Before we can start release testing, we need to prepare the environment where our release candidate will be running. Once we can run the release candidate software, we can start testing it according to various release test methods.

Software engineers and quality assurance professionals use different names for this release testing environment, such as “pre-production environment,” “preview environment,” and “release testing environment.” All these terms refer to the same goal: deploying and running our software release candidate in an environment that matches the production environment used by our users.

Our release testing environment should match our production environment.

However, in practice, maintaining a testing environment identical to our production environment is rarely feasible. As a consequence, we almost always run our release candidate in an approximation of our production environment.

Now that we understand release testing and the environment we’re using, let’s talk about actually testing our software release candidate.

Automated testing

Testing any software release must start while the software is being developed. So, even before we have a software release candidate, new features and bug fixes should already be tested to ensure they work properly. Over the years, software engineers have been incorporating tests into their code so that the code they write gets tested as it is being written.

Automated tests represent code specifically written for the purpose of testing a new feature or bug fix.

Why do we call it automated? Because this testing code runs every time the code baseline is built, which happens whenever new code is committed to the code baseline. Test automation has become part of what we call continuous integration. Let’s take a look at the most common automated tests.

Unit testing

While writing new features and fixing bugs, most software developers also write unit test code that exercises the new code they’ve just written. Unit tests exercise that new code with simulated data and conditions chosen to cover most of its code paths.

Depending on the technology stack used in software production, software engineers typically use one or two unit test frameworks that are integrated into the code baseline.

The goal for unit testing is just that: to test a small software unit and ensure that all critical code paths work as designed.

Whether it’s defect testing or new feature testing, unit tests give immediate feedback to a developer while they’re developing the code. A reliable series of tests that verify most code paths and logic goes a long way toward building confidence in our code baseline.
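To make this concrete, here is a minimal sketch of a unit test written with Python’s built-in `unittest` framework. The `calculate_shipping` function and its pricing rule are hypothetical, invented only to give the tests something to exercise. Note that the tests cover both the normal path and the error path, which is what “most code paths” means in practice.

```python
import unittest


def calculate_shipping(weight_kg: float) -> float:
    """Hypothetical feature under test: a flat fee plus a per-kilogram rate."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    base_fee = 5.0
    per_kg = 2.0
    return base_fee + per_kg * weight_kg


class CalculateShippingTest(unittest.TestCase):
    def test_typical_weight(self):
        # Happy path: 5.0 + 2.0 * 3 = 11.0
        self.assertAlmostEqual(calculate_shipping(3), 11.0)

    def test_fractional_weight(self):
        # Non-integer input: 5.0 + 2.0 * 0.5 = 6.0
        self.assertAlmostEqual(calculate_shipping(0.5), 6.0)

    def test_rejects_non_positive_weight(self):
        # Error path: invalid input must raise instead of returning a price
        with self.assertRaises(ValueError):
            calculate_shipping(0)
```

Saved as a module (for example, a hypothetical `test_shipping.py`), this runs with `python -m unittest test_shipping`, which is also the kind of command a CI server would execute on every commit.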

End-to-end testing

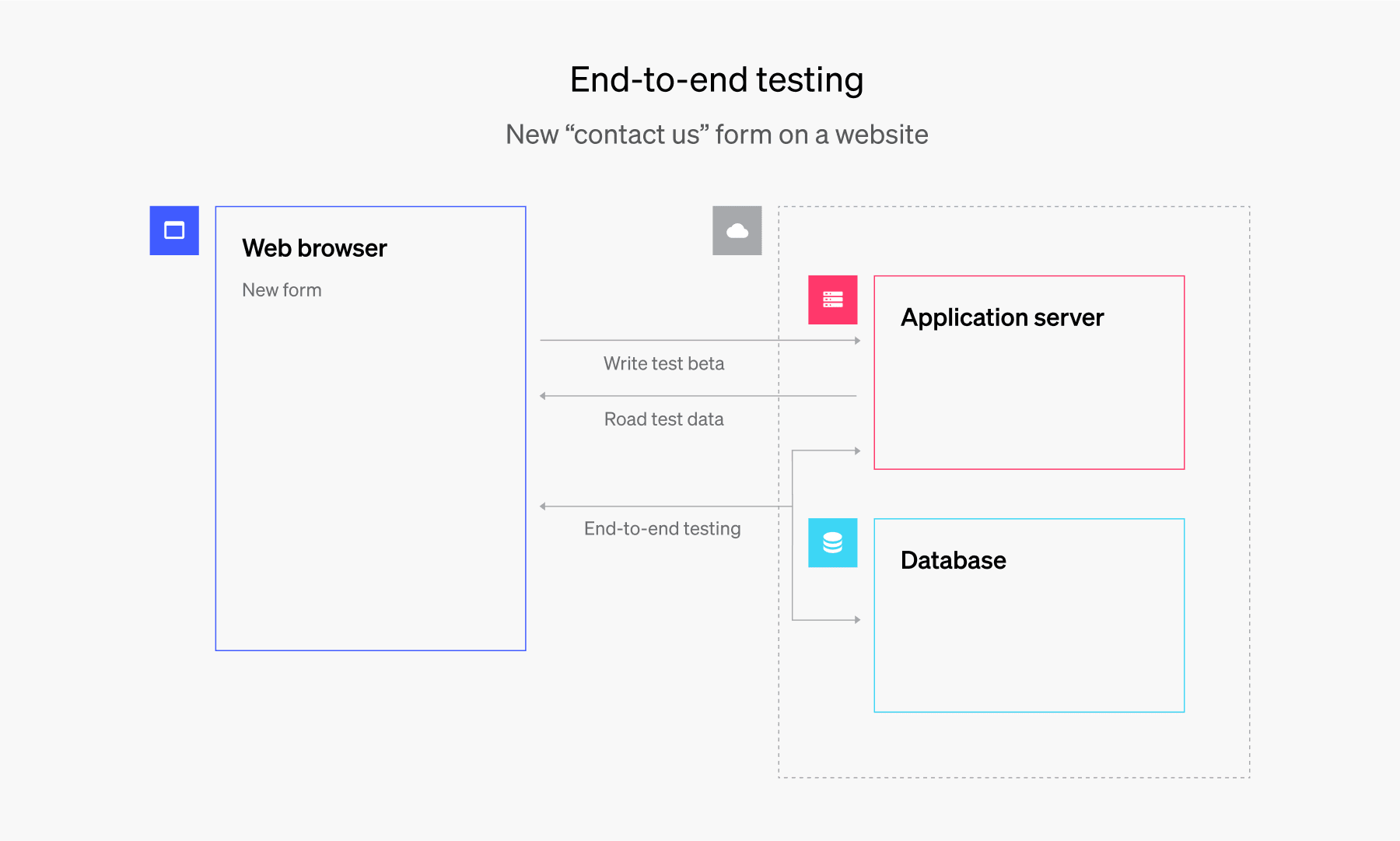

While unit testing focuses on the small units that make up a software feature, end-to-end testing focuses on slices of the entire system. Because modern software contains many moving parts, developing software really means developing a system. Consequently, end-to-end test cases are system tests, as opposed to smaller-scale unit tests.

During the normal software development process, end-to-end testing refers to test code written for a particular software feature that spans multiple layers of the software architecture. Development team members write test code and scripts that exercise long slices of the tech stack. End-to-end test suites form the backbone of system testing: their scope is broader than that of a unit test, and a series of end-to-end tests builds confidence that data and logic flow correctly through the entire tech stack.

Typically, end-to-end testing exercises code from the user interface through the service layer into the business logic and all the way to the data layer.
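The sketch below shrinks that idea into a single Python file so the path through the layers is easy to follow. All names (`OrderRepository`, `OrderLogic`, `handle_order_request`, `render_confirmation`) are hypothetical, and an in-memory dictionary stands in for a real database. An actual end-to-end suite would typically drive a deployed user interface and hit real services and storage; this only illustrates the shape of such a test.

```python
from dataclasses import dataclass, field


# Data layer: a hypothetical stand-in for a real database.
@dataclass
class OrderRepository:
    rows: dict = field(default_factory=dict)

    def save(self, order_id: int, total: float) -> None:
        self.rows[order_id] = total

    def load(self, order_id: int) -> float:
        return self.rows[order_id]


# Business logic layer: pricing and validation rules live here.
class OrderLogic:
    def __init__(self, repo: OrderRepository):
        self.repo = repo
        self.next_id = 1

    def place_order(self, unit_price: float, quantity: int) -> int:
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        order_id = self.next_id
        self.next_id += 1
        self.repo.save(order_id, unit_price * quantity)
        return order_id


# Service layer: translates an incoming request into a business call.
def handle_order_request(logic: OrderLogic, request: dict) -> dict:
    try:
        order_id = logic.place_order(request["unit_price"], request["quantity"])
        return {"status": 201, "order_id": order_id}
    except (KeyError, ValueError) as exc:
        return {"status": 400, "error": str(exc)}


# UI layer: the text the user would see after submitting the order.
def render_confirmation(response: dict) -> str:
    if response["status"] == 201:
        return f"Order #{response['order_id']} confirmed"
    return "Sorry, your order could not be placed"


def test_place_order_end_to_end():
    repo = OrderRepository()
    logic = OrderLogic(repo)
    # Start from the request the UI would submit and go all the way to storage.
    response = handle_order_request(logic, {"unit_price": 4.0, "quantity": 3})
    assert render_confirmation(response) == "Order #1 confirmed"
    # The data layer must hold the total the business logic computed.
    assert repo.load(response["order_id"]) == 12.0


def test_invalid_order_end_to_end():
    repo = OrderRepository()
    response = handle_order_request(OrderLogic(repo), {"unit_price": 4.0, "quantity": 0})
    assert render_confirmation(response) == "Sorry, your order could not be placed"
    assert repo.rows == {}  # nothing was persisted
```

The two test functions use plain `assert` statements, so they can be collected by a test runner such as pytest or simply called directly.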

Using unit tests and end-to-end tests, software developers and testing teams ensure that new code functions as designed.

Why care about automated tests?

Ideally, automated tests run continually, whenever new code merges into the code baseline. In practice, however, unit tests tend to run with every new code commit, while end-to-end test suites may run overnight because of how long they take to complete. In the morning, quality control engineers analyze the test results and give developers quick feedback about any failures. The exact continuous integration setup depends on a project’s size and on how long its test code and scripts take to run.

Having robust unit tests and end-to-end test automation goes a long way toward building confidence in a particular software release candidate. These tests form the foundation of system testing and move the team toward test-driven development.

Now let’s move on to testing an actual release candidate software package.

Smoke testing

“Where there’s smoke, there’s fire,” as the saying goes. In software, smoke testing means making sure that the release candidate is stable and all major components are working. It’s kind of like the home inspection performed before buying a house. During smoke testing, the testing team ensures the user interface, service layer, database, and other major components start and function properly.

Smoke testing validates a release candidate to ensure that it’s ready to be tested.

Smoke testing in software is also referred to as pre-release testing or stability testing. With proper automated testing and CI/CD practices in place, we can virtually eliminate the need for a separate, manual smoke testing phase.

Depending on each system’s complexity, a smoke test plan can include specific steps that will exercise the main parts of the software and ensure that this release candidate is valid.
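A smoke test plan can be automated as a list of quick component checks. The sketch below assumes each check is a hypothetical zero-argument function returning `True` when its component works; in a real system these might call health endpoints or open a database connection. Any check that raises is treated as a failure, and the candidate moves on to deeper testing only if every component passes.

```python
from typing import Callable, Dict


def run_smoke_test(checks: Dict[str, Callable[[], bool]]) -> Dict[str, bool]:
    """Run each component check once; a check that raises counts as a failure."""
    results: Dict[str, bool] = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            results[name] = False
    return results


def candidate_is_testable(results: Dict[str, bool]) -> bool:
    # Deeper testing starts only if every major component passed.
    return all(results.values())


# Hypothetical checks; the database check simulates an outage.
def check_database() -> bool:
    raise ConnectionError("database not reachable")


checks = {
    "ui": lambda: True,
    "service_layer": lambda: True,
    "database": check_database,
}
results = run_smoke_test(checks)
print(results)                         # {'ui': True, 'service_layer': True, 'database': False}
print(candidate_is_testable(results))  # False
```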

Regression testing

Line-of-business units and marketing departments always get excited about new features in a new software release candidate. While this is indeed exciting, quality control engineers must also make sure that existing functionality still works as expected. Development teams want to be sure they don’t break what their users have already been relying on.

Regression testing always looks back at existing functionality (existing before this release candidate) and ensures that it still works properly as expected by users.

As the software product matures, the testing team creates and evolves a set of regression test cases and regression test suites that always run during any release test. This becomes the regression test plan that executes during each release testing phase.
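One common way to build such a suite is to record inputs together with the outputs users saw in the previous release, then replay them against every new candidate. In this sketch, `format_price` and `format_price_v2` are hypothetical: the first represents existing behavior, and the second represents a well-intentioned change in a new release candidate that quietly alters that behavior.

```python
from typing import Callable, List, Tuple


def format_price(cents: int) -> str:
    """Hypothetical existing behavior that users rely on."""
    return f"${cents // 100}.{cents % 100:02d}"


def format_price_v2(cents: int) -> str:
    """Hypothetical rewrite in a new release candidate."""
    return f"${cents / 100}"


# Regression cases: inputs and the exact outputs shipped in the previous release.
REGRESSION_CASES: List[Tuple[int, str]] = [
    (0, "$0.00"),
    (5, "$0.05"),
    (1999, "$19.99"),
    (100000, "$1000.00"),
]


def run_regression_suite(fn: Callable[[int], str], cases: List[Tuple[int, str]]) -> List[str]:
    """Return a description of every case whose output changed."""
    failures = []
    for given, expected in cases:
        actual = fn(given)
        if actual != expected:
            failures.append(f"{fn.__name__}({given!r}): expected {expected!r}, got {actual!r}")
    return failures


print(run_regression_suite(format_price, REGRESSION_CASES))  # []
for failure in run_regression_suite(format_price_v2, REGRESSION_CASES):
    print(failure)  # flags the 0 and 100000 cases ("$0.0", "$1000.0")
```

The rewrite still passes the `5` and `1999` cases, which is exactly why a broad, growing set of recorded cases matters: a spot check of a couple of values would have missed the regression.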

So far, we have a stable and reasonably tested release candidate that doesn’t break existing functionality. Release testing is building our confidence in this release candidate.

Now we need to get even closer to running this release candidate in a production-like environment by simulating many concurrent users, just as in normal usage. This is where load testing comes in.

Load testing

Using various techniques and products, software developers and quality assurance (QA) engineers can simulate many concurrent users of the system. This adds load to the system so that we can see whether anything breaks under that load.

During this testing phase, the development team or DevOps team monitors system logs and other signals for errors and exceptions. Load testing often doubles as performance testing, since the team is already watching system logs and other performance indicators.

You can formalize the performance criteria in a load test plan.
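As a rough illustration, the sketch below simulates concurrent users with a thread pool, measures each request’s latency, and checks the results against criteria from a load test plan. `handle_request` is a hypothetical stand-in for a call to the release candidate (it sleeps briefly and fails for one simulated user), and the criteria values are assumptions chosen for the example. In practice, a dedicated load-testing tool would usually drive a deployed candidate over the network.

```python
import random
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(user_id: int) -> None:
    """Hypothetical stand-in for one call to the release candidate."""
    time.sleep(random.uniform(0.001, 0.005))  # simulated work
    if user_id % 50 == 0:
        raise RuntimeError("simulated server error")


def timed_call(user_id: int):
    start = time.perf_counter()
    try:
        handle_request(user_id)
        ok = True
    except Exception:
        ok = False
    return time.perf_counter() - start, ok


def run_load_test(concurrent_users: int, requests_per_user: int) -> dict:
    user_ids = [u for u in range(concurrent_users) for _ in range(requests_per_user)]
    # One worker per simulated user, so requests overlap as they would in production.
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        samples = list(pool.map(timed_call, user_ids))
    latencies = [latency for latency, _ in samples]
    errors = sum(1 for _, ok in samples if not ok)
    p95 = statistics.quantiles(latencies, n=20)[-1]  # 95th percentile cut point
    return {"requests": len(samples), "error_rate": errors / len(samples), "p95_s": p95}


# Hypothetical performance criteria taken from a load test plan.
CRITERIA = {"max_error_rate": 0.01, "max_p95_s": 0.5}


def meets_criteria(report: dict) -> bool:
    return (report["error_rate"] <= CRITERIA["max_error_rate"]
            and report["p95_s"] <= CRITERIA["max_p95_s"])


report = run_load_test(concurrent_users=20, requests_per_user=5)
print(report["error_rate"])    # 0.05 (user 0 fails all 5 of its requests)
print(meets_criteria(report))  # False
```

Writing the criteria down as data, rather than judging log output by eye, makes the pass/fail decision for each release candidate repeatable.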

Acceptance testing

Acceptance testing is a more formal testing method. You need it when you deliver a new software release to a customer who installs it on their own systems. In a typical SaaS (software-as-a-service) model, the development team covers acceptance criteria as part of its normal release testing procedure, so no separate, formal acceptance testing phase is required.

Consider a different scenario: a software engineering company builds and delivers software that the customer runs on its own systems. In that case, the users themselves usually perform additional testing to ensure that the delivered release candidate matches their requirements and business needs. The customer receives the release candidate, tests it against their own acceptance test plan, and decides either to accept it as is or to request changes.

By now, we have a reliable software release candidate that has been tested in various ways to ensure that the software is useful and works properly according to known requirements.

History of release testing

Before the implementation of continuous integration and continuous delivery (CI/CD) practices, release testing was slow, rigid, and error-prone. Only when the development process officially ended did the quality assurance teams take over the release candidate and start testing. Selecting a release candidate was also difficult as new features and fixes were seldom completed on time and a somewhat stable code baseline was hard to maintain.

Selecting and deploying a release candidate has long been difficult. This was especially true when the only interfaces available to testing environments were floppy disks and network cables.

In addition, most older release testing procedures consisted of many pages of test cases and test series, which software quality engineers executed manually. This was tedious work. In this environment, when bugs were found, fixing them and integrating the fixes into the release candidate had to be a very formal process, often overseen by a change management board that discussed and approved every bug fix before it was integrated into the release candidate. Only with this formality were engineers able to maintain a sense of code stability during release testing.

Modern release testing

The software development process, however, keeps evolving to solve inherent problems. Continuous integration and continuous delivery practices have removed a lot of the barriers to continual testing.

Nowadays, every new code commit triggers a new build and runs automated tests. This adds a lot of confidence in the code baseline stability. In addition, with new code changes being integrated quickly, quality engineers can quickly verify fixes and provide feedback to developers. These advances have moved the software development process toward test-driven development.

The quick feedback loop between testing and development is one of the most significant advances in software development.

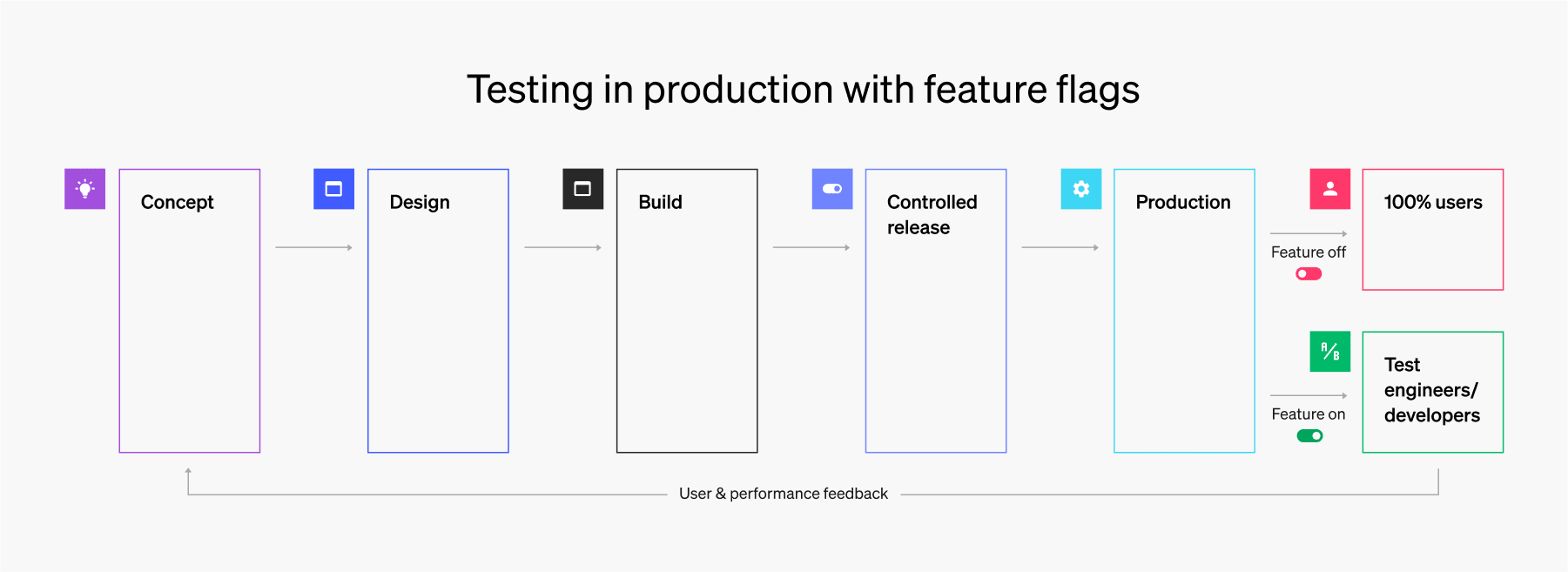

In addition, using feature flags makes selecting a release candidate a much easier task. With feature flags, you can easily turn new features on or off depending on their maturity. So, if a new feature doesn’t complete during a development sprint, you can turn it off until the next release. This way, you don’t have to delay releases until a feature is complete.
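A minimal sketch of the idea, assuming a hypothetical in-memory `FlagStore` (this is not the LaunchDarkly SDK or any real flag service’s API; a real service keeps flag state remotely and updates it at runtime). The unfinished feature’s code ships in the release candidate, but it stays off until its flag is turned on.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class FlagStore:
    """Hypothetical in-memory flag store used only for illustration."""
    flags: Dict[str, bool] = field(default_factory=dict)

    def is_enabled(self, key: str, default: bool = False) -> bool:
        # Unknown flags fall back to a safe default (off).
        return self.flags.get(key, default)

    def set(self, key: str, enabled: bool) -> None:
        self.flags[key] = enabled


def checkout_page(flags: FlagStore) -> str:
    # The new flow is in the build but hidden until its (hypothetical) flag is on.
    if flags.is_enabled("new-checkout-flow"):
        return "new checkout"
    return "classic checkout"


flags = FlagStore({"new-checkout-flow": False})
print(checkout_page(flags))  # classic checkout
flags.set("new-checkout-flow", True)
print(checkout_page(flags))  # new checkout
```

Because the flag defaults to off, a feature that isn’t finished by the end of a sprint can ship dark in this release and be switched on in a later one.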

Thus, a modern testing process builds confidence into a release candidate much faster than the old testing approach.

Release testing in production with feature flags

Another modern approach to software release testing is testing in production. This means testing new functionality on a live production server. Such an approach gives you a much better idea of how a feature will perform in a real-world setting than pre-production testing does. Conventionally, though, developers have perceived testing in production as a risky practice. CI/CD principles and feature flags reduce that risk.

First, in keeping with CI/CD principles, if developers are going to test in production, they should only do so with small pieces of functionality. Second, developers should use feature flags to control which users can interact with the new functionality in production. For instance, they should use feature flags to perform canary launches or dark launches, wherein only a small subset of hand-selected users can access the new feature. When the feature passes all its performance tests, developers can roll it out to a broader user audience via a percentage rollout, a targeted ring deployment, or some other release approach. If, however, the feature hurts system performance, a developer can disable the relevant feature flag without having to restart the application.
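The sketch below shows how one flag might combine the three controls just described: a targeted audience for a canary or dark launch, a percentage rollout for everyone else, and a kill switch. `RolloutFlag` and its fields are hypothetical and do not mirror any particular vendor’s API. Hashing the flag key together with the user ID keeps each user in the same bucket on every request, so raising the percentage adds users without flipping existing ones back and forth.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Set


@dataclass
class RolloutFlag:
    """Hypothetical flag combining targeting, a percentage rollout, and a kill switch."""
    key: str
    enabled: bool = True  # kill switch: False turns the feature off for everyone
    targeted_users: Set[str] = field(default_factory=set)  # canary / dark-launch audience
    rollout_percent: int = 0  # share of all other users, 0 to 100

    def bucket(self, user_id: str) -> int:
        # Stable hash, so a given user always lands in the same bucket (0-99).
        digest = hashlib.sha256(f"{self.key}:{user_id}".encode()).hexdigest()
        return int(digest, 16) % 100

    def is_on_for(self, user_id: str) -> bool:
        if not self.enabled:
            return False
        if user_id in self.targeted_users:
            return True
        return self.bucket(user_id) < self.rollout_percent


flag = RolloutFlag("new-search", targeted_users={"qa-alice", "qa-bob"})
print(flag.is_on_for("qa-alice"))     # True: hand-selected canary user
print(flag.is_on_for("customer-42"))  # False: rollout is still at 0%

flag.rollout_percent = 10  # widen to roughly 10% of the remaining users
users = [f"user-{i}" for i in range(10_000)]
share = sum(flag.is_on_for(u) for u in users) / len(users)
print(f"{share:.1%}")  # close to 10%, not exactly 10%

flag.enabled = False  # the feature hurts performance: switch it off at runtime
print(flag.is_on_for("qa-alice"))  # False
```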

In the latter scenario, feature flags reduce the impact of the defective feature significantly. That is, they allow developers to run tests in production with less risk. And testing in production gives developers much better system performance and user engagement data earlier in the release process than pre-production testing. What’s more, it allows developers to save time and energy on maintaining pre-production test and staging environments.

How are you release testing?

Release testing is a testing approach rather than a single testing method. It spans several common software tests like unit tests, smoke tests, regression tests, and so on. Modern testing practices have also emerged that align with CI/CD and DevOps. More and more teams, for example, have begun testing small batches of functionality in production. And they’re using LaunchDarkly feature flags to do this in a safe, controlled manner.

An ideal testing approach should optimize for speed, stability, and user experience. That is, you want to ship code as fast as possible while maintaining high application performance and delivering a top-flight experience to end-users. Running tests in production with feature flags can help development teams strike that balance.
