If you are running an AI app in production, you want to give your users the latest and greatest features while doing everything possible to keep their experience smooth and bug-free. Decoupling deployments from AI configuration changes is one way to reduce risk.

LaunchDarkly’s new AI configs (now in Early Access) can help! With AI configs, you can change your model or prompt at runtime, without needing to deploy any new code. Decoupling configuration changes from deployment grants developers a ton of flexibility to roll out changes quickly and smoothly.

In this tutorial, we’ll teach you to use AI configs to upgrade the OpenAI model version in an ExpressJS application.

Our example app generates letters of reference, using a person’s name as an input parameter. Feel free to substitute another use case if you’ve got one in mind.

Prerequisites

- An OpenAI API key. You can create one in the OpenAI platform dashboard.

- A free LaunchDarkly account with AI configs enabled. To request access, click ‘AI configs’ in the left-hand navigation, then click ‘Join the EAP’. Our team will turn on access for you.

- A developer environment with Node.js (version 22.8.0 or above) and npm installed.

Setting up your developer environment

Set up your environment and install dependencies using these commands:

```shell
git clone https://github.com/annthurium/ai-config-expressjs
cd ai-config-expressjs
npm install
```

Rename your .env.example file to .env. Copy your OpenAI API key into the .env file as the value of OPENAI_API_KEY, then save the file. We’ll set up our LaunchDarkly SDK key later.

Start the server.

```shell
npm start
```

Load http://localhost:8000/ in your browser. You should see a UI for the student reference letter generator. The “generate” button won’t work until we hook up LaunchDarkly, so we’ll tackle that shortly. But first, let’s take a quick tour through the project’s stack.

Application architecture overview

What are the major components of this project?

index.js holds our routes and basic server logic:

```javascript
const express = require("express");
const path = require("path");
const fs = require("fs");
const { generate } = require("./openAIClient");
const bodyParser = require("body-parser");

const app = express();

// Parse JSON and URL-encoded form request bodies
app.use(express.json());
app.use(bodyParser.urlencoded({ extended: false }));

// Serve static files from 'static' directory
app.use("/static", express.static("static"));

// Generate a reference letter for the submitted student name
app.post("/generate", async (req, res) => {
  try {
    const studentName = req.body.studentName;
    const result = await generate({ NAME: studentName });
    res.json({ success: true, result });
  } catch (err) {
    res.json({ success: false, error: err.message });
  }
});

// Serve the single-page UI
app.get("/", (req, res) => {
  fs.readFile(
    path.join(__dirname, "static/index.html"),
    "utf8",
    (err, data) => {
      if (err) {
        res.status(500).send("Error loading page");
        return;
      }
      res.send(data);
    }
  );
});

const PORT = process.env.PORT || 8000;

app.listen(PORT, () => {
  console.log(`Server running on port ${PORT}`);
});
```
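If you want to exercise the /generate route without the UI (for example, from a script), the sketch below shows one way to call it. buildGenerateRequest, parseGenerateResponse, and generateLetter are hypothetical helpers, not part of the sample repo; they assume the server above is running on port 8000 and a Node version with a global fetch (Node 18 or later).

```javascript
// Hypothetical helpers for calling the /generate route defined in index.js.

// Builds the URL and fetch options for a JSON POST to /generate.
function buildGenerateRequest(studentName, baseUrl = "http://localhost:8000") {
  return {
    url: `${baseUrl}/generate`,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ studentName }),
    },
  };
}

// Unwraps the { success, result } / { success, error } envelope sent by index.js.
function parseGenerateResponse(payload) {
  if (!payload.success) {
    throw new Error(payload.error || "Unknown error");
  }
  if (payload.result === null) {
    // generate() returns null (instead of throwing) when the OpenAI call fails
    throw new Error("Generation failed; check the server logs");
  }
  return payload.result;
}

// Sends the request and returns the generated letter text.
async function generateLetter(studentName) {
  const { url, init } = buildGenerateRequest(studentName);
  const res = await fetch(url, init);
  return parseGenerateResponse(await res.json());
}
```

With the server running, generateLetter("Ada").then(console.log) should print a letter once LaunchDarkly is connected. Treating a null result as an error reflects the fact that generate() returns null rather than throwing when the OpenAI call fails, so the route still responds with success: true in that case.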

openAIClient.js calls the LLM to generate a response. LaunchDarkly’s AI SDK fetches the prompt and model data at runtime, which is then passed to the model.

With a call to tracker.trackOpenAIMetrics, LaunchDarkly also records input tokens, output tokens, and generation count for each request. The same tracker can also record optional user feedback about response quality. The docs on tracking AI metrics go into more detail if you are curious.

```javascript
require("dotenv").config();

const OpenAI = require("openai");
const launchDarkly = require("@launchdarkly/node-server-sdk");
const launchDarklyAI = require("@launchdarkly/server-sdk-ai");

// Must match the key of the AI config created in the LaunchDarkly app
const LD_CONFIG_KEY = "model-upgrade";

// Fallback value used when LaunchDarkly can't serve the AI config
const DEFAULT_CONFIG = {
  enabled: true,
  model: { name: "gpt-4" },
  messages: [],
};

// example data
const userContext = {
  kind: "user",
  name: "Mark",
  email: "mark.s@lumonindustries.work",
  key: "example-user-key",
};

const ldClient = launchDarkly.init(process.env.LAUNCHDARKLY_SDK_KEY);
const ldAiClient = launchDarklyAI.initAi(ldClient);
const openaiClient = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

/**
 * Generates text using OpenAI's chat completion API.
 * @param {Object} options - Variables interpolated into the prompt messages, e.g. { NAME: "Ada" }
 * @returns {Promise<string|null>} The generated text or null if an error occurs
 */
async function generate(options = {}) {
  try {
    // Fetch the model and prompt messages for this user at runtime
    const configValue = await ldAiClient.config(
      LD_CONFIG_KEY,
      userContext,
      DEFAULT_CONFIG,
      options
    );

    const {
      model: { name: modelName },
      tracker,
      messages = [],
    } = configValue;

    console.log("model: ", configValue.model);

    // Call OpenAI and record token usage and generation metrics in LaunchDarkly
    const completion = await tracker.trackOpenAIMetrics(async () =>
      openaiClient.chat.completions.create({
        model: modelName,
        messages,
      })
    );

    const response = completion.choices[0].message.content;
    return response;
  } catch (error) {
    console.error("Error generating AI response:", error);
    return null;
  }
}

module.exports = { generate };
```

Our front end lives in the static folder. We’re using vanilla HTML/CSS/JavaScript here since the front-end state is fairly minimal.

Onwards to configure our first, well, config.

Creating an AI config

Go to the LaunchDarkly app.

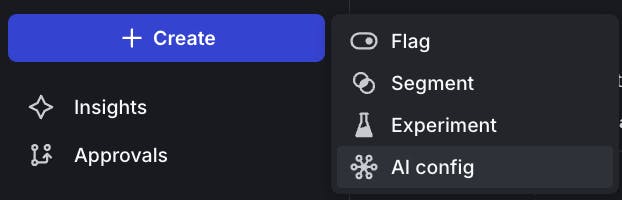

Click on the Create button. Select AI config from the menu.

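Give your AI config a name, such as “model-upgrade”. The config’s key needs to match LD_CONFIG_KEY in openAIClient.js, so if you choose a different name, update that constant to match the generated key. Click Create.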

Next, we’re going to add a variation to represent the older model. A variation represents a specific combination of a model and messages, plus other options such as temperature and max tokens. Variations are editable, so it’s okay to make mistakes.

In your prompt messages, use double curly braces to surround variables. You can also use Markdown to format messages. You can see additional examples in the docs.
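The SDK fills in these placeholders for you, using the variables passed as the last argument to ldAiClient.config() (in this app, { NAME: studentName }). To show roughly what that substitution does, here is a simplified stand-in. renderTemplate and renderMessages are hypothetical helpers that you don’t need in the app; they handle only plain {{VARIABLE}} placeholders, not Mustache sections or nested lookups.

```javascript
// Hypothetical, simplified stand-in for the SDK's prompt interpolation.
// Replaces each {{VARIABLE}} placeholder with the matching value;
// unknown placeholders become empty strings.
function renderTemplate(template, variables) {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (_match, name) => {
    const value = variables[name];
    return value === undefined || value === null ? "" : String(value);
  });
}

// Applies renderTemplate to the content of every chat message,
// leaving the role and any other fields untouched.
function renderMessages(messages, variables) {
  return messages.map((message) => ({
    ...message,
    content: renderTemplate(message.content, variables),
  }));
}
```

For example, renderTemplate("Generate a reference letter for {{NAME}}.", { NAME: "Ada" }) returns "Generate a reference letter for Ada.".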

With the Variations tab selected, configure the first variation using the following options:

- Name: gpt-4o

- Model: gpt-4o

- Role: system. “You are a helpful research assistant.”

- Role: user. “Generate a reference letter for {{NAME}}, a 22 year old student at UCLA.”

Leave the max tokens and temperature as is.

When you’re done, save changes.

Next, we’ll create the variation for the newer model. When rolling out any kind of upgrade, changing one thing at a time makes success easier to measure. Thus, the messages that make up our prompt are identical to the first variation. Click the Add another variation button at the bottom, just above the Save changes button. Then input the following information into the variation section:

- Name: chatgpt-4o-latest

- Model: chatgpt-4o-latest

- Role: system. “You are a helpful research assistant.”

- Role: user. “Generate a reference letter for {{NAME}}, a 22 year old student at UCLA.”

Again, save changes when you are done.
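One way to double-check that the two variations differ only in the model is to write them down as data and compare them. The objects below are a hypothetical mirror of the form fields you just filled in, not LaunchDarkly’s storage format, and changedFields is an illustrative helper.

```javascript
// Hypothetical plain-object mirror of the two variations entered in the UI.
// Both variations share the exact same prompt messages.
const sharedMessages = [
  { role: "system", content: "You are a helpful research assistant." },
  {
    role: "user",
    content: "Generate a reference letter for {{NAME}}, a 22 year old student at UCLA.",
  },
];

const variations = {
  baseline: { name: "gpt-4o", model: "gpt-4o", messages: sharedMessages },
  candidate: { name: "chatgpt-4o-latest", model: "chatgpt-4o-latest", messages: sharedMessages },
};

// Returns the names of top-level fields whose values differ between two variations.
function changedFields(a, b) {
  const keys = new Set([...Object.keys(a), ...Object.keys(b)]);
  return [...keys].filter((key) => JSON.stringify(a[key]) !== JSON.stringify(b[key]));
}
```

changedFields(variations.baseline, variations.candidate) returns ["name", "model"], confirming that the prompt messages are unchanged between the two variations.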

Like feature flags, AI configs must be enabled in order to start serving data to your application. Click on the Targeting tab, and then click the Test button to select that environment. (Alternatively, you could use Production, or any other environment that exists in your LaunchDarkly project.)

Edit the Default rule dropdown to serve the gpt-4o variation. This older model is our baseline. We’ll create a progressive rollout to serve the newer model to more and more users if things look good. Click the toggle to turn the config on. When you’re done, click Review and save.

To authenticate with LaunchDarkly, we’ll need to copy the SDK key into the .env file in our JavaScript project.

Select the … dropdown next to the Test environment. Select SDK key from the dropdown menu to copy it to the clipboard.

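Open the .env file in your editor. Paste the SDK key (which should start with "sdk-" followed by a unique identifier) as the value of LAUNCHDARKLY_SDK_KEY, and save the file. Because the .env file is only read when the server starts, stop the server and run npm start again.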
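A missing or mistyped key is an easy mistake to make at this step. As an optional safeguard, you could check both secrets at startup. checkEnv is a hypothetical helper, not part of the sample repo; it assumes the variable names OPENAI_API_KEY and LAUNCHDARKLY_SDK_KEY that openAIClient.js reads.

```javascript
// Hypothetical startup check for the two secrets this app reads from .env.
// Call it after require("dotenv").config() so process.env is populated.
// Returns a list of human-readable problems (empty if everything looks fine).
function checkEnv(env = process.env) {
  const problems = [];

  if (!env.OPENAI_API_KEY) {
    problems.push("OPENAI_API_KEY is missing");
  }

  const sdkKey = env.LAUNCHDARKLY_SDK_KEY;
  if (!sdkKey) {
    problems.push("LAUNCHDARKLY_SDK_KEY is missing");
  } else if (!sdkKey.startsWith("sdk-")) {
    // Server-side SDK keys start with "sdk-"; mobile keys and client-side IDs do not
    problems.push('LAUNCHDARKLY_SDK_KEY should start with "sdk-"');
  }

  return problems;
}
```

Calling it right after require("dotenv").config() and exiting when it returns a non-empty array surfaces configuration problems before the first request, rather than as a null response from generate().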

Reload http://localhost:8000/ and try putting your name into the reference letter generator. Enjoy the warm fuzzy feelings of your fake achievements.

Progressively upgrading to a newer model

Head back to the LaunchDarkly app. We’re going to use progressive rollouts to automatically release the new model to users, on a timeline we set up.

On the Targeting tab, click Add rollout. Select Progressive rollout from the dropdown.

The progressive rollout dialog has some sensible defaults. If I were upgrading a production API, I’d keep these as is and watch my dashboards carefully for regressions.

If you’re testing or prototyping, you can remove some steps to speedrun through a rollout in 2 minutes.

Delete unwanted rollout stages by clicking on the ... menu, and selecting Delete.

Your finished rollout should have these attributes:

- Turn AI config on: On

- Variation to roll out: chatgpt-4o-latest

- Context kind to roll out by: user

- Roll out to 25% for 1 minute

- Roll out to 50% for 1 minute

Click Confirm, then click Review and save on the Targeting tab. Wait 2 minutes, then generate a new letter. Check your server logs to confirm the newer model is being used:

```
model: {
  name: 'chatgpt-4o-latest',
  parameters: { maxTokens: 16384, temperature: 0.7 }
}
```

On the Monitoring tab of the AI configs panel, you can see the generation counts for each variation, along with input tokens, output tokens, and satisfaction rate.

The Progressive Rollout UI will also update the Targeting tab to indicate that chatgpt-4o-latest is being served to 100% of users. 🎉
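To make the rollout settings above more concrete, the sketch below models the two-stage schedule and the idea of deterministic percentage bucketing. It is illustrative only: rolloutPercentAt, bucket, and servesNewModel are hypothetical helpers, and LaunchDarkly’s real bucketing algorithm differs in its details (for example, it also mixes in a salt), so don’t expect these functions to predict which variation a given user gets.

```javascript
const crypto = require("crypto");

// Hypothetical model of the rollout configured above: each stage serves the
// new variation to `percent` of contexts for `durationMs`; after the last
// stage the rollout finishes at 100%.
const stages = [
  { percent: 25, durationMs: 60_000 },
  { percent: 50, durationMs: 60_000 },
];

// Percentage of contexts receiving the new variation `elapsedMs` after the rollout starts.
function rolloutPercentAt(elapsedMs, rolloutStages = stages) {
  let stageEnd = 0;
  for (const stage of rolloutStages) {
    stageEnd += stage.durationMs;
    if (elapsedMs < stageEnd) {
      return stage.percent;
    }
  }
  return 100;
}

// Simplified deterministic bucketing: hash "configKey.contextKey" with SHA-1
// and map the first 13 hex digits (52 bits) to a number in [0, 1).
function bucket(configKey, contextKey) {
  const hex = crypto
    .createHash("sha1")
    .update(`${configKey}.${contextKey}`)
    .digest("hex")
    .slice(0, 13);
  return parseInt(hex, 16) / 2 ** 52;
}

// True if this context falls inside the current rollout percentage.
function servesNewModel(configKey, contextKey, elapsedMs) {
  return bucket(configKey, contextKey) < rolloutPercentAt(elapsedMs) / 100;
}
```

In this model, a context’s bucket depends only on the config key and context key, so a given user keeps the same variation while the percentage holds steady and only moves to the new model as the percentage grows. The example app always evaluates the same hardcoded context (example-user-key), so it lands in a single bucket: depending on where that bucket falls, you may see the new model during an early stage or only once the rollout reaches 100%.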

Wrapping it up

If you’ve been following along, you have learned to use LaunchDarkly AI configs to manage runtime configuration for your ExpressJS app. You’ve also learned how to perform a progressive rollout to upgrade to a newer model, while minimizing risks to your users. Well done!

This app is pretty basic. Some future upgrades to consider:

- Tracking output satisfaction rate. Implement a 👍/👎 button your users can press to send answer-quality metrics to LaunchDarkly.

- Prompt improvements. The prompt used here was cribbed from a research paper about gender bias and LLMs. Few-shot prompting, or providing examples of good reference letters to the model, could improve response quality. You could also request additional info from the end user (such as the student’s major or GPA) and pass those variables along to the LLM. It might also be worth testing different model/prompt combinations to see how they stack up on cost, latency, and accuracy.

- Advanced targeting. AI configs give you the flexibility to specify what variant a user should see, based on what you know about them. For example, you could serve the latest model to any users who have @yourcompany.com email addresses, for dogfooding purposes.
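The first and third ideas above need only a little code. The sketch below is hypothetical: recordFeedback assumes the tracker returned by ldAiClient.config() exposes a trackFeedback method that takes { kind: "positive" } or { kind: "negative" } (the SDK exports these values as LDFeedbackKind; check the AI SDK reference for your version), and buildUserContext assumes your app has its own user record with id, name, and email fields.

```javascript
// 1. Thumbs-up/down feedback (hypothetical helper).
// Assumes `tracker` is the tracker returned with the AI config, and that
// trackFeedback accepts { kind: "positive" | "negative" }.
function recordFeedback(tracker, thumbsUp) {
  tracker.trackFeedback({ kind: thumbsUp ? "positive" : "negative" });
}

// 2. Advanced targeting (hypothetical helper).
// Builds a user context from your own user record so that a targeting rule,
// such as "email ends with @yourcompany.com", can match on the email attribute.
function buildUserContext(user) {
  return {
    kind: "user",
    key: user.id,
    name: user.name,
    email: user.email,
  };
}
```

Feedback should be recorded on the same tracker that recorded the generation, so in this app generate() would need to hand the tracker (or a way to look it up) back to whatever route receives the 👍/👎 click. For targeting, passing the context from buildUserContext to ldAiClient.config() in place of the hardcoded userContext lets a rule on the email attribute match your colleagues.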

If you want to learn more about feature management for generative AI applications, here’s some further reading:

- Managing AI model configuration outside of code with the Node.js AI SDK

- Using targeting to manage AI model usage by tier with the Python AI SDK

- Upgrade OpenAI models in Python FastAPI applications—using LaunchDarkly AI configs

Thanks so much for reading. Hit me up on Bluesky if you found this tutorial useful. You can also reach me via email (tthurium@launchdarkly.com) or LinkedIn.