When we decide to roll out a new feature, we can observe what happens to key metrics. But we can’t observe what those metrics would be had we not rolled out that feature (or if we had rolled out a different feature, or the same feature but with some tweaked characteristics).

Wouldn’t it be nice if we could just split the world into a bunch of parallel universes, try out a ton of different options, see which produces the best results, then travel back to the universe where we deployed the best-performing one?


Of course, splitting the world like this would require quantum mechanics and fiddling with the space-time continuum — which definitely isn’t practical. But experimentation gets us reasonably close to our desired outcome by splitting our universe (aka our users) into two or more randomly selected groups, delivering different experiences to them, and then observing the results.

Some light statistics, combined with our intuition, make this approach a pretty good approximation of the theoretical (and impractical) parallel-universes approach.
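To make the "randomly selected groups" idea concrete, here is a minimal sketch of one common way to split users: hash each user ID together with an experiment key and use the result to pick a variation. The function name `assign_variation`, the experiment key `new-checkout`, and the user IDs are all hypothetical, made up for illustration. This is not a description of how any particular experimentation platform assigns traffic.

```python
import hashlib


def assign_variation(user_id: str, experiment_key: str, variations: list[str]) -> str:
    """Deterministically place a user into one of the variations.

    Hashing the experiment key together with the user ID means the same
    user always sees the same experience within an experiment, while
    different experiments split the audience independently.
    """
    digest = hashlib.sha256(f"{experiment_key}:{user_id}".encode("utf-8")).hexdigest()
    # Use the first 32 bits of the hash as a roughly uniform integer.
    bucket = int(digest[:8], 16) % len(variations)
    return variations[bucket]


if __name__ == "__main__":
    # Hypothetical experiment with two equally weighted groups.
    variations = ["control", "treatment"]
    counts = {name: 0 for name in variations}
    for i in range(10_000):
        counts[assign_variation(f"user-{i}", "new-checkout", variations)] += 1
    print(counts)  # the two groups should come out close to an even split
```

Because the assignment is a pure function of the user ID and the experiment key, nothing has to be stored to keep a user's experience consistent between visits.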

So the big question, then, is: when does it make the most sense to experiment?

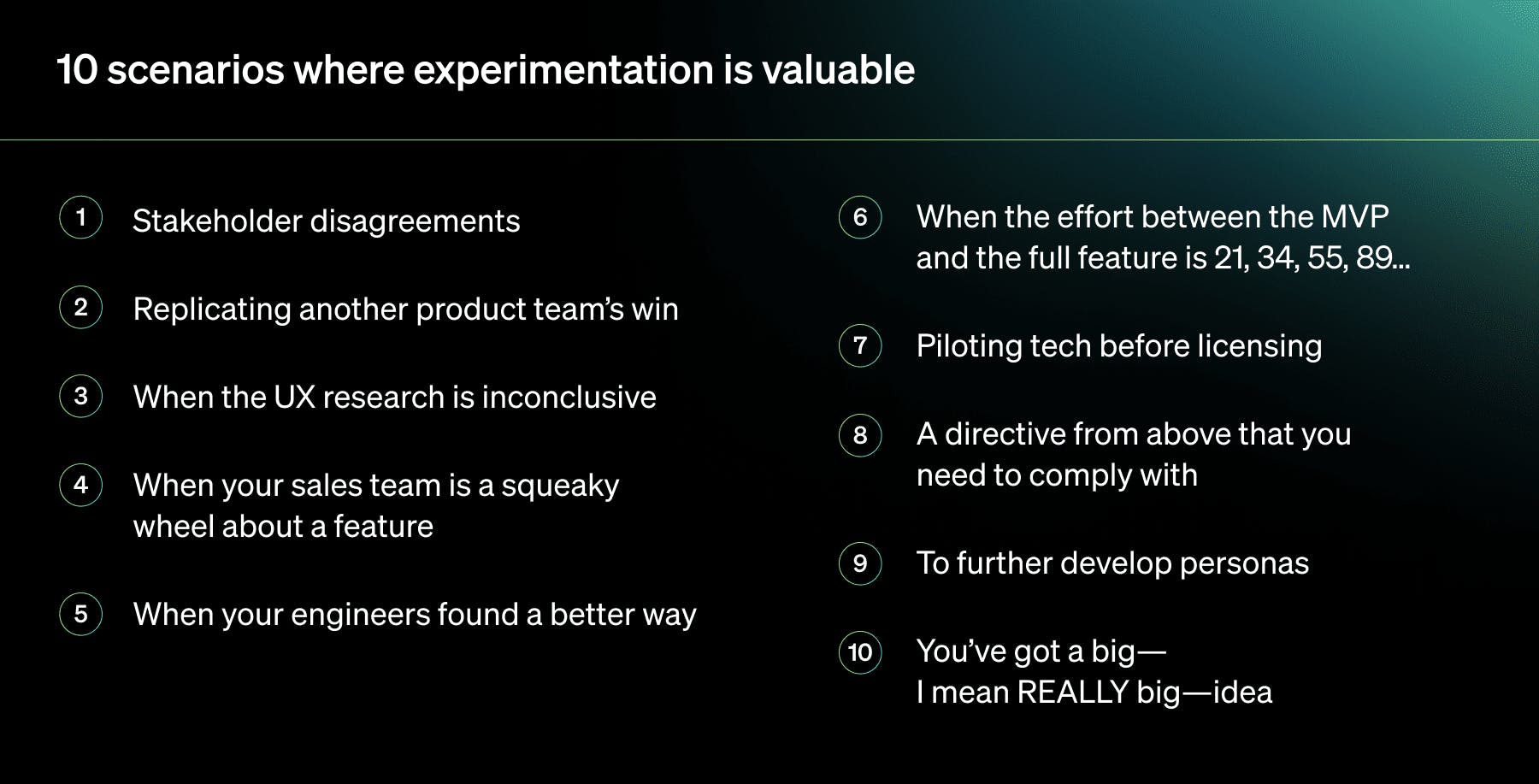

We’ve compiled a list of scenarios that represent some great opportunities for product managers to consider experimenting. This isn’t meant to be exhaustive of all situations, but hopefully it’s some good food for thought.

10 scenarios where experimentation is valuable

1. Stakeholder disagreements

“Let’s just settle this with a test!”

Consensus building is a huge part of the product manager’s role. You’re constantly taking input from engineers, designers, marketers, and executives and trying to strike the right balance between goals, risks, effort, and capacity to build out the roadmap.

In some cases, it can be the right move to let your users decide! If you have key stakeholders with differing opinions on what direction to take, you can test both options.

Use this one sparingly, though, especially if the build time is significant for one or both of the options.

2. Replicating another product team’s win

“If it worked over there, will it work over here?”

Maybe another product team found success with something, and you want to try it. Or maybe someone is recommending you just “go do it” on your team because it worked elsewhere. Regardless, it’s a good idea to validate it before turning it on for all users in your product.

Imagine a financial services company that has several lines of business for each of its lending and credit products. Perhaps one of the credit card teams significantly increased applications by building a flow to intercept users with a pre-qualification invitation code who didn’t apply on the campaign landing page. Before your team adds more weight and complexity to your high-value application flow, experiment to ensure this has the intended impact and doesn’t negatively impact important metrics.

3. When the UX research is inconclusive

“The usability testing says it definitely maybe might work.”

If the user testing or usability research doesn’t give a definitive direction on which concepts work best, try running those variations as a test!

Just because the initial testing didn’t definitively point to one version being worse doesn’t mean both versions are good. The type of evidence you gather from user research is anecdotal or directional (depending on the methodology). It doesn’t conclusively tell us the impact on business metrics.

Deploying one—or both—of the new variations as an experiment enables you to understand the relationship between those different experiences and see the business outcomes.

4. When your sales team is a squeaky wheel

“I HAVE to have this feature to hit my quota!”

If you’re at a SaaS company, this one might be triggering. Sales teams are conditioned to listen for barriers to closing, which are helpful to document but not always in line with the current priorities. Before disrupting your roadmap, try out a painted door test instead!

For example: make a fake button for the suggested feature, but when a user clicks it, just show a pop-up that says, “Thanks for your interest in this feature. It’s still in the works!” Tracking how many people click the button will help determine whether this is a must-have or simply a nice-to-have, so it can be prioritized accordingly.
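A raw click count is hard to read on its own, so it can help to turn it into a click rate with a margin of error. The sketch below uses a Wilson score interval, which is one reasonable choice for a proportion rather than the only one. The numbers in the usage example (1,000 users who saw the button, 50 who clicked) are invented for illustration.

```python
import math


def wilson_interval(clicks: int, views: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate confidence interval for a click rate (Wilson score interval).

    z = 1.96 corresponds to roughly 95% confidence.
    """
    if views <= 0:
        raise ValueError("views must be positive")
    if not 0 <= clicks <= views:
        raise ValueError("clicks must be between 0 and views")

    rate = clicks / views
    denom = 1 + z**2 / views
    center = (rate + z**2 / (2 * views)) / denom
    margin = z * math.sqrt(rate * (1 - rate) / views + z**2 / (4 * views**2)) / denom
    # Clamp to [0, 1] to guard against tiny floating-point overshoots.
    return max(0.0, center - margin), min(1.0, center + margin)


if __name__ == "__main__":
    # Hypothetical painted door results: 50 clicks out of 1,000 users who saw the button.
    low, high = wilson_interval(clicks=50, views=1_000)
    print(f"Click rate is plausibly between {low:.1%} and {high:.1%}")
```

If even the upper end of that range is below whatever threshold you'd consider meaningful interest, that is a decent signal the feature is a nice-to-have.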

5. When your engineers find a better way

“I’m telling you, faster and more stable is better!”

Just like the point about sales-driven product ideas, this shows that good ideas can come from anywhere. Ideas from engineers, however, are usually more concerned with platform stability and performance than user-facing features.

With back-end changes, it can often be difficult to detect the impact on short-term business outcomes. This can be a great thing to test within your beta groups, and, hopefully, you’ll have a chance to ask those users some questions about their streamlined and speedier experiences too!

Let’s say you work for a parking spot reservation app and someone on your engineering team was inspired by some of the ways giants like Instagram prioritize speed to increase stickiness within the app. The idea is that, for users who have turned on location sharing while using the app, the app begins pulling the open spots within 0.5 miles of the current device location as soon as it opens.

It’s possible that the user is going to search for another location, or even make a reservation for another day, but since over half of your users attempt to book in the same location, let’s experiment to see if this causes an increase in bookings. The longer it takes for someone to find a parking spot when they need it, the more likely they are to stumble across a garage they can park in or find a spot on the 5th loop around the block.

If this proves effective, it will be easier to make the case to dedicate resources to these optimizations over other requested features. And if not, you’ll be able to confidently roll back that more resource-heavy way of initially loading your app.
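For a question like "did bookings go up?", the "light statistics" can be as simple as comparing two conversion rates. Here is a minimal sketch of a two-proportion z-test, assuming each user either books or doesn't and that users were randomly assigned to the current experience or the prefetching one. The function name and the counts in the usage example are hypothetical. Real experimentation tools often use other methods (Bayesian or sequential approaches, for instance), so treat this as one illustration rather than the way it has to be done.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare conversion rates of group A (control) and group B (treatment).

    Returns the z statistic and a two-sided p-value.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both groups need at least one user")

    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that there is no real difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test when nobody or everybody converted")

    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: bookings out of users in each group.
    z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=560, n_b=10_000)
    print(f"z = {z:.2f}, p-value = {p:.3f}")
```

A small p-value suggests the difference in booking rates is unlikely to be noise alone. A large one means the data can't distinguish the two experiences yet, which is also useful to know before dedicating more resources.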

6. When the effort between the MVP and the full feature is 21, 34, 55, 89 on the Fibonacci scale...

“Before we bet the farm…”

If there’s a big bet being made on a time-consuming feature, you want to be extra, extra sure it’s going to deliver the value you and your team are expecting. Before the full commitment, test everything you can within that MVP to demonstrate the commitment of resources is justified!

An example might be with a large hotel company with several brands at different levels of luxury, each with hundreds of properties. Offering digital keys within the app is complex because not only is the technology that manages keys different at each brand, but it can also vary by property.

Before starting at the brand level and building out all the variations of how this feature works to communicate with the various back-end systems, consider building it out for all of the properties on one of the easy-to-work-with card key systems, regardless of brand. After your team is able to prove that this makes a material impact on guest satisfaction and loyalty, then determine what the broader roll-out looks like.

This targeted approach will also allow the UX team to nail down how to prompt for enabling the digital key and setting the right permissions to decrease frustration and abandonment of the feature so, as this rolls out to other properties, you’re putting your best foot forward. And, conversely, if this feature isn’t leveraged or it doesn’t make a business impact, you’ll have the data in hand to make the case to prioritize other work!

7. Piloting tech before licensing

“That’s a nice feature, but it ain’t free!”

This scenario is related to the one above, but it involves hard costs and external tech. Before buying and integrating new software, seek to understand exactly how it impacts user flows as well as downstream or outcome metrics.

For example, if you’re an ecommerce company, you’d want to know that adding Klarna or Happy Returns is going to significantly increase average order value or revenue retention before committing to an annual contract and integrating them into your front-end and back-end systems.

8. A directive from above that you need to comply with

“We’ve got no choice, but we still need to quantify the impact.”

A great example of this scenario is when there’s a brand refresh. If the new CMO says you’re switching from product photos to doodles to be on trend, that’s more than a small UI update. That’s going to cause a shift in the whole design language. You’ll want to experiment into this before universally rolling it out.

Even if the direction from above won’t change, you will at least be armed with the data that explains why user behavior and conversion rates have changed, and perhaps have some evidence-driven hypotheses for how to improve.

9. To further develop personas

“No cap. This UX update is bussin’.”

To continue to learn about and understand your key audiences, consider exploring micro copy and positioning. For example, what type of slang would resonate most with your audience? If you’re a GenZ* focused brand, you can talk to your customers differently than if you’re targeting GenX**.

- *Blip (“die another way”) and Lemonade (“The (Almost) 5 Star Insurance Company”)

- ** Nicorette (“Nicorette allows you to fight cravings head on when the urge to smoke hits hard.”) and State Farm (“Like a good neighbor, State Farm is there.”)

10. You’ve got a big—I mean REALLY big—idea

“You want the moon, Mary?”***

Confidently take big swings on disruptive ideas with the safety net of knowing the risk is managed and the impact will be measured. Feature flags help relieve some of this pressure, but the conclusive results from a test are often the justification that pushes leadership into buying into your big idea! What better way to get your idea prioritized and resourced than a demonstration of the impact on business outcomes?

Beyond Guarded Releases to business impact

Continuous deployment and Guarded Releases have helped us ship more confidently, but there is definitely a time and place where you need quantifiable evidence about which variation is truly the winner.

Turn to experimentation when you want to control for the noise and natural seasonality of your product to clearly see which experiences your users prefer and which ones deliver on your business outcomes.
