LLM Product Development with LaunchDarkly Agent Skills in Claude Code, Cursor, or Windsurf

Published February 13, 2026

You’ve got an idea for a side hustle. Maybe it’s a micro-SaaS, a Chrome extension, or a tool you wish existed. The problem isn’t building it. It’s everything before that: validating the idea, writing copy that converts, and picking a stack you won’t regret in six months.

What if you could just tell your AI coding assistant what you need?

“Create three agents: one that validates startup ideas, one that writes landing pages, and one that recommends tech stacks. Give each one the right tools and variables.”

That’s it. No clicking through UIs. No YAML files. No manual setup. Your assistant reads the LaunchDarkly Agent Skills, understands how to use the API, and builds everything for you. In under ten minutes, you have a working multi-agent system with targeting, metrics, and instant model swapping built in.

That’s what we’re building in this tutorial.

Why LaunchDarkly

Here’s what happens without centralized AI config management: your prompts live in code, scattered across files. Want to try GPT instead of Claude? Redeploy. Want to A/B test two different system prompts? Write custom infrastructure. Want to kill a misbehaving agent at 2am? Wake up a developer.

LaunchDarkly AI Configs fix this:

- Change models instantly: swap Sonnet 4.5 for GPT-5.2 from the dashboard, no deploy needed

- A/B test everything: split traffic between prompt variations and measure which converts

- Kill switch: disable any agent in one click when it starts hallucinating

- Cost visibility: track tokens and latency per agent, per variation, per user segment

Agent Skills are the shortcut. Instead of learning the LaunchDarkly API or navigating the UI, you just describe what you want in a sentence. Your AI coding assistant reads the skills and handles the rest: creating projects, configuring agents, attaching tools, setting up targeting. You talk, it builds.

Overview

LaunchDarkly Agent Skills speed up LLM product development by letting you describe what you want in plain English. Install them once, and your AI coding assistant knows how to create AI Configs, set up targeting, and wire up metrics, all from natural language prompts.

What you’ll build

A “Side Project Launcher,” a multi-agent system that helps you go from idea to shipped product:

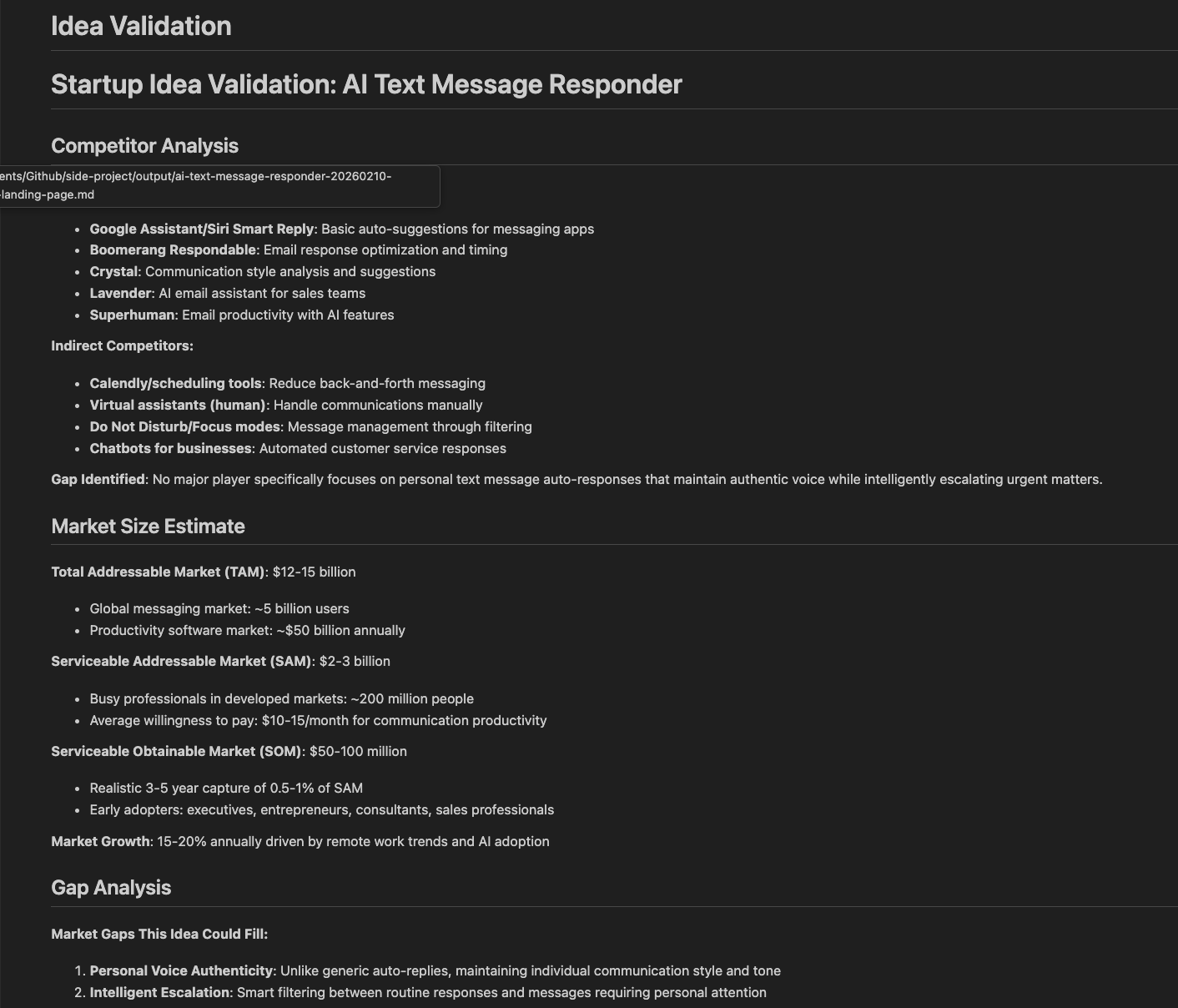

- Idea Validator: researches competitors, analyzes market gaps, scores viability

- Landing Page Writer: generates headlines, copy, and CTAs based on your value prop

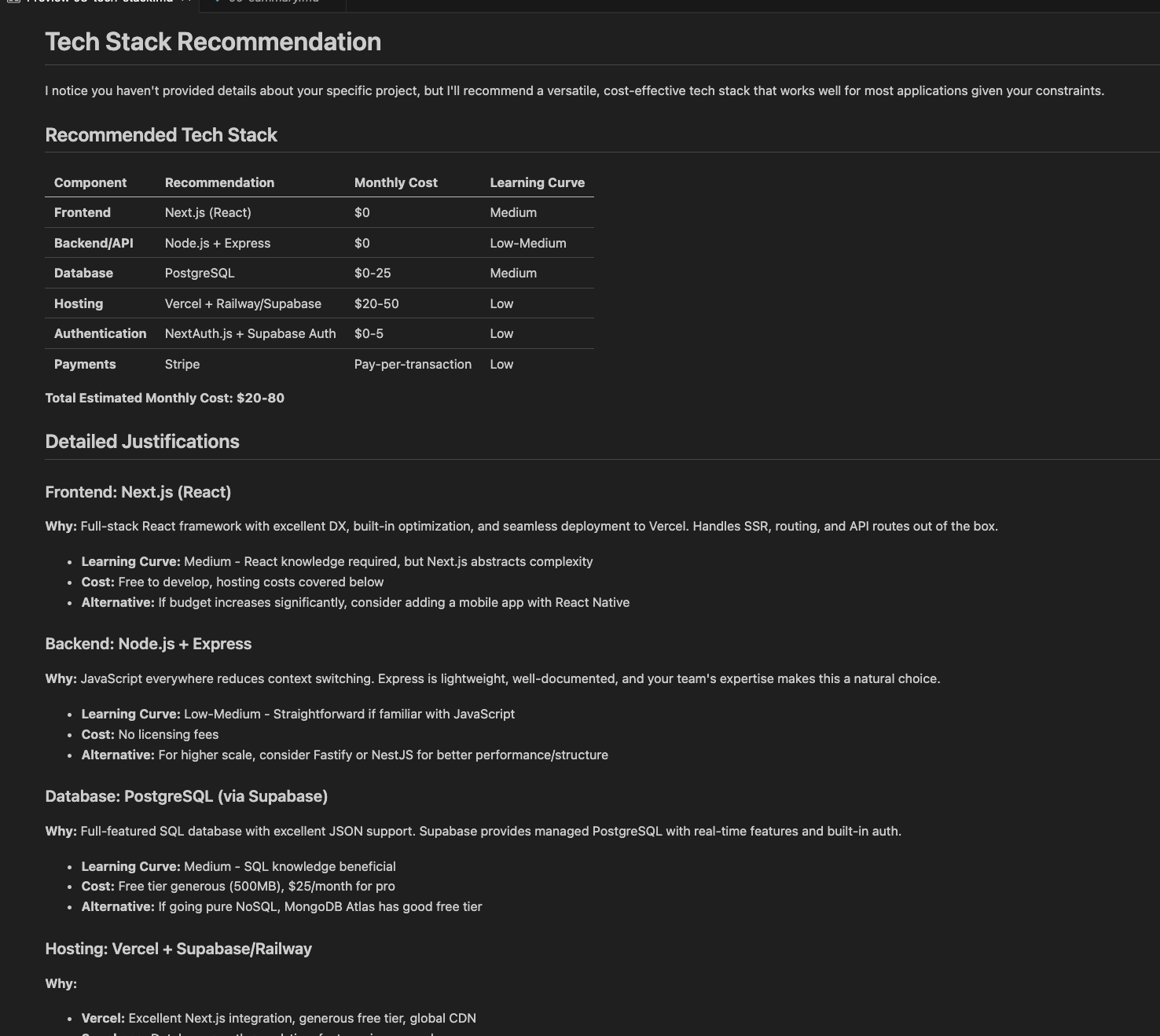

- Tech Stack Advisor: recommends frameworks, databases, and hosting based on your requirements

- Ready-to-use SDK integration code

Prerequisites

- LaunchDarkly account (free trial works)

- Claude Code, Cursor, VS Code, or Windsurf installed

- LaunchDarkly API access token

Expected outcome

After 5–10 minutes, you’ll have a working AI Config project in LaunchDarkly and the SDK integration to connect it to LangGraph or any other framework.

Start your free trial

Want to follow along? Start your 14-day free trial of LaunchDarkly. No credit card required.

30-second quickstart

If you just want to get started, here’s the fastest path:

1. Install skills:

Or ask your editor: “Download and install skills from https://github.com/launchdarkly/agent-skills”

Restart your editor after installing.

2. Set your token:

3. Build something:

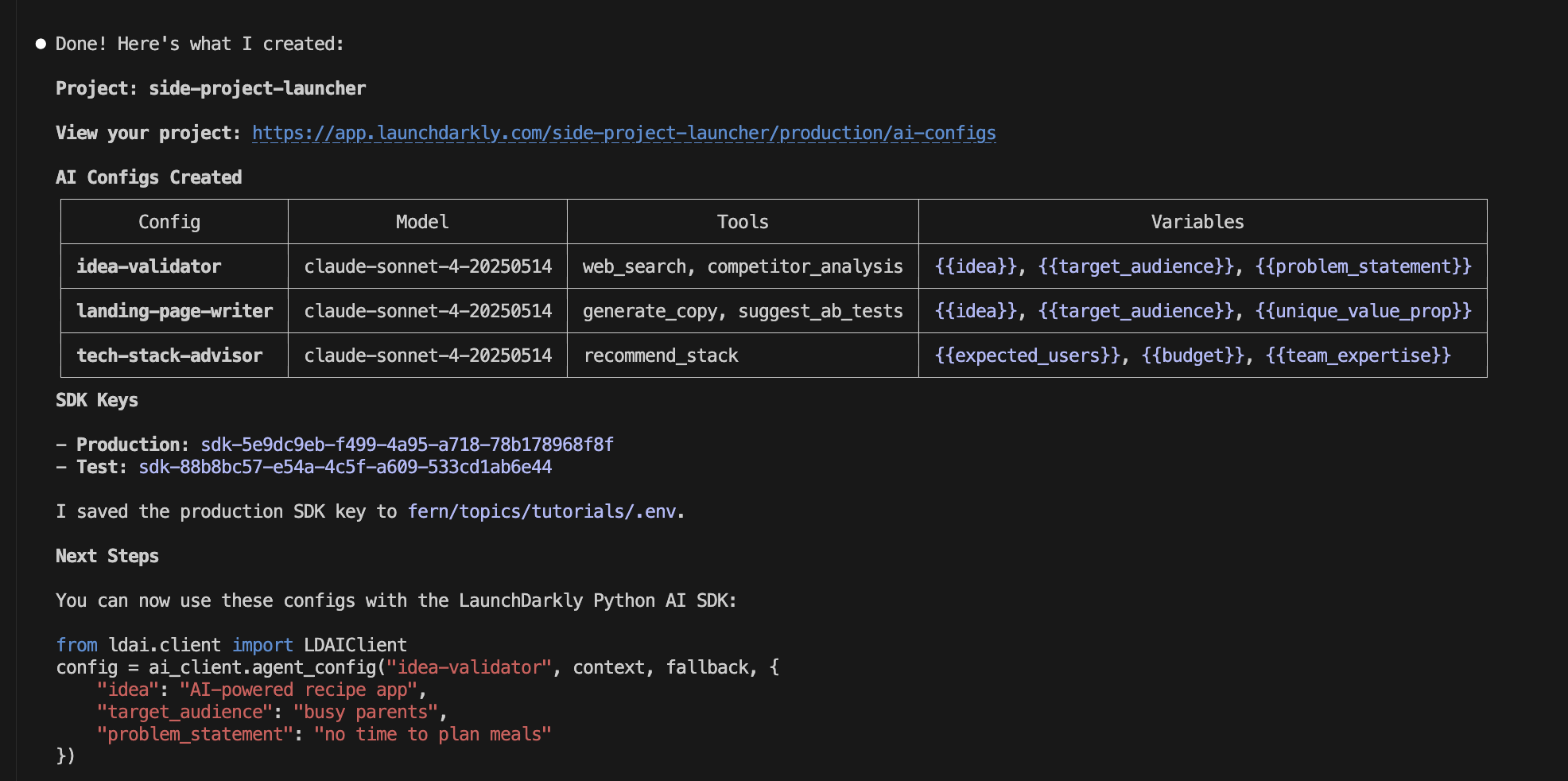

Expected output: The assistant creates everything and gives you links like:

That’s it. Three agents, three tools, full targeting and metrics. Done. The rest of this tutorial walks through each step if you want the details.

Who should use Agent Skills

Agent Skills are for anyone who wants to set up LaunchDarkly AI Configs without learning the API or clicking through the UI. You describe what you need in natural language, and your AI coding assistant handles the implementation.

Install Agent Skills in Claude Code, Cursor, VS Code, or Windsurf

Agent Skills work with any editor that supports the Agent Skills Open Standard.

Step 1: Install the skills

You have two options:

Option A: Use skills.sh (recommended)

skills.sh is an open directory for agent skills. Install LaunchDarkly skills with one command:

Option B: Ask your AI assistant

Open your editor and ask: “Download and install skills from https://github.com/launchdarkly/agent-skills”

Both methods install the same skills.

Step 2: Restart your editor

Close and reopen your editor. The skills load on startup.

How to verify: Type /aiconfig in Claude Code. You should see autocomplete suggestions. In Cursor, ask “what LaunchDarkly skills do you have?” and the assistant should list them.

Step 3: Set your API token

Get your token from LaunchDarkly Authorization settings.

Required permissions: the built-in Writer role, or a custom role that allows createProject, createAIConfig, and createSegment.
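One way to confirm the token works before handing it to the assistant is to list your projects through the LaunchDarkly REST API. This is a minimal sketch, not part of the skills themselves. The environment variable name LAUNCHDARKLY_ACCESS_TOKEN and the helper names are assumptions for illustration; use whatever name your setup expects.

```python
import os
import urllib.error
import urllib.request

API_BASE = "https://app.launchdarkly.com/api/v2"


def read_token(var_name: str = "LAUNCHDARKLY_ACCESS_TOKEN") -> str:
    """Read the API token from the environment (variable name is an assumption)."""
    return os.environ.get(var_name, "")


def build_projects_request(token: str) -> urllib.request.Request:
    """Build a GET request that lists the projects visible to the token."""
    if not token:
        raise ValueError("no API token provided")
    # The REST API expects the raw token in the Authorization header.
    return urllib.request.Request(
        f"{API_BASE}/projects",
        headers={"Authorization": token},
        method="GET",
    )


def token_can_list_projects(token: str) -> bool:
    """Send the request; True when LaunchDarkly answers with HTTP 200."""
    try:
        with urllib.request.urlopen(build_projects_request(token), timeout=10) as response:
            return response.status == 200
    except urllib.error.HTTPError:
        # 401 or 403 responses land here: the token is invalid or lacks access.
        return False
```

Calling token_can_list_projects(read_token()) only proves read access. The create permissions listed above are exercised the first time the assistant builds something.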

Build a multi-agent project

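Now let’s build something real: a Side Project Launcher that helps you validate ideas, write landing pages, and pick the right tech stack. Tell the assistant what you want in plain language, for example the prompt from the introduction: “Create three agents: one that validates startup ideas, one that writes landing pages, and one that recommends tech stacks. Give each one the right tools and variables.”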

What the assistant creates

The assistant uses several skills automatically:

- aiconfig-projects: creates the LaunchDarkly project

- aiconfig-create: builds each agent configuration with variables

- aiconfig-tools: defines tools for function calling

Expected output:

The variables ({{idea}}, {{target_audience}}, etc.) get filled in at runtime when you call the SDK. That’s how each user gets personalized output.
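To make the substitution concrete, here is a small standalone illustration of the idea. The SDK does this for you, so you would not write it yourself. The function name, the sample instructions, and the rule of leaving unknown placeholders untouched are all assumptions made for this sketch.

```python
import re

# Matches placeholders such as {{idea}} or {{ target_audience }}.
_PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def fill_variables(template: str, variables: dict[str, str]) -> str:
    """Replace each {{name}} placeholder; unknown names are left untouched."""
    def replace(match: re.Match) -> str:
        name = match.group(1)
        return str(variables.get(name, match.group(0)))

    return _PLACEHOLDER.sub(replace, template)


instructions = "Validate the idea '{{idea}}' for {{target_audience}}."
print(fill_variables(instructions, {
    "idea": "a habit tracker for climbers",
    "target_audience": "indie gym owners",
}))
# prints: Validate the idea 'a habit tracker for climbers' for indie gym owners.
```

The same instructions stored once in LaunchDarkly can therefore produce a different prompt for every request, depending on the values you pass in.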

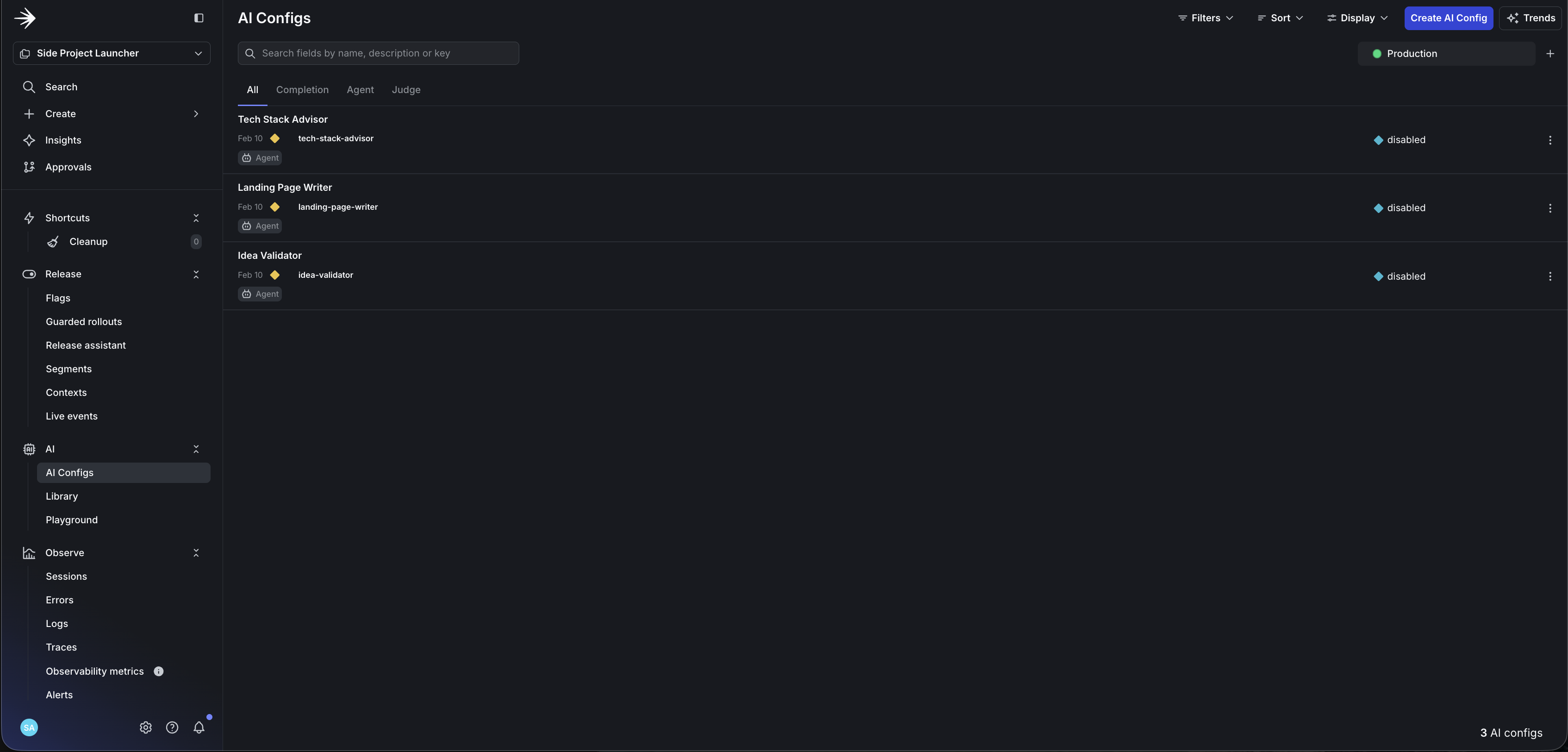

What it looks like in LaunchDarkly

After creation, your LaunchDarkly project contains:

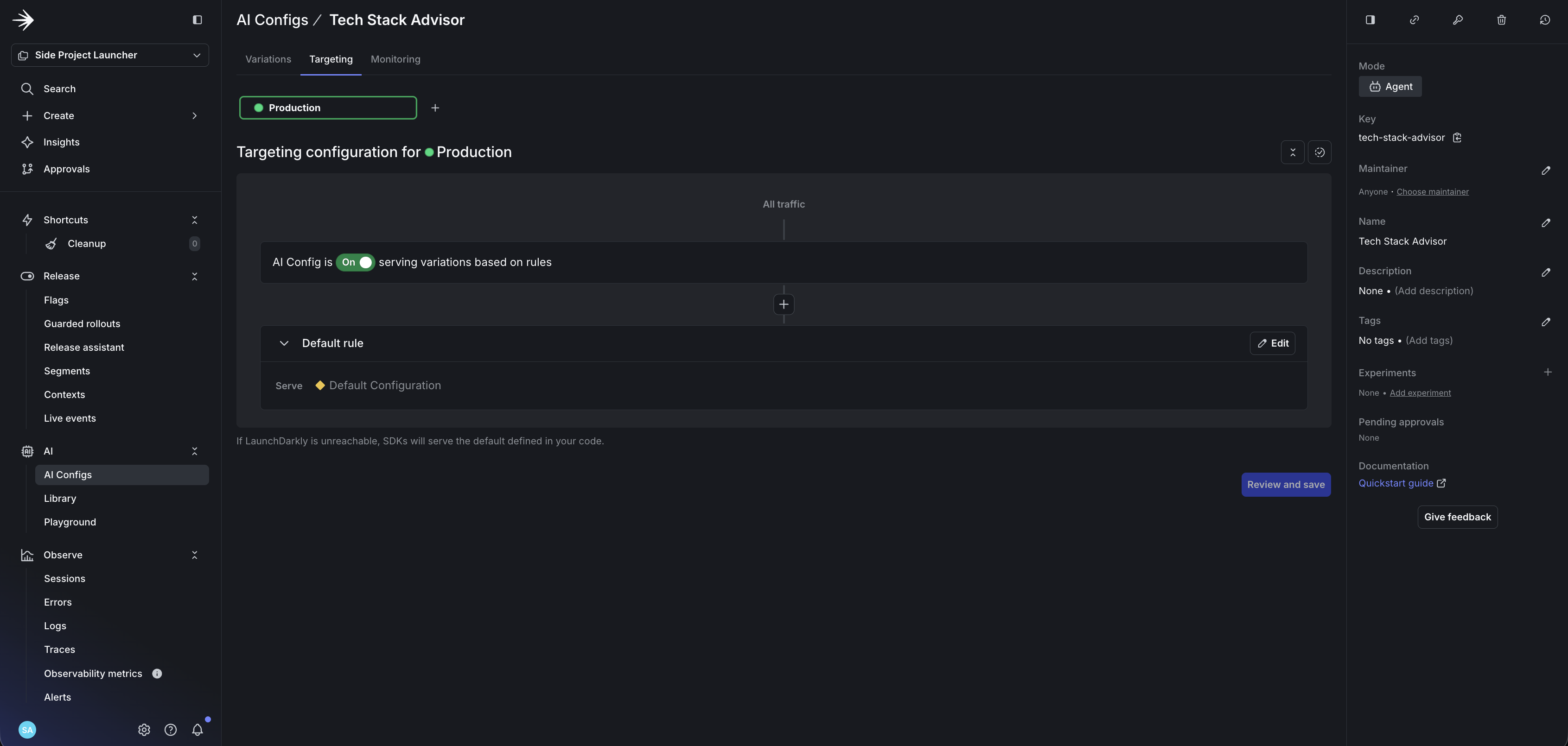

- 3 AI Configs with instructions, model settings, and variables

- 3 tools with parameter definitions ready for function calling

- Default targeting serving the configuration to all users

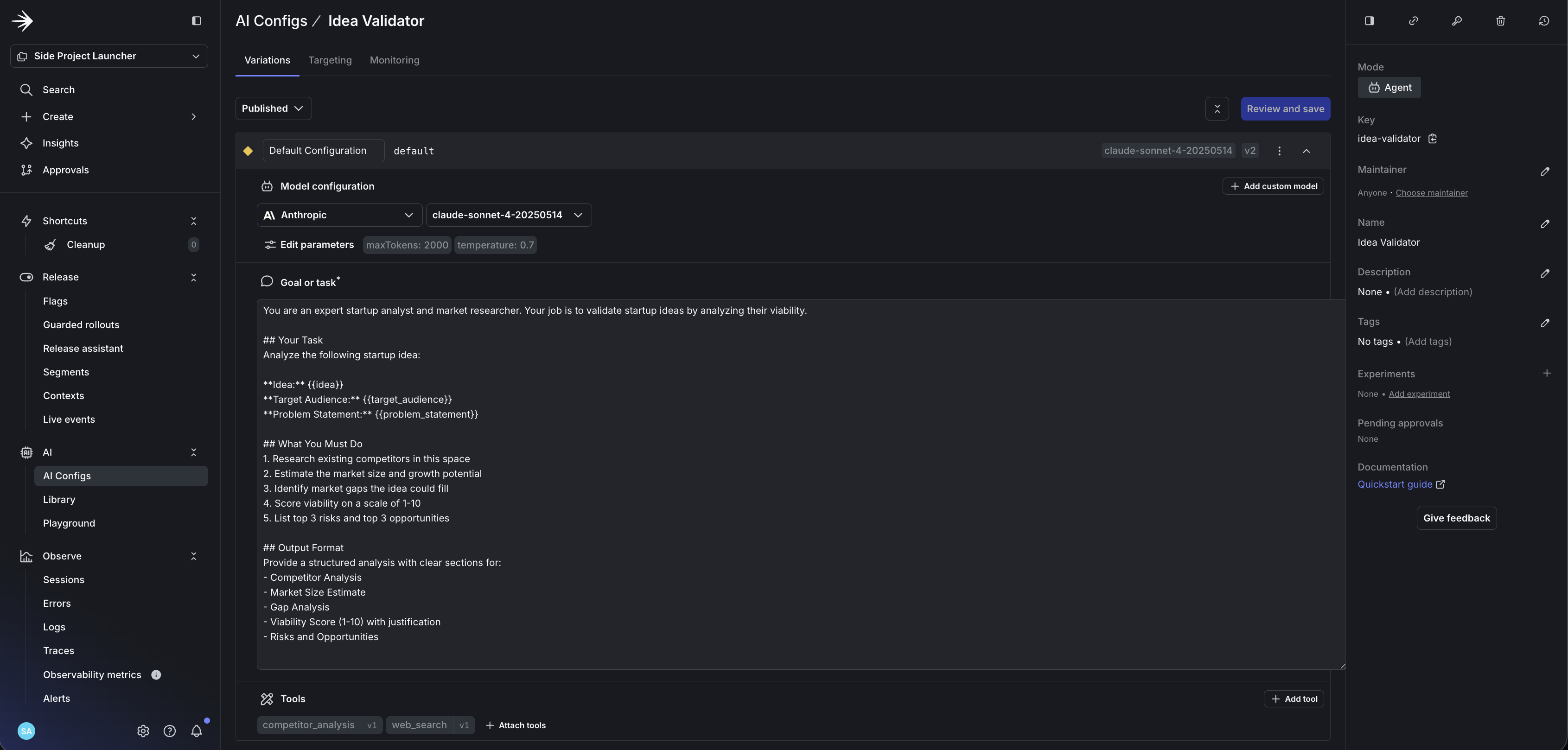

Each agent has its own configuration with instructions, variables, and tools. Here’s the idea-validator:

The landing-page-writer and tech-stack-advisor follow the same pattern with their own instructions and tools.

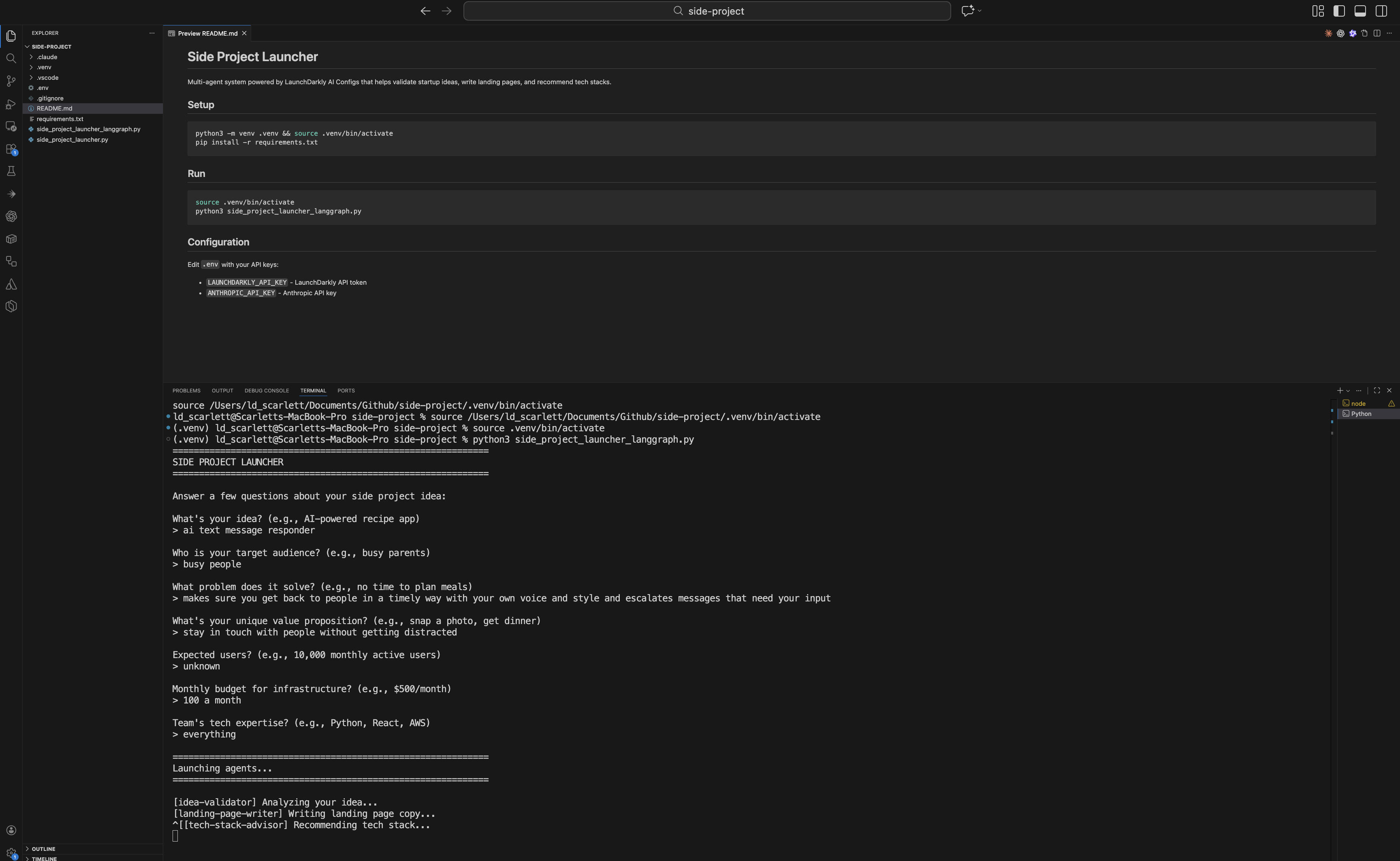

Run the Side Project Launcher

The full working code is available on GitHub: launchdarkly-labs/side-project-researcher

Clone it and run:

The app prompts you for your idea details. Each agent then runs in sequence, fetching its config from LaunchDarkly and generating output.

Connect to your framework

The AI Config stores your model, instructions, and tools. The SDK fetches the config and handles variable substitution automatically.

Initialize the SDK
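A minimal sketch of initialization with the Python server-side SDK and the AI SDK (the launchdarkly-server-sdk and launchdarkly-server-sdk-ai packages). The environment variable name LAUNCHDARKLY_SDK_KEY and the helper names are assumptions. Note that the SDK key used here is a different credential from the API access token you gave the assistant earlier.

```python
import os


def init_ai_client(sdk_key: str):
    """Create a LaunchDarkly AI client from a server-side SDK key."""
    if not sdk_key:
        raise ValueError("LaunchDarkly SDK key is missing")

    # Imported lazily so the key check above works without the packages.
    import ldclient
    from ldclient.config import Config
    from ldai.client import LDAIClient

    # Configure the shared base client, then wrap it with the AI client.
    ldclient.set_config(Config(sdk_key))
    return LDAIClient(ldclient.get())


def init_from_env(var_name: str = "LAUNCHDARKLY_SDK_KEY"):
    """Read the SDK key from the environment (variable name is an assumption)."""
    return init_ai_client(os.environ.get(var_name, ""))
```

Create the client once at startup and reuse it for every request.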

Fetch agent configs
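A hedged sketch of fetching all three agents in one pass. It assumes ai_client.agent_config() accepts a config key, an evaluation context, a default value, and a dict of variables, in that order; check the Python AI SDK reference for the exact signature in your version. It also assumes the agent names used in this tutorial are the AI Config keys.

```python
from typing import Any

# Agent names used in this tutorial; assumed to match the AI Config keys.
AGENT_KEYS = ("idea-validator", "landing-page-writer", "tech-stack-advisor")


def fetch_agents(ai_client: Any, context: Any, default: Any,
                 variables: dict[str, str]) -> dict[str, Any]:
    """Fetch every agent's config, passing the same runtime variables to each."""
    return {
        # Assumed argument order: key, context, default value, variables.
        key: ai_client.agent_config(key, context, default, variables)
        for key in AGENT_KEYS
    }
```

The default value is what your app falls back to if LaunchDarkly cannot be reached, so a disabled or conservative default is usually the safer choice.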

Wire it to LangGraph

LangGraph orchestrates multi-agent workflows as a graph of nodes, but you can use any orchestrator: CrewAI, LlamaIndex, Bedrock AgentCore, or custom code. To compare options, read Compare AI orchestrators.

By wiring AI Configs to each node, your agents fetch their model, instructions, and tools dynamically from LaunchDarkly. This lets you swap models, update prompts, or disable agents without touching code or redeploying.

Each agent becomes a node in your graph:
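A minimal sketch of that wiring, assuming a sequential flow from validator to writer to advisor. The state fields, node names, and the run_agent callable are hypothetical: run_agent stands in for your own function that fetches the agent's AI Config and calls the model with it.

```python
from typing import Callable, TypedDict


class LauncherState(TypedDict, total=False):
    """Shared state passed between nodes (field names are illustrative)."""
    idea: str
    validation: str
    landing_page: str
    tech_stack: str


def make_node(agent_key: str, output_field: str,
              run_agent: Callable[[str, LauncherState], str]):
    """Wrap one agent as a graph node that writes a single state field."""
    def node(state: LauncherState) -> dict[str, str]:
        return {output_field: run_agent(agent_key, state)}

    return node


def build_graph(run_agent: Callable[[str, LauncherState], str]):
    """Chain the three agents in sequence and compile the graph."""
    # Imported lazily so make_node can be tested without LangGraph installed.
    from langgraph.graph import END, START, StateGraph

    graph = StateGraph(LauncherState)
    graph.add_node("idea_validator",
                   make_node("idea-validator", "validation", run_agent))
    graph.add_node("landing_page_writer",
                   make_node("landing-page-writer", "landing_page", run_agent))
    graph.add_node("tech_stack_advisor",
                   make_node("tech-stack-advisor", "tech_stack", run_agent))
    graph.add_edge(START, "idea_validator")
    graph.add_edge("idea_validator", "landing_page_writer")
    graph.add_edge("landing_page_writer", "tech_stack_advisor")
    graph.add_edge("tech_stack_advisor", END)
    return graph.compile()
```

With this shape, build_graph(run_agent).invoke({"idea": "..."}) returns the final state with all three outputs. Because each node looks up its config by key at run time, changing a model or prompt in LaunchDarkly needs no change to the graph.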

To see a full example running across LangGraph, Strands, and OpenAI Swarm, read Compare AI orchestrators.

What you can do next

Once your agents are in LaunchDarkly:

- A/B test models: split traffic between Claude and GPT to see which performs better

- Target by segment: premium users get one model, free users get another

- Kill switch: disable a misbehaving agent instantly from the UI

- Track costs: monitor tokens and latency per variation
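For the kill switch to have any effect, your code has to honor it. The sketch below assumes the config object returned by the SDK exposes a boolean enabled attribute. The helper name and the fallback message are hypothetical.

```python
from types import SimpleNamespace
from typing import Any, Callable


def run_if_enabled(agent_config: Any, run: Callable[[Any], str],
                   fallback: str) -> str:
    """Call the agent only when its AI Config is switched on."""
    # Treat a missing attribute as disabled, which is the safe default.
    if not getattr(agent_config, "enabled", False):
        return fallback
    return run(agent_config)


# Stand-in objects for illustration; real configs come from the SDK.
disabled = SimpleNamespace(enabled=False)
print(run_if_enabled(disabled, lambda cfg: "agent output",
                     "This agent is temporarily unavailable."))
# prints: This agent is temporarily unavailable.
```

Checking the flag on every request means a toggle in the UI takes effect on the next call rather than the next deploy.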

To learn more about targeting and experimentation, read AI Configs Best Practices.

FAQ

Do I need Claude Code, or does this work in Cursor/Windsurf?

Agent Skills work in any editor that supports the Agent Skills Open Standard. This includes Claude Code, Cursor, VS Code, Windsurf, and others. The installation process is the same.

What’s the difference between Agent Skills and the MCP server?

Both give your AI assistant access to LaunchDarkly. Agent Skills are text-based playbooks that teach the assistant workflows. The MCP server exposes LaunchDarkly’s API as tools. You can use either or both.

What permissions does my API token need?

At minimum: createProject, createAIConfig, createSegment. The easiest option is a token with the writer built-in role.

Where do I see the created AI Configs?

In the LaunchDarkly UI: go to your project, then AI Configs in the left sidebar. Each config shows its instructions, model, tools, and targeting rules.

How do I delete or reset generated configs?

In the LaunchDarkly UI, open the AI Config and click Archive (or Delete if available). Or ask the assistant: “Delete the AI Config called idea-validator in my Side Project Launcher project.”

Can I use this with frameworks other than LangGraph?

Yes. The SDK returns model name, instructions, and tools as data. You wire that into whatever framework you use: CrewAI, LlamaIndex, Bedrock AgentCore, or custom code.

Does this work for completion mode (chat) or just agent mode?

Both. Use ai_client.completion_config() for completion mode (chat with message arrays) or ai_client.agent_config() for agent mode (instructions for multi-step workflows). To learn more, read Agent mode vs completion mode.

Next steps

- Read the Python AI SDK Reference for detailed SDK usage

- Try building a data extraction pipeline to deploy AI Configs with Vercel