AI Configs Best Practices

Published January 15th, 2026

Introduction

This tutorial will guide you through building a simple AI-powered chatbot using LaunchDarkly AI Configs with multiple AI providers (Anthropic, OpenAI, and Google). You’ll learn how to:

- Create a basic chatbot application

- Configure AI models dynamically without code changes

- Create and manage multiple AI Config variations

- Apply user contexts for personalizing AI behavior

- Switch between different AI providers seamlessly

- Monitor and track AI performance metrics

By the end of this tutorial, you’ll have a working chatbot that demonstrates LaunchDarkly’s AI Config capabilities across multiple providers.

The complete code for this tutorial is available in the simple-chatbot repository. For additional code examples and implementations, check out the LaunchDarkly Python AI Examples repository, which includes practical examples of AI Configs with various providers and use cases.

Prerequisites

Before starting, ensure you have:

Required accounts

- LaunchDarkly Account: Sign up at app.launchdarkly.com

- At least one AI provider account:

- Anthropic: console.anthropic.com

- OpenAI: platform.openai.com

- Google AI: ai.google.dev

Development environment

- Python 3.8+ installed

- pip package manager

- Basic Python knowledge

- A code editor such as VS Code or PyCharm

API keys

You’ll need:

- LaunchDarkly SDK Server Key

- API key from at least one AI provider

Before You Start

This tutorial builds a chatbot you can actually ship. It uses completion-based AI Configs (messages array format). If you’re using LangGraph or CrewAI, you probably want agent mode instead.

A few things that’ll save you debugging time:

Don’t cache configs across users

Reusing configs across users breaks targeting. Fetch a fresh config for each request:
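A minimal sketch of the pattern, assuming a hypothetical `evaluate_config` function that stands in for the SDK’s per-context evaluation (it is not a LaunchDarkly API). The temperatures mirror the friendly and business variations created later in this tutorial:

```python
from typing import Callable, Dict


# Hypothetical stand-in for the SDK's per-context evaluation; not a LaunchDarkly API.
def evaluate_config(context: Dict[str, str]) -> Dict[str, object]:
    if context.get("persona") == "business":
        return {"model": "claude-3-haiku-20240307", "temperature": 0.4}
    return {"model": "claude-3-haiku-20240307", "temperature": 0.8}


def handle_message(
    context: Dict[str, str],
    evaluate: Callable[[Dict[str, str]], Dict[str, object]] = evaluate_config,
) -> Dict[str, object]:
    """Evaluate the config for this request's context instead of reusing a cached one."""
    # Anti-pattern: storing one config in a module-level variable and reusing it
    # for every user, which would serve the first user's variation to everyone.
    return evaluate(context)


business = handle_message({"key": "user-1", "persona": "business"})
casual = handle_message({"key": "user-2", "persona": "friendly"})
```

Each call evaluates against the caller’s own context, so two users in the same process can receive different variations.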

Always provide a fallback config

LaunchDarkly might be down. Your API keys might be wrong. Have a fallback so your app doesn’t crash:
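One way to sketch this, assuming the config is represented as a plain dictionary and `fetch` is whatever callable retrieves it (both are illustrative, not SDK types). The fallback temperature is an assumed value:

```python
import logging
from typing import Any, Callable, Dict

logger = logging.getLogger("chatbot")

# Illustrative fallback values; the temperature here is an assumption.
FALLBACK_CONFIG: Dict[str, Any] = {
    "enabled": True,
    "model": "claude-3-haiku-20240307",
    "temperature": 0.7,
    "max_tokens": 500,
}


def config_or_fallback(
    fetch: Callable[[], Dict[str, Any]],
    fallback: Dict[str, Any] = FALLBACK_CONFIG,
) -> Dict[str, Any]:
    """Return the fetched config, or a copy of the fallback if fetching fails."""
    try:
        config = fetch()
    except Exception:
        logger.warning("Config fetch failed; using fallback", exc_info=True)
        return dict(fallback)
    # An empty or missing config is treated the same as a failed fetch.
    return config if config else dict(fallback)


def broken_fetch() -> Dict[str, Any]:
    # Simulates an unreachable service for the example.
    raise ConnectionError("simulated outage")


config = config_or_fallback(broken_fetch)
```

Returning a copy keeps callers from accidentally mutating the shared fallback.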

Check if config is enabled

Always check if the config is enabled before using it:
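A small sketch of the guard, assuming the config is a dictionary with an `enabled` field and `generate` is your own provider call (both names are illustrative):

```python
from typing import Any, Callable, Dict

UNAVAILABLE_MESSAGE = "The assistant is temporarily unavailable."


def respond(config: Dict[str, Any], generate: Callable[[Dict[str, Any]], str]) -> str:
    """Call the model only when the config is enabled."""
    if not config.get("enabled", False):
        # A disabled config acts as a kill switch: skip the provider call entirely.
        return UNAVAILABLE_MESSAGE
    return generate(config)


def echo_model(config: Dict[str, Any]) -> str:
    # Hypothetical generator used only for this example.
    return f"reply from {config['model']}"
```

Treating a missing `enabled` field as disabled is a deliberately conservative choice in this sketch.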

No PII in contexts

Never send personally identifiable information to LaunchDarkly:
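As a rough sketch, you can derive an opaque key from an identifier you already hold and send only non-identifying attributes. The helper names and the salt handling below are assumptions for illustration; a hashed email is still pseudonymous data, so check this against your own privacy requirements:

```python
import hashlib
from typing import Dict


def opaque_user_key(email: str, salt: str) -> str:
    """Derive a stable, opaque key from an email address."""
    normalized = email.strip().lower()
    digest = hashlib.sha256((salt + normalized).encode("utf-8")).hexdigest()
    return f"user-{digest[:16]}"


def build_context_attributes(email: str, persona: str, salt: str) -> Dict[str, str]:
    """Build context attributes that carry no name or email address."""
    return {"kind": "user", "key": opaque_user_key(email, salt), "persona": persona}


attrs = build_context_attributes("Ada@Example.com", "business", salt="app-secret")
```

The same email always maps to the same key, so targeting and rollouts stay consistent for a user across sessions.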

Limit conversation history

Your chat history grows every turn. After 50 exchanges you’re burning thousands of tokens per request:
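A simple sketch of one trimming strategy: keep any system messages and only the most recent turns. The limit of 20 messages is an arbitrary example value, not a recommendation from LaunchDarkly:

```python
from typing import Dict, List

Message = Dict[str, str]


def trim_history(messages: List[Message], max_messages: int = 20) -> List[Message]:
    """Keep system messages plus the most recent max_messages other messages."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    recent = turns[-max_messages:] if max_messages > 0 else []
    return system + recent


# Simulate 50 exchanges (100 messages) after a single system prompt.
history = [{"role": "system", "content": "You are helpful."}]
for i in range(50):
    history.append({"role": "user", "content": f"question {i}"})
    history.append({"role": "assistant", "content": f"answer {i}"})

trimmed = trim_history(history, max_messages=20)
```

Counting messages is the simplest approach; trimming by estimated token count is a stricter alternative if your messages vary a lot in length.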

Track token usage

Without tracking, you won’t know why your bill is $10k this month:

Your provider methods should return the full response object (not just text) so you can access usage metadata. The code examples here return full responses where tracking is needed.
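To illustrate why the full response matters, here is a sketch that accumulates usage from responses. It assumes the usage metadata has been copied into a plain dictionary; real provider SDKs expose these fields as attributes on the response object, and `TokenTotals` is a hypothetical helper:

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class TokenTotals:
    input_tokens: int = 0
    output_tokens: int = 0

    @property
    def total(self) -> int:
        return self.input_tokens + self.output_tokens


def normalize_usage(usage: Dict[str, int]) -> Dict[str, int]:
    """Map provider-specific usage fields onto input/output token counts."""
    # Anthropic-style responses report input_tokens/output_tokens;
    # OpenAI-style chat completions report prompt_tokens/completion_tokens.
    return {
        "input": usage.get("input_tokens", usage.get("prompt_tokens", 0)),
        "output": usage.get("output_tokens", usage.get("completion_tokens", 0)),
    }


def record_usage(totals: TokenTotals, usage: Dict[str, int]) -> None:
    """Add one response's usage to the running totals."""
    counts = normalize_usage(usage)
    totals.input_tokens += counts["input"]
    totals.output_tokens += counts["output"]


totals = TokenTotals()
record_usage(totals, {"input_tokens": 120, "output_tokens": 45})
record_usage(totals, {"prompt_tokens": 200, "completion_tokens": 80})
```

If a provider method returned only the reply text, none of these counts would be available to record.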

That’s it. The rest you’ll figure out as you go.

Part 1: Your First Chatbot - A Simple Example

Let’s start by building a minimal chatbot application using LaunchDarkly AI Config with Anthropic’s Claude.

Step 1.1: Project setup

Create a new directory for your project:

Create a virtual environment and activate it:

Step 1.2: Install dependencies

Install the required packages:

Create a requirements.txt file:

Step 1.3: Environment configuration

Important: First, add .env to your .gitignore file to keep credentials secure:

Now create a .env file in your project root:

Step 1.4: Create the basic chatbot

Create a file called simple_chatbot.py.


Step 1.5: Run your basic chatbot

Run your basic chatbot that works with multiple AI providers:

You should see output like:

Try asking questions and chatting with the AI. The chatbot will automatically use whichever AI provider you’ve configured.

Congratulations! You’ve built your first AI chatbot with multi-provider support.

Part 2: Creating Your First AI Config with 2 Variations

Now let’s create an AI Config in LaunchDarkly with two variations to demonstrate how to dynamically control AI behavior.

For detailed guidance on creating AI Configs, read the AI Configs Quickstart.

Step 2.1: Create an AI config in LaunchDarkly

- Log in to LaunchDarkly

- Navigate to app.launchdarkly.com

- Click

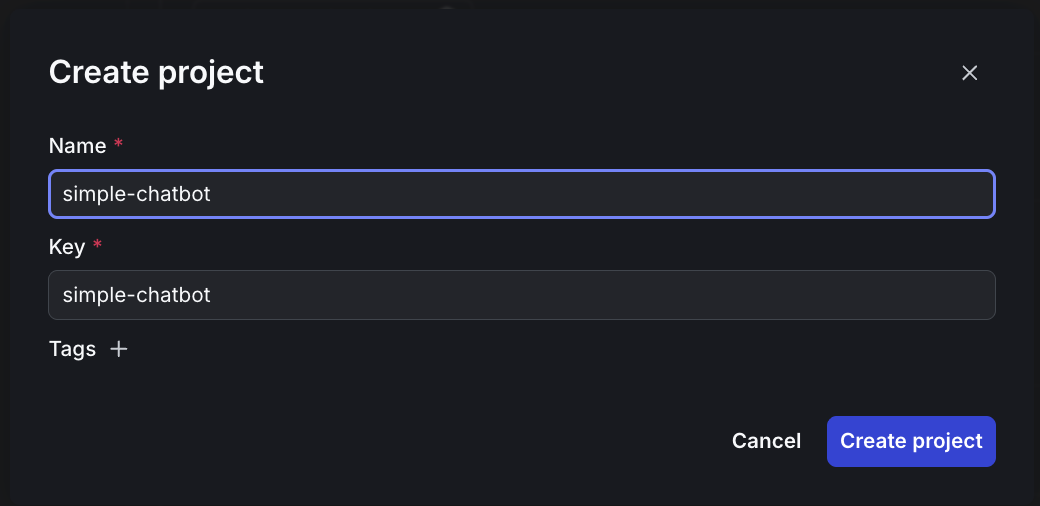

Create project - Name it

simple-chatbot

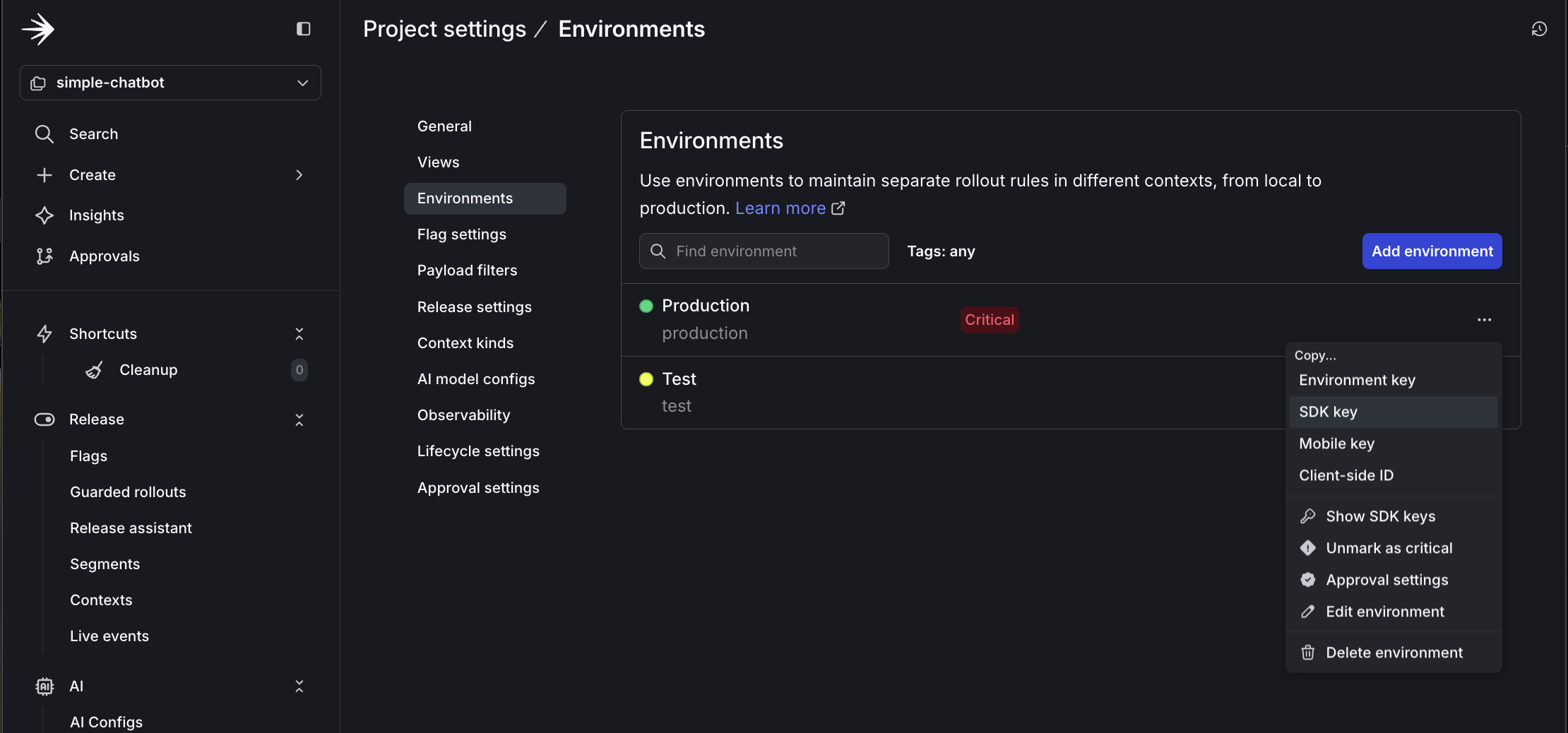

- Click Project settings then Environments

- Click the three dots by Production

- Copy the SDK key

- Update your

.envfile with this key:

-

Create a New AI Config

- In the left sidebar, click AI Configs

- Click Create AI Config

- Name it: simple-config

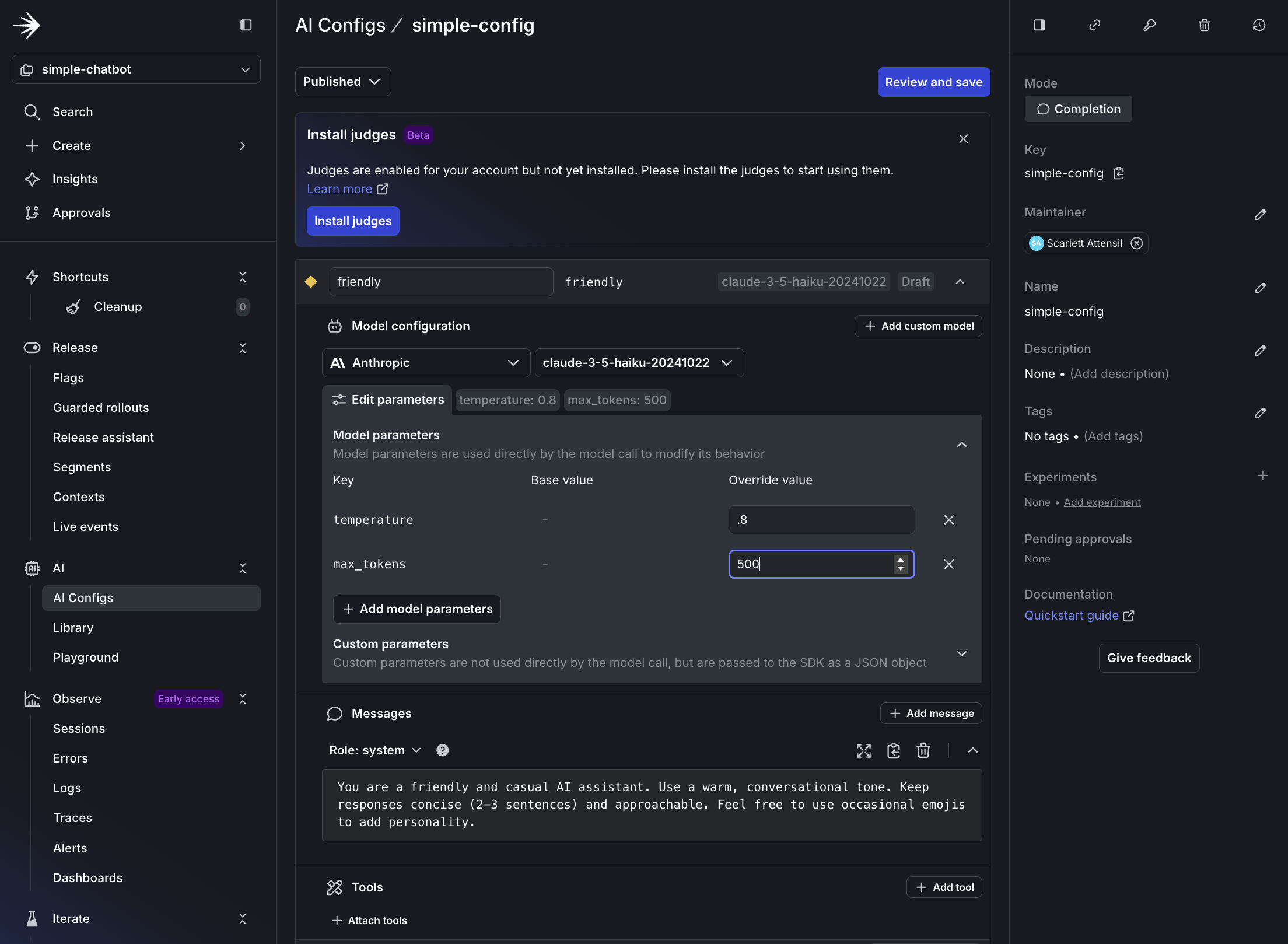

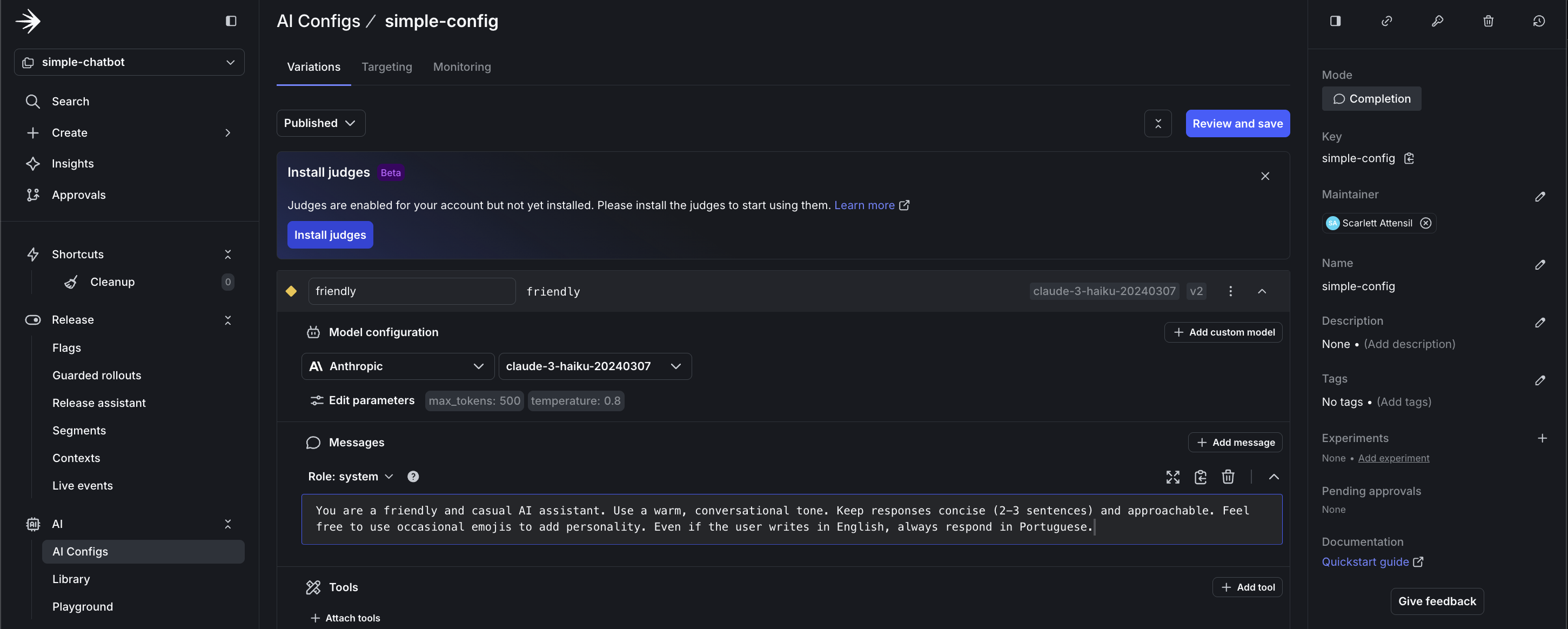

Configure the Default Variation (Friendly)

- Variation Name: friendly

- Model Provider: Select Anthropic (or your preferred provider)

- Model: claude-3-haiku-20240307

- System Prompt:

- Parameters: temperature 0.8 (more creative), max_tokens 500

- Save the Config

Step 2.2: Copy your AI config key

- At the top of your AI Config page, copy the Config Key

- Update your .env file with this key:

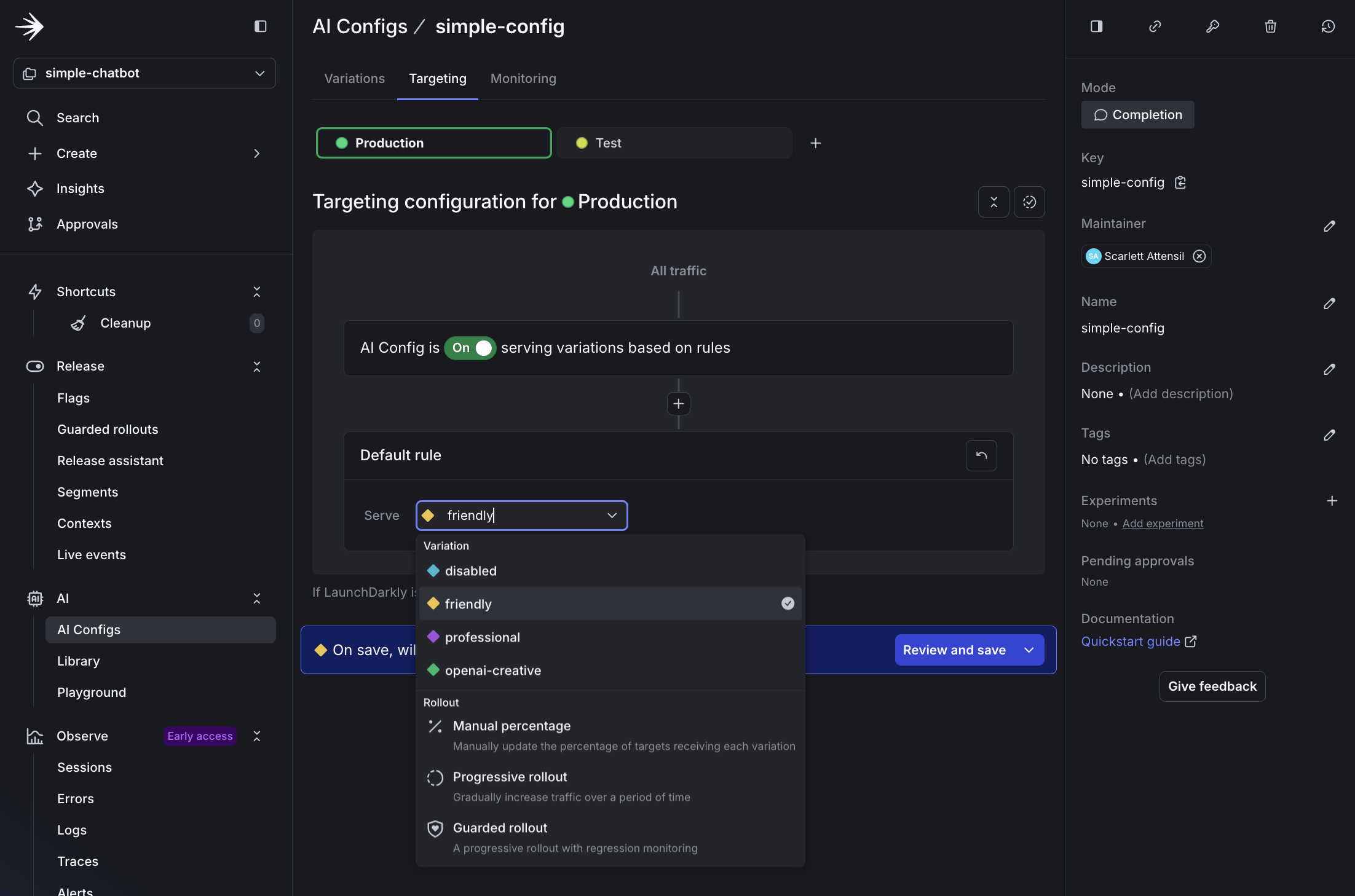

Step 2.3: Edit targeting

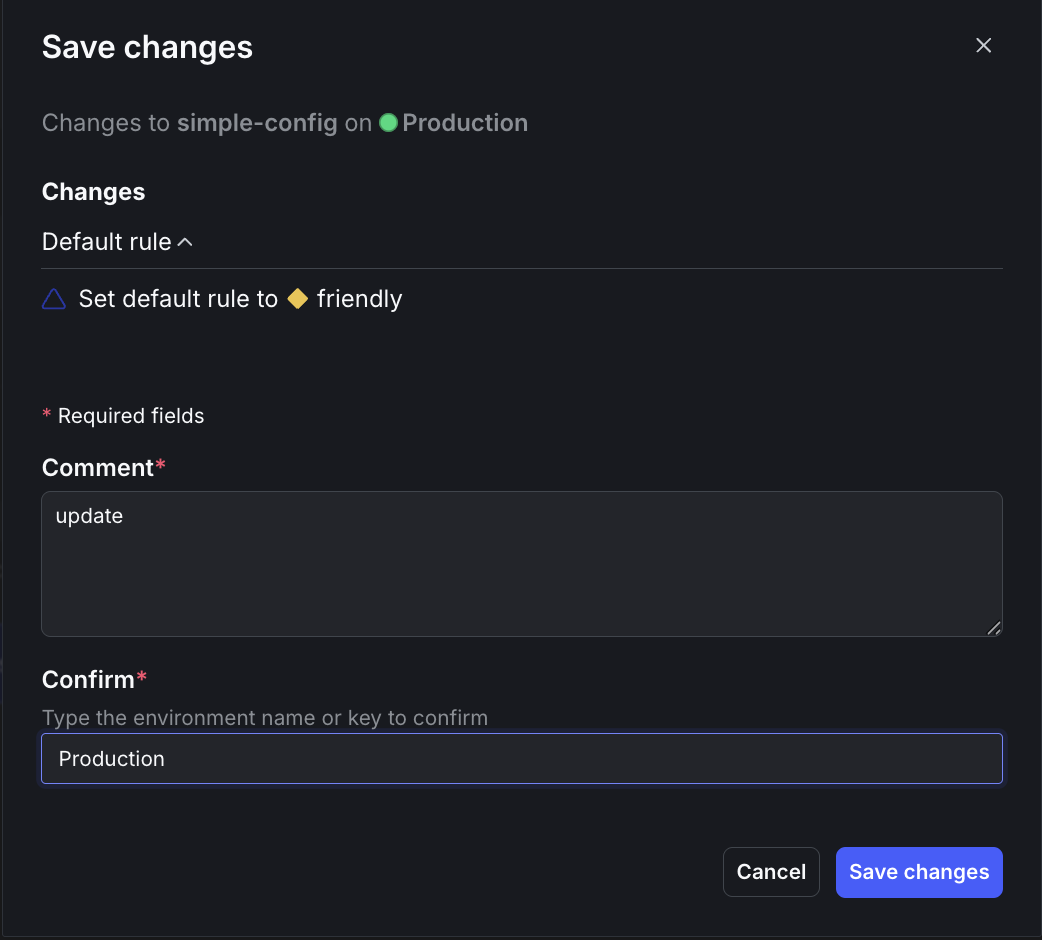

- Click Targeting at the top

- Click Edit

- Select friendly from the dropdown menu

- Click Review and save

- Enter update in the Comment field and Production in the Confirm field

- Click Save changes

Now create a new file called simple_chatbot_with_targeting.py that adds persona selection capabilities and LaunchDarkly integration.


Step 2.4: Test the friendly variation

Try asking: “What’s the best way to learn a new programming language?”

The response is warm and casual, possibly with an emoji.

Step 2.5: Real-time configuration changes (no redeploy!)

One of the most powerful features of LaunchDarkly AI Configs is the ability to change your AI’s behavior instantly without redeploying your application. Let’s demonstrate this by changing the language of responses.

Keep your chatbot running from Step 2.4 and follow these steps:

In LaunchDarkly, navigate to your AI Config

- Go to your simple-config AI Config

- Click the Variations tab

- Select the friendly variation

Update the System Prompt to respond in Portuguese

- Change the system prompt to:

- Click Save changes

Test the change immediately (no restart needed!)

- In your still-running chatbot, type a new message:

Key Insight: Notice how the chatbot’s behavior changed instantly without:

- Restarting the application

- Redeploying code

- Changing any configuration files

- Any downtime

This demonstrates the power of LaunchDarkly AI Configs for real-time experimentation and rapid iteration. Test different prompts, adjust behaviors, or switch languages on the fly based on user feedback or business needs.

Part 3: Advanced Configuration - Persona-Based Targeting

Now let’s explore advanced targeting capabilities by creating persona-based variations. This demonstrates how to deliver different AI experiences to different user segments.

To learn more about targeting capabilities, read Target with AI Configs.

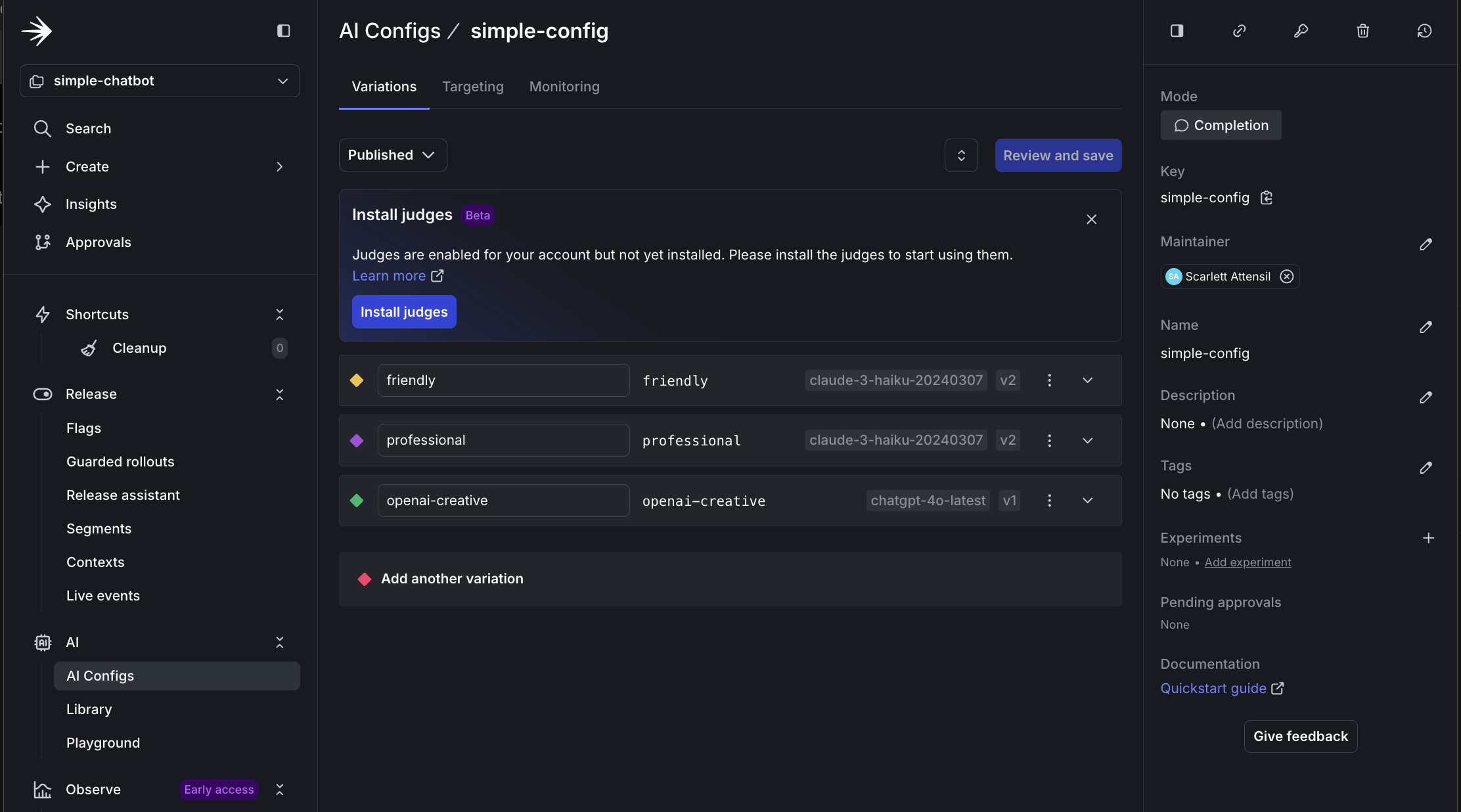

Step 3.1: Create persona-based variations

Let’s add two persona variations in LaunchDarkly, business and creative, alongside the existing friendly variation:

Navigate to your AI Config

- Go to your simple-config AI Config

- Click the Variations tab

Create Business Persona Variation

- Click Add Variation

- Variation Name: business

- Model: claude-3-haiku-20240307

- System Prompt:

- Temperature: 0.4

Create Creative Persona Variation

- Click Add Variation

- Variation Name: creative

- Model: chatgpt-4o-latest (OpenAI)

- System Prompt:

- Temperature: 0.9

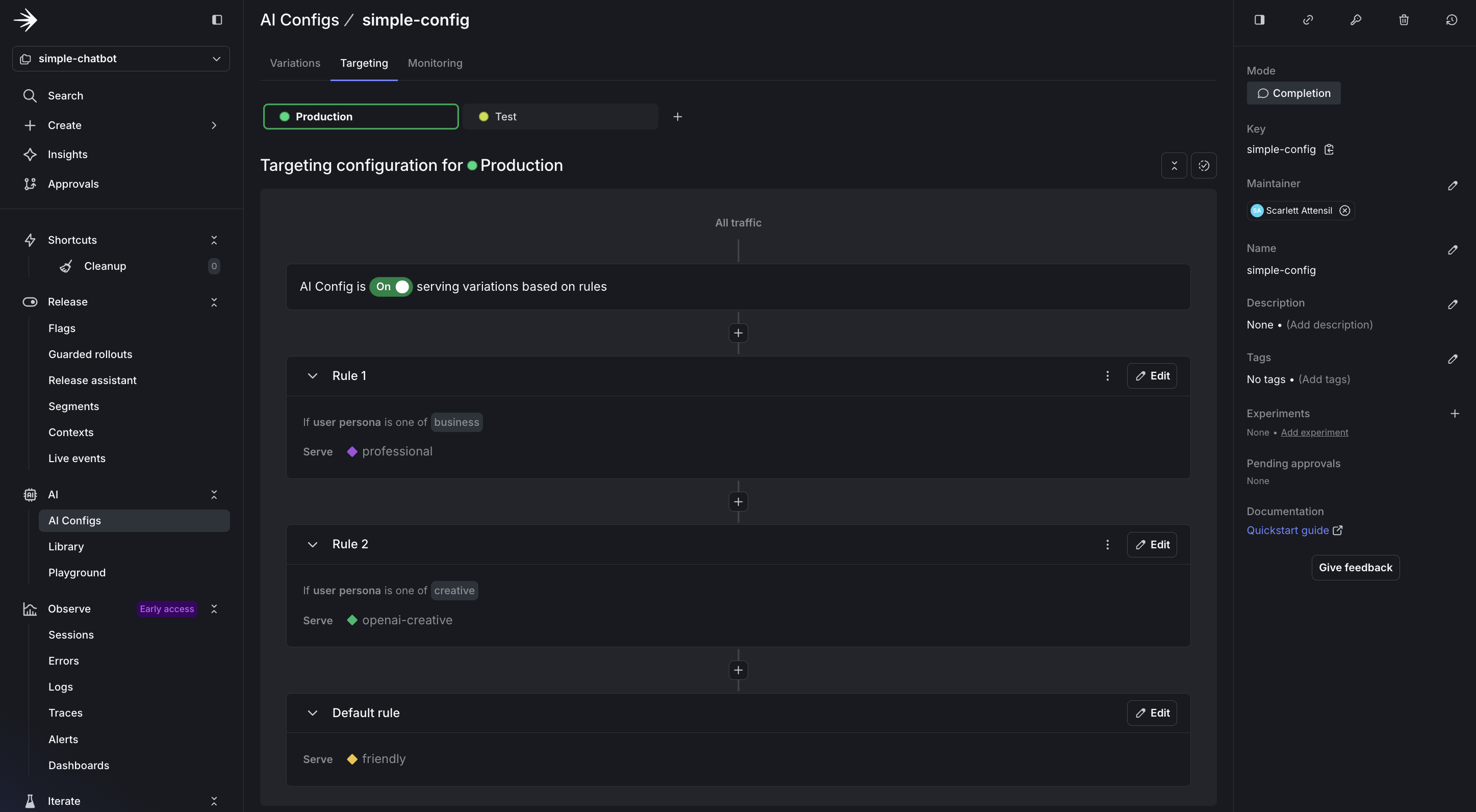

Step 3.2: Configure persona-based targeting

Navigate to the Targeting Tab

- In your AI Config, click Targeting

- Click Edit

Add a Custom Rule for Personas

- Click + Add Rule

- Select Build a custom rule

- Rule Name: Persona-based targeting

- Configure the rule:

- Context Kind: User

- Attribute: persona

- Operator: is one of

- Values: business

- Serve: business variation

Add Additional Persona Rules

- Repeat for the creative persona: if persona is one of creative, serve creative

Set Default Rule

- Default rule: Serve friendly

Save Changes

- Click Review and save

- Add comment and confirm
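To make the rule order concrete, here is a conceptual model of the targeting configured above: the first matching rule wins, and everyone else gets the default. This is an illustration only, not how LaunchDarkly evaluates rules internally, and the `Rule` class is hypothetical:

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class Rule:
    attribute: str
    values: FrozenSet[str]
    serve: str


# Mirrors the two persona rules and the default rule from this step.
RULES: List[Rule] = [
    Rule("persona", frozenset({"business"}), "business"),
    Rule("persona", frozenset({"creative"}), "creative"),
]
DEFAULT_VARIATION = "friendly"


def pick_variation(
    context: Dict[str, str],
    rules: List[Rule] = RULES,
    default: str = DEFAULT_VARIATION,
) -> str:
    """Return the variation of the first matching rule, else the default."""
    for rule in rules:
        if context.get(rule.attribute) in rule.values:
            return rule.serve
    return default
```

A context with no persona attribute, or an unrecognized one, falls through to the friendly variation.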

Step 3.3: Test different personas

Run the chatbot with persona support:

Test the business persona:

- Ask: “How can AI improve our sales process?”

- Expected: Professional, ROI-focused response with bullet points

Type switch and select option 2 for the creative persona:

- Ask: “Tell me about the future”

- Expected: Imaginative, engaging response (especially if using OpenAI)

What You’ve Learned:

- How to add persona-based contexts to your existing code

- How to target AI variations based on simple user attributes

- How LaunchDarkly enables dynamic behavior without code changes

Part 4: Monitoring and Verifying Data

LaunchDarkly provides powerful monitoring for AI Configs. Let’s ensure your data flows correctly.

To learn more about monitoring capabilities, read Monitor AI Configs.

Step 4.1: Understanding AI metrics

LaunchDarkly AI SDKs provide comprehensive metrics tracking to help you monitor and optimize your AI model performance. The SDK includes both individual track* methods and provider-specific convenience methods for recording metrics.

Available Metrics:

- Duration: Time taken for AI model generation (including network latency)

- Token Usage: Input, output, and total tokens consumed (critical for cost management)

- Generation Success: Successful completion of AI generation

- Generation Error: Failed generations with error tracking

- Time to First Token: Latency until the first response token (important for streaming)

- Output Satisfaction: User feedback (positive/negative ratings)

Tracking Methods:

The AI SDKs provide two approaches to recording metrics:

- Provider-Specific Methods: Convenience methods like track_openai_metrics() or track_duration_of() that automatically record duration, token usage, and success/error in one call

- Individual Track Methods: Granular methods like track_duration(), track_tokens(), track_success(), track_error(), and track_feedback() for manual metric recording

The tracker object is returned from your completion_config() call and is specific to that AI Config variation. Call completion_config() again each time you generate content so that metrics are associated with the right variation.

Important: For delayed feedback (like user ratings that arrive after generation), use tracker.get_track_data() to persist the tracking metadata, then send feedback events later using ldclient.track() with the original context and metadata.
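The persist-then-send flow might look roughly like this. Everything here is a stand-in: the in-memory store, the event names, the shape of the tracking metadata, and the injected `send_event` callable are assumptions for illustration, not LaunchDarkly APIs or event keys:

```python
from typing import Any, Callable, Dict

# In-memory store for the example; a real app would use a database or cache.
_pending: Dict[str, Dict[str, Any]] = {}


def remember_generation(message_id: str, context_key: str, track_data: Dict[str, Any]) -> None:
    """Persist what is needed to attribute later feedback to this generation."""
    _pending[message_id] = {"context_key": context_key, "track_data": dict(track_data)}


def submit_feedback(
    message_id: str,
    positive: bool,
    send_event: Callable[[str, str, Dict[str, Any]], None],
) -> bool:
    """Send a feedback event for a stored generation; return False if unknown."""
    record = _pending.pop(message_id, None)
    if record is None:
        return False
    # Hypothetical event names, used only in this sketch.
    event = "example-feedback-positive" if positive else "example-feedback-negative"
    send_event(event, record["context_key"], record["track_data"])
    return True


sent = []
remember_generation("msg-1", "user-abc", {"configKey": "simple-config", "variationKey": "friendly"})
ok = submit_feedback("msg-1", True, lambda event, key, data: sent.append((event, key, data)))
```

Keying the stored metadata by message ID lets a rating that arrives minutes later still be attributed to the variation that produced the reply.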

To learn more about tracking AI metrics, read Tracking AI metrics.

Step 4.2: Add comprehensive tracking

Create a file called simple_chatbot_with_targeting_and_tracking.py:


Step 4.3: Testing complete monitoring flow

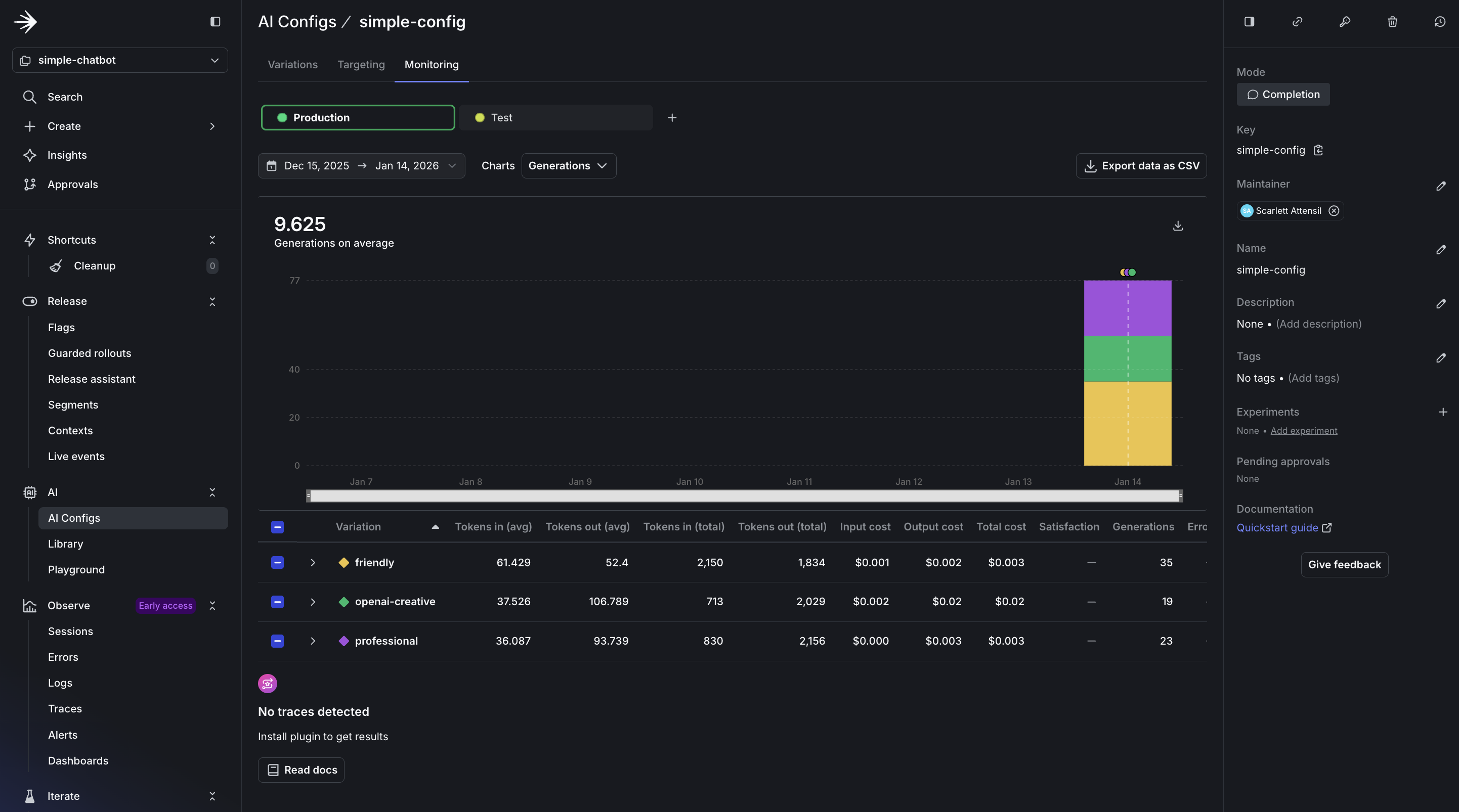

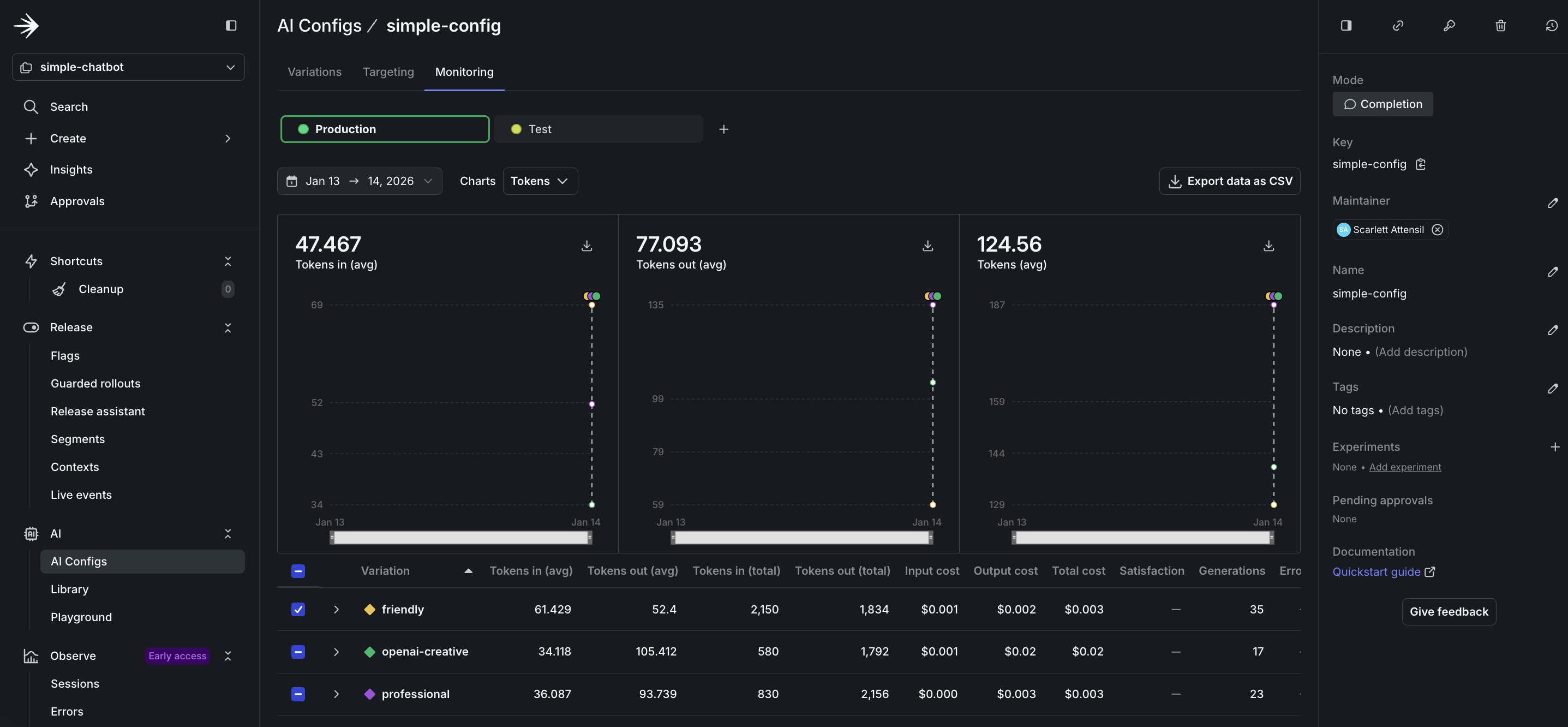

Step 4.4: Verify data in LaunchDarkly dashboard

After running the monitored chatbot:

Navigate to LaunchDarkly Dashboard

- Go to AI Configs

- Select simple-config

View the Monitoring Tab

The monitoring dashboard provides real-time insights into your AI Config’s performance. In the dashboard, you’ll see several key sections:

- Usage Overview: Displays the total number of requests served by your AI Config, broken down by variation. This helps you understand which configurations are being used most frequently.

- Performance Metrics: Shows response times and success rates for each interaction. A healthy AI Config should maintain high success rates (typically 95%+) and consistent response times.

- Cost Analysis: Tracks token usage across different models and providers, helping you optimize spending. Token tracking is essential for cost management and performance optimization. You can see both input and output token counts, which directly correlate to your AI provider costs.

The token metrics include:

- Input Tokens: The number of tokens sent to the model (prompt + context). Longer conversations accumulate more input tokens as history grows.

- Output Tokens: The number of tokens generated by the model in responses. This varies based on your max_tokens parameter and the model’s verbosity.

- Total Token Usage: Combined input and output tokens, which determines your provider billing. Monitor this to predict costs and identify optimization opportunities.

- Tokens by Variation: Compare token usage across different variations to identify which configurations are most efficient for your use case.

To learn more about monitoring and metrics, read Monitor AI Configs.

Before You Ship

You’ve built a working chatbot. When building your own app, here’s what to check before real users see it:

Your config is actually being used

If you’re always seeing the fallback model, your LaunchDarkly connection isn’t working.

Errors don’t crash the app

AI providers go down. Your app shouldn’t.
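A minimal sketch of that safety net, assuming `generate` is your own function that calls the provider. The returned success flag is where you would record a success or error metric:

```python
import logging
from typing import Callable, Tuple

logger = logging.getLogger("chatbot")

APOLOGY = "Sorry, I'm having trouble responding right now. Please try again."


def safe_generate(generate: Callable[[str], str], user_message: str) -> Tuple[str, bool]:
    """Return (reply, succeeded) without letting provider errors escape."""
    try:
        return generate(user_message), True
    except Exception:
        # Log the full traceback, but show the user a neutral message.
        logger.exception("Provider call failed")
        return APOLOGY, False


def flaky_provider(message: str) -> str:
    # Hypothetical provider that always fails, to exercise the error path.
    raise TimeoutError("simulated provider outage")


reply, ok = safe_generate(flaky_provider, "hello")
```

The chat loop keeps running after a failure, and the user sees a neutral message instead of a stack trace.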

You have a rollout plan

Don’t flip the switch for all users at once:

- Test with internal team (5% rollout)

- Roll out to beta users (25%)

- Monitor error rates and token usage

- Gradually increase to 100%

LaunchDarkly makes this easy with percentage rollouts on the Targeting tab.
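If you’re curious why a user who is in the 5% group stays included at 25%, the idea behind deterministic percentage bucketing can be sketched like this. This is a conceptual illustration with hypothetical function names, not LaunchDarkly’s actual bucketing algorithm:

```python
import hashlib


def rollout_bucket(user_key: str, config_key: str) -> float:
    """Map a user deterministically to a bucket in [0, 100)."""
    digest = hashlib.sha256(f"{config_key}:{user_key}".encode("utf-8")).hexdigest()
    return (int(digest[:8], 16) % 10_000) / 100.0


def in_rollout(user_key: str, config_key: str, percent: float) -> bool:
    """True if the user falls inside the first `percent` of buckets."""
    return rollout_bucket(user_key, config_key) < percent


users = [f"user-{i}" for i in range(1000)]
at_5 = {u for u in users if in_rollout(u, "simple-config", 5)}
at_25 = {u for u in users if in_rollout(u, "simple-config", 25)}
```

Because each user’s bucket never changes, raising the percentage only adds users; nobody flips back and forth between variations as the rollout grows.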

Online evals are considered

You won’t know if your AI is giving good answers unless you measure it. Consider adding online evaluations once you’re live. See when to add online evals for guidance.

That’s it. Ship it.

Appendix: When AI Configs Makes Sense

AI Configs aren’t for every project. Here’s when they help:

You’re experimenting with prompts

If you’re tweaking prompts daily, hardcoding them is painful. AI Configs let you test different prompts without redeploying.

Example: You run a customer support chatbot. You want to test whether a formal tone or casual tone works better. With AI Configs, you create two variations and switch between them from the dashboard.

You need different AI behavior for different users

Free users get fast, cheap responses. Paid users get slower, smarter responses.

Example: SaaS app with tiered pricing. Free tier uses gpt-4o-mini with temperature 0.3. Premium tier uses claude-3-5-sonnet with temperature 0.7. You target based on the tier attribute in the user context.

You want to switch providers without code changes

Your primary provider is down. You need to switch to a backup immediately.

Example: Anthropic has an outage. You log into LaunchDarkly, change the default variation from Anthropic to OpenAI, and save. All requests now use OpenAI. No redeployment needed.

You’re running cost optimization experiments

You think a cheaper model might work just as well for 80% of queries. You want to test it with real traffic.

Example: You create two variations, one using claude-3-haiku (cheap) and one using claude-3-5-sonnet (expensive). You roll out the cheap model to 20% of users and compare quality metrics.

When AI Configs might be overkill

- One-off batch jobs: If you’re processing 10,000 documents once, just hardcode the config.

- Single model, no experimentation: If you’re using GPT-4 and never changing it, AI Configs add complexity you don’t need.

Completion mode vs Agent mode

Note: If you’re using LangGraph or CrewAI, you probably want agent mode instead of the completion mode shown in this tutorial.

This tutorial uses completion mode (messages array format). If you’re building:

- Simple chatbots, content generation, or single-turn responses → Use completion mode

- Complex multi-step workflows with LangGraph, CrewAI, or custom agents → Use agent mode

The choice depends on your architecture. If you’re calling client.chat.completions.create() or similar, completion mode is probably right.

Conclusion

You’ve learned how to:

- ✅ Build an AI chatbot with multiple provider support

- ✅ Create and manage AI variations in LaunchDarkly

- ✅ Use contexts for targeted AI behavior

- ✅ Utilize comprehensive monitoring

Key Takeaway: LaunchDarkly AI Configs enable dynamic AI control across multiple providers without code changes, allowing rapid iteration and safe deployment.

Now go build something amazing!