LaunchDarkly's server-side streaming service is now deployed in two new regions, Europe and Asia Pacific, complementing our existing North America region. This update gives clients in those regions faster connections and faster SDK initialization, reducing application start-up times for LaunchDarkly customers who use server-side streaming SDKs to manage feature rollouts around the world.

The Flag Delivery team recently completed the rollout, with the goal of strengthening LaunchDarkly’s offering by improving service resilience across the board.

Significant improvement in stream initialization time

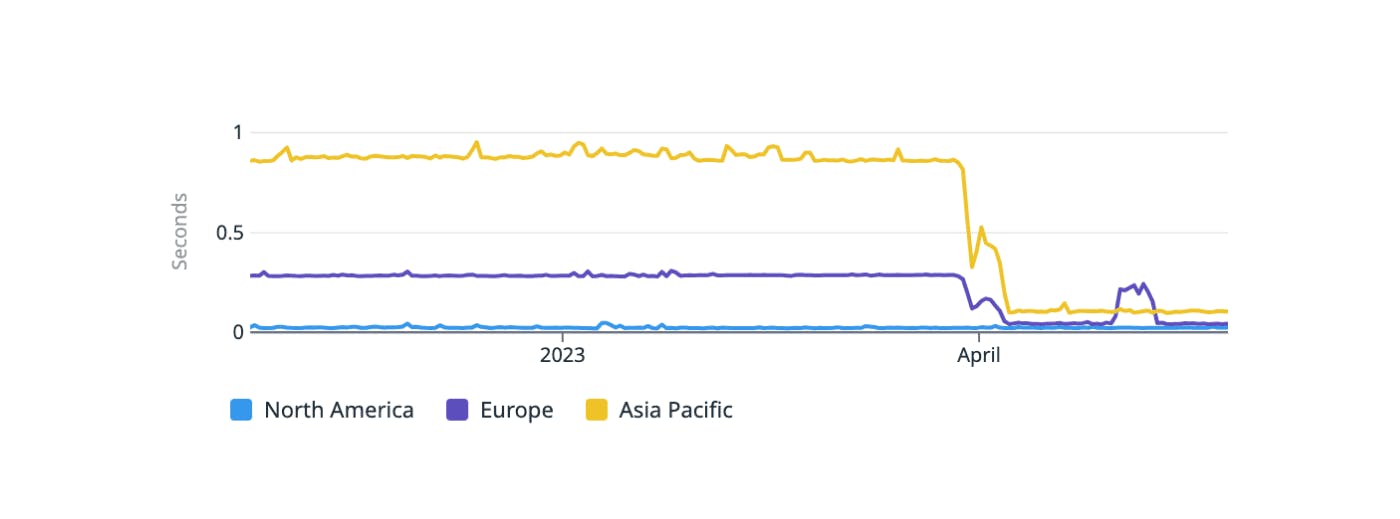

We continuously monitor the performance and correctness of our flag delivery system from around the world using synthetic tests. These tests showed that requests originating from Europe and Asia Pacific incurred higher SDK initialization latency than those originating from North America.
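A synthetic test of this kind can be approximated by timing how long a client takes to connect to a streaming endpoint and receive its first payload. The sketch below is a minimal illustration, not LaunchDarkly's actual test harness: it assumes a Server-Sent Events stream whose initial payload arrives as a `put` event, the function name `measure_stream_init` is hypothetical, and the authentication headers a real endpoint would require are omitted.

```python
import time
import urllib.request


def measure_stream_init(url: str, timeout: float = 10.0) -> float:
    """Return seconds from starting the request until the first `put` event
    line arrives on a Server-Sent Events stream.

    Hypothetical probe: a real SDK also parses and stores the payload, so
    this only approximates SDK initialization latency.
    """
    start = time.monotonic()
    # `timeout` bounds the connection attempt and each blocking read.
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        for raw in resp:  # iterating an HTTP response yields lines
            line = raw.decode("utf-8").strip()
            # SSE event-type lines look like "event: put"
            if line.startswith("event:") and line[len("event:"):].strip() == "put":
                return time.monotonic() - start
    raise RuntimeError("stream ended before the initial 'put' event")
```

Running the same probe from machines in several regions makes regional differences visible as differences in the returned latency.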

The physical distance between client and server adds significant network latency. To reduce it, we moved the servers, and in this case the data as well, closer to the clients. With new data centers in Europe and Asia Pacific, in-region requests are now served close to home instead of by our primary data center in North America.
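A back-of-the-envelope calculation shows why distance matters so much for stream initialization. Light in optical fiber travels at roughly 200,000 km/s, so propagation delay alone sets a floor on every round trip, and opening a TLS-secured stream takes several round trips before any flag data arrives. The sketch assumes three round trips (TCP handshake, TLS 1.3 handshake, HTTP request) and uses illustrative distances; real network paths are longer than straight-line distance, so actual latency is higher.

```python
# Light in optical fiber travels at roughly 2/3 of its speed in a vacuum:
# about 200,000 km/s, i.e. 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on one round trip, from propagation delay alone."""
    return 2 * distance_km / FIBER_KM_PER_MS


def stream_setup_floor_ms(distance_km: float, round_trips: int = 3) -> float:
    """Propagation floor for opening a stream, assuming one round trip each
    for the TCP handshake, the TLS 1.3 handshake, and the HTTP request."""
    return round_trips * min_rtt_ms(distance_km)


# Illustrative distances only, not LaunchDarkly's actual topology.
for label, km in [("cross-Pacific, ~15,000 km", 15_000), ("in-region, ~1,000 km", 1_000)]:
    print(f"{label}: >= {stream_setup_floor_ms(km):.0f} ms")
# cross-Pacific, ~15,000 km: >= 450 ms
# in-region, ~1,000 km: >= 30 ms
```

Under these assumptions, serving a client from within its region removes hundreds of milliseconds from connection setup before any server-side processing is even counted.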

The per-region results show a clear improvement: Asia Pacific saw a reduction of more than 75% in server-side SDK stream initialization latency.

This chart shows the average time taken to initialize a server-side streaming SDK in each of the three regions: North America, Europe, and Asia Pacific. Note that our synthetic tests run on infrastructure that may not reflect our customers’ actual environments, so the streaming SDK initialization latency our customers see may be higher.

Service resilience as a first-class requirement

At LaunchDarkly, we treat service resilience as a core requirement.

A resilient system is one that continues to function despite failures. Building one requires planning at every level of the architecture. Both Google Cloud and AWS contend that resilience also extends to people and culture, because building a resilient system is a continuous process: we keep learning from system failures and iterating to make the system more resilient. “Learn and grow” is a core value at LaunchDarkly.

LaunchDarkly’s streaming service is built with several resilience features, such as timeouts with retries, exponential backoff, jitter, and caching at various levels. The service is deployed across multiple availability zones and can auto-scale.
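Retries with exponential backoff and jitter are commonly combined as "full jitter": each retry waits a random time between zero and an exponentially growing, capped ceiling, so that many clients reconnecting after an outage do not retry in lockstep. The following is a generic sketch of that technique, not LaunchDarkly's implementation; the function name, the parameter defaults, and the choice of which exceptions count as retryable are all assumptions.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    op: Callable[[], T],
    attempts: int = 5,
    base: float = 1.0,  # seconds; ceiling for the first retry delay
    cap: float = 30.0,  # seconds; largest ceiling any delay may use
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Call `op`, retrying on timeouts and connection errors with
    exponential backoff and full jitter. Re-raises the last error once
    all attempts are exhausted."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    rng = rng or random.Random()
    for n in range(attempts):
        try:
            return op()
        except (TimeoutError, ConnectionError):
            if n == attempts - 1:
                raise  # out of attempts: surface the last error
            # The ceiling doubles each attempt (base, 2*base, 4*base, ...)
            # up to `cap`; the delay is drawn uniformly from [0, ceiling].
            ceiling = min(cap, base * 2 ** n)
            sleep(rng.uniform(0, ceiling))
    raise AssertionError("unreachable")
```

Passing `sleep` and `rng` as parameters keeps the function testable: a test can record the delays instead of actually waiting.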

The new data centers further improve service resilience: we can now fail over to a different region if there are region-wide issues. The ability to route traffic away from a region also lets us perform regional maintenance with more confidence.
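Conceptually, this kind of failover combines two ideas: send each client to the lowest-latency region, but only among regions currently considered healthy. Draining a region for maintenance then amounts to removing it from the healthy set. The sketch below models that decision in a few lines; the region names and latency figures are hypothetical, and in production the choice is made by the DNS routing layer rather than by application code.

```python
def pick_region(latency_ms: dict[str, float], healthy: set[str]) -> str:
    """Return the lowest-latency region among those currently healthy."""
    candidates = {region: ms for region, ms in latency_ms.items() if region in healthy}
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.__getitem__)


# Hypothetical latencies measured from a client in Europe.
eu_client = {"eu": 20.0, "na": 90.0, "ap": 250.0}

print(pick_region(eu_client, healthy={"eu", "na", "ap"}))  # eu
print(pick_region(eu_client, healthy={"na", "ap"}))        # na (Europe drained)
```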

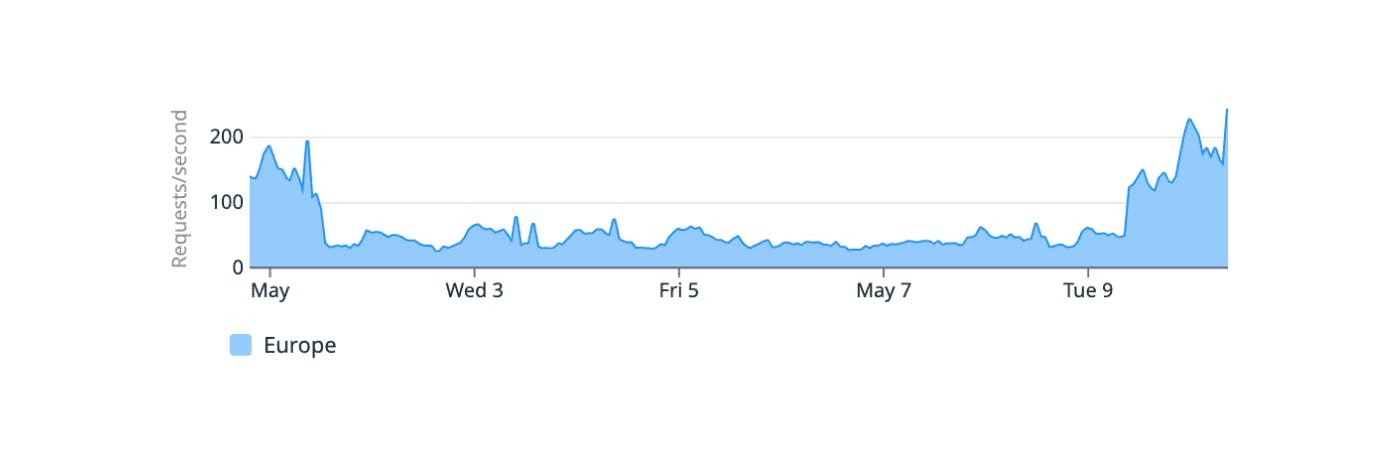

For example, we routed traffic away from the Europe data center to other regions while that region was under planned maintenance. Observe the drop in requests per second, followed by an increase.

Updated server-side streaming architecture

With the goals of increasing service resilience and reducing SDK initialization latency, all in a cost-efficient manner, the Flag Delivery team researched and compared several approaches.

Below is a simple diagram of the updated server-side streaming architecture with the new data centers. The left side shows the outside world, from which server-side streaming SDKs connect to LaunchDarkly services. The right side shows the three data centers where the server-side streaming infrastructure is deployed. The colored lines depict traffic flow; for example, the blue line shows that traffic originating from Asia Pacific is served by the data center in Asia Pacific, and so on.


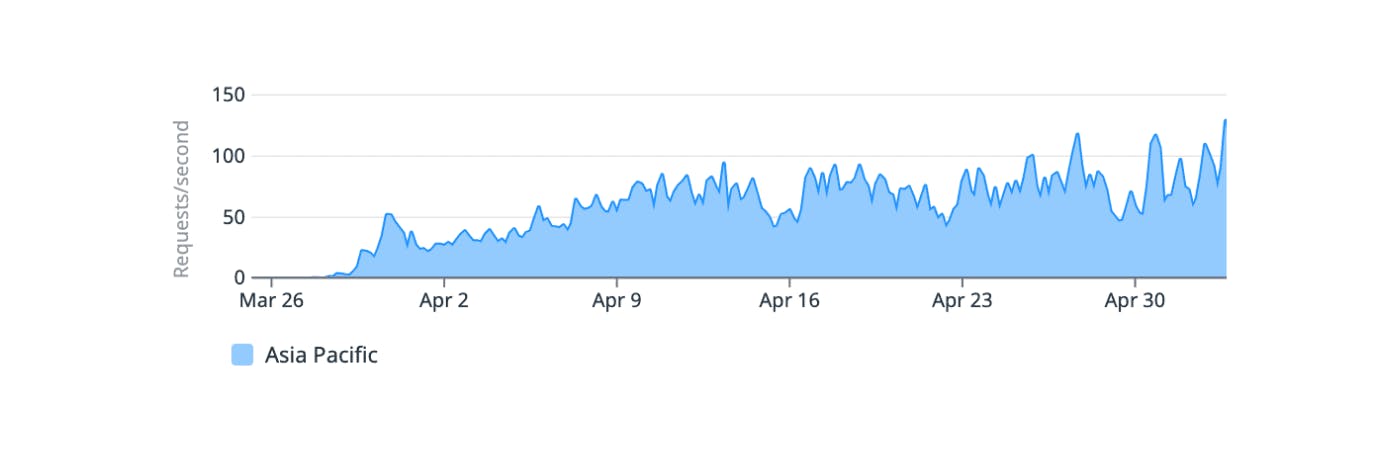

The routing layer was one of the most interesting pieces to build. A set of AWS Route 53 records distributes traffic among the load balancers in the three data centers. To meet the stream initialization latency requirement, we used Route 53’s latency-based routing. Weighted DNS records at the top level enable regional failovers and load shedding. Using this routing layer, we gradually onboarded traffic to the new regions, ramping from 0% to 100%.
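The two tiers can be modeled in a few lines. Route 53 weighted routing answers each DNS query with a record chosen in proportion to its weight, so shifting weight between records shifts the share of new connections. Purely for illustration, the sketch assumes a top-level pair of weighted records: one pointing at a latency-routed pool spanning all three regions and one pointing at the original North America deployment. Raising the first record's weight from 0 to 100 models the gradual onboarding. The record names, region names, and latencies are hypothetical, and this is a simplified simulation rather than Route 53's implementation.

```python
import random


def weighted_answer(records: dict[str, int], rng: random.Random) -> str:
    """Pick one record name with probability weight / sum(weights)."""
    names = list(records)
    return rng.choices(names, weights=[records[n] for n in names], k=1)[0]


def resolve(client_latency_ms: dict[str, float], ramp_percent: int, rng: random.Random) -> str:
    """Two-tier model: a weighted top-level record, then latency-based routing."""
    top = weighted_answer(
        {"latency-pool": ramp_percent, "na-only": 100 - ramp_percent}, rng
    )
    if top == "na-only":
        return "na"
    # Latency tier: the region with the lowest measured latency to this client.
    return min(client_latency_ms, key=client_latency_ms.__getitem__)


# Hypothetical latencies from a client in Asia Pacific, with 25% of traffic ramped.
ap_client = {"na": 160.0, "eu": 230.0, "ap": 15.0}
rng = random.Random(42)
answers = [resolve(ap_client, ramp_percent=25, rng=rng) for _ in range(10_000)]
print(answers.count("ap") / len(answers))  # roughly 0.25
```

Because each DNS answer is drawn independently, the observed share fluctuates around the configured weight, and because resolvers cache answers for the record's TTL, a weight change takes effect gradually rather than instantly.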

A chart of requests per second in the Asia Pacific region shows this gradual increase.

Feedback

If you are using one of our server-side streaming SDKs, you are already using the new system and are hopefully seeing lower SDK stream initialization latency. If you have any questions or feedback about your experience with our server-side streaming, we would love to hear from you. As always, feel free to email our product team at feedback@launchdarkly.com.